Hi,

I'm a bit puzzled.

I have installed my system so that it is 99.9% complete.

The host boots from nvme1, and the VMs/containers are on nvme2, which is mounted to /var.

Then I decided to clone the system to identical hardware by

I edited the container config files to assign different IP-addresses and booted the system.

Initially there were complaints by the browser about identical certificate serial numbers, but that is solved.

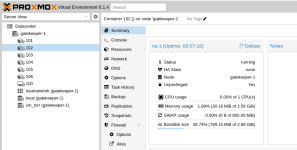

I can see the containers, however their names are not shown as on the original system.

They are in status unknown in the web-interface. I can start them and ssh into them just fine

The cloned nameserver for example works just fine.

Is this a cosmetic issue and how can it be solved? I fail to see nay smoking guns in the logs...

kind regards

I'm a bit puzzled.

I have installed my system so that it is 99.9% complete.

The host boots from nvme1 and the vm/containers are on nvme2, which is mounted to /var

Then I decided to clone the system to identical hardware by

Code:

dd if=source-disk of=destination-diskInitially there were complaints by the browser about identical certificate serial numbers, but that is solved.

I can see the containers; however, their names are not shown as they are on the original system.

They show status "unknown" in the web interface, but I can start them and ssh into them just fine.

The cloned nameserver for example works just fine.

Is this a cosmetic issue, and how can it be solved? I fail to see any smoking guns in the logs...

kind regards