pveversion -v

/etc/pve/storage.cfg

pct config 103

If I create a random 8G file in /root of the container and run vzdump, the container backs up reasonably quickly.

However, if I replace the random file with a sparse file of the same size, the same backup command is much slower.

If I increase the size of the sparse file, the backup becomes slower and slower until it bottoms out at ~3MiB/s. This is unbearably slow.

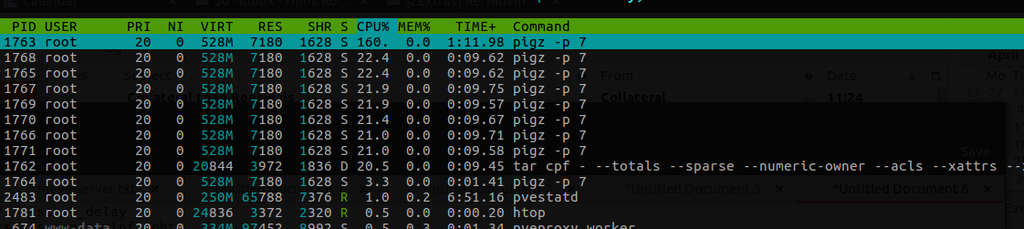

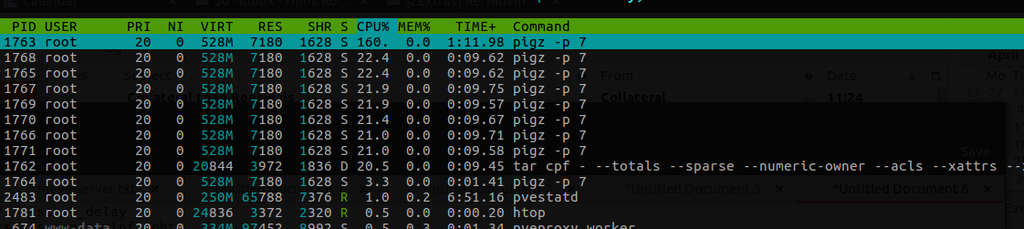

I've also noticed that with the random file, the multiple pigz threads stay busy throughout the vzdump run. With the sparse file present, the process starts off with the pigz threads in use:

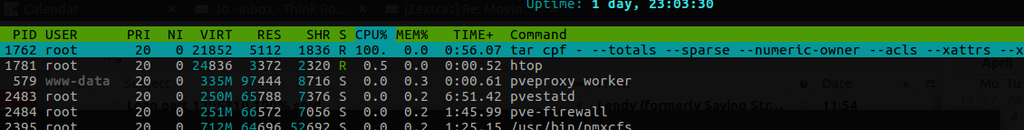

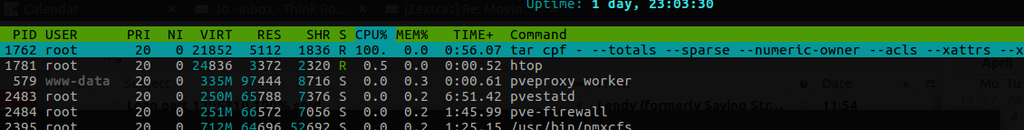

and then the pigz threads become dormant leaving only tar:

What am I doing wrong / how can I fix this?

pveversion -v

Code:

proxmox-ve: 4.4-86 (running kernel: 4.4.49-1-pve)

pve-manager: 4.4-13 (running version: 4.4-13/7ea56165)

pve-kernel-4.4.49-1-pve: 4.4.49-86

lvm2: 2.02.116-pve3

corosync-pve: 2.4.2-2~pve4+1

libqb0: 1.0.1-1

pve-cluster: 4.0-49

qemu-server: 4.0-110

pve-firmware: 1.1-11

libpve-common-perl: 4.0-94

libpve-access-control: 4.0-23

libpve-storage-perl: 4.0-76

pve-libspice-server1: 0.12.8-2

vncterm: 1.3-2

pve-docs: 4.4-4

pve-qemu-kvm: 2.7.1-4

pve-container: 1.0-97

pve-firewall: 2.0-33

pve-ha-manager: 1.0-40

ksm-control-daemon: 1.2-1

glusterfs-client: 3.5.2-2+deb8u3

lxc-pve: 2.0.7-4

lxcfs: 2.0.6-pve1

criu: 1.6.0-1

novnc-pve: 0.5-9

smartmontools: 6.5+svn4324-1~pve80

zfsutils: 0.6.5.9-pve15~bpo80

/etc/pve/storage.cfg

Code:

dir: local

path /var/lib/vz

content iso,backup,vztmpl

zfspool: local-zfs

pool rpool/data

content rootdir,images

sparse 1

pct config 103

Code:

arch: i386

cores: 2

cpulimit: 2

hostname: dirac

memory: 1536

nameserver: 127.0.0.1 213.133.98.98

net0: name=eth0,bridge=vmbr0,gw=10.0.0.1,hwaddr=2E:56:98:41:27:95,ip=10.0.0.103/32,type=veth

ostype: ubuntu

rootfs: local-zfs:subvol-103-disk-1,size=15G

searchdomain: co.uk

swap: 512

If I create a random 8G file in /root of the container

Code:

dd if=/dev/urandom of=file bs=64M count=128 iflag=fullblock

Code:

vzdump 103 --quiet --mode stop --compress gzip -pigz 7 --storage local --maxfiles 1

Code:

Total bytes written: 13600952320 (13GiB, 64MiB/s)

However, if I replace the random file with a sparse file of the same size

Code:

dd if=/dev/zero of=file bs=1 count=0 seek=8G
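To check that the dd command above really produces a sparse file, one option is to compare the file's apparent size with the blocks actually allocated for it. The following is a minimal sketch, assuming GNU coreutils (`stat -c`) and using a scaled-down 64M temporary file rather than the 8G file from the post; the variable names are made up for illustration.

```shell
# Hypothetical demo file; the post itself uses an 8G file named "file".
demo=$(mktemp)

# Same technique as the dd command above, scaled down to 64M:
# write nothing (count=0) but seek to the target offset, which sets the length.
dd if=/dev/zero of="$demo" bs=1 count=0 seek=64M 2>/dev/null

apparent=$(stat -c %s "$demo")          # logical size in bytes
allocated_blocks=$(stat -c %b "$demo")  # blocks actually allocated on disk

echo "apparent=${apparent} bytes, allocated_blocks=${allocated_blocks}"
rm -f "$demo"
```

A sparse file shows a large apparent size with few or no allocated blocks, while the random file created earlier should show allocated blocks roughly matching its size.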

Code:

Total bytes written: 5011015680 (4.7GiB, 24MiB/s)

If I increase the size of the sparse file, the backup becomes slower and slower until it bottoms out at ~3MiB/s. This is unbearably slow.
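As a rough sanity check on the two "Total bytes written" figures above, dividing the bytes written by the reported rate gives an estimate of how long each run took. This is only a sketch: tar rounds the rate it prints, so the results are approximate, and the function name `elapsed_seconds` is made up for illustration.

```shell
# Estimate elapsed seconds from a tar "Total bytes written" line.
# Uses integer division; tar rounds the reported rate, so treat this as approximate.
elapsed_seconds() {
    bytes=$1
    mib_per_s=$2
    echo $(( bytes / (mib_per_s * 1024 * 1024) ))
}

random_run=$(elapsed_seconds 13600952320 64)  # archive containing the 8G random file
sparse_run=$(elapsed_seconds 5011015680 24)   # archive containing the 8G sparse file

echo "random: ~${random_run}s, sparse: ~${sparse_run}s"
```

By this estimate both runs take roughly 200 seconds, even though the sparse run writes about 8GiB less to the archive. That would be consistent with the lower MiB/s figure reflecting time spent on the sparse file without producing output, rather than the archive itself being written more slowly.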
