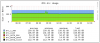

Hello, I'm wondering why the ZFS ARC cache suddenly empties when vzdump starts (once every week). If you take a look at the graphs, you can see CPU utilization increase (vzdump starts using pigz) and, at the same time, a steady drop in memory usage, which corresponds to the ARC shrinking...

Yesterday ARC cache memory usage was at 10 GB; now it is at the lower limit.

Can anyone help me explain this?

Thank you!

arc_summary dump:

Code:

ZFS Subsystem Report Sat Nov 03 09:02:03 2018

ARC Summary: (HEALTHY)

Memory Throttle Count: 0

ARC Misc:

Deleted: 1.32G

Mutex Misses: 252.99k

Evict Skips: 445.25M

ARC Size: 20.02% 2.00 GiB

Target Size: (Adaptive) 20.39% 2.04 GiB

Min Size (Hard Limit): 20.00% 2.00 GiB

Max Size (High Water): 5:1 10.00 GiB

ARC Size Breakdown:

Recently Used Cache Size: 7.81% 118.28 MiB

Frequently Used Cache Size: 92.19% 1.36 GiB

ARC Hash Breakdown:

Elements Max: 10.23M

Elements Current: 49.28% 5.04M

Collisions: 1.13G

Chain Max: 11

Chains: 1.03M

ARC Total accesses: 5.02G

Cache Hit Ratio: 69.15% 3.47G

Cache Miss Ratio: 30.85% 1.55G

Actual Hit Ratio: 68.05% 3.42G

Data Demand Efficiency: 46.68% 1.57G

Data Prefetch Efficiency: 7.11% 762.18M

CACHE HITS BY CACHE LIST:

Anonymously Used: 1.36% 47.26M

Most Recently Used: 31.41% 1.09G

Most Frequently Used: 67.01% 2.33G

Most Recently Used Ghost: 0.14% 4.96M

Most Frequently Used Ghost: 0.08% 2.74M

CACHE HITS BY DATA TYPE:

Demand Data: 21.07% 731.20M

Prefetch Data: 1.56% 54.22M

Demand Metadata: 77.21% 2.68G

Prefetch Metadata: 0.16% 5.56M

CACHE MISSES BY DATA TYPE:

Demand Data: 53.95% 835.36M

Prefetch Data: 45.72% 707.96M

Demand Metadata: 0.24% 3.67M

Prefetch Metadata: 0.10% 1.55M

L2 ARC Summary: (HEALTHY)

Low Memory Aborts: 177

Free on Write: 7.09M

R/W Clashes: 184

Bad Checksums: 0

IO Errors: 0

L2 ARC Size: (Adaptive) 39.60 GiB

Compressed: 63.36% 25.09 GiB

Header Size: 1.08% 437.96 MiB

L2 ARC Evicts:

Lock Retries: 5.54k

Upon Reading: 0

L2 ARC Breakdown: 1.55G

Hit Ratio: 0.40% 6.26M

Miss Ratio: 99.60% 1.54G

Feeds: 941.12k

L2 ARC Writes:

Writes Sent: 100.00% 709.02k

DMU Prefetch Efficiency: 221.34M

Hit Ratio: 44.23% 97.90M

Miss Ratio: 55.77% 123.45M

ZFS Tunables:

dbuf_cache_hiwater_pct 10

dbuf_cache_lowater_pct 10

dbuf_cache_max_bytes 104857600

dbuf_cache_max_shift 5

dmu_object_alloc_chunk_shift 7

ignore_hole_birth 1

l2arc_feed_again 1

l2arc_feed_min_ms 200

l2arc_feed_secs 1

l2arc_headroom 2

l2arc_headroom_boost 200

l2arc_noprefetch 0

l2arc_norw 0

l2arc_write_boost 33554432

l2arc_write_max 16777216

metaslab_aliquot 524288

metaslab_bias_enabled 1

metaslab_debug_load 0

metaslab_debug_unload 0

metaslab_fragmentation_factor_enabled 1

metaslab_lba_weighting_enabled 1

metaslab_preload_enabled 1

metaslabs_per_vdev 200

send_holes_without_birth_time 1

spa_asize_inflation 24

spa_config_path /etc/zfs/zpool.cache

spa_load_verify_data 1

spa_load_verify_maxinflight 10000

spa_load_verify_metadata 1

spa_slop_shift 5

zfetch_array_rd_sz 1048576

zfetch_max_distance 8388608

zfetch_max_streams 8

zfetch_min_sec_reap 2

zfs_abd_scatter_enabled 1

zfs_abd_scatter_max_order 10

zfs_admin_snapshot 1

zfs_arc_average_blocksize 8192

zfs_arc_dnode_limit 0

zfs_arc_dnode_limit_percent 10

zfs_arc_dnode_reduce_percent 10

zfs_arc_grow_retry 0

zfs_arc_lotsfree_percent 10

zfs_arc_max 10737418240

zfs_arc_meta_adjust_restarts 4096

zfs_arc_meta_limit 0

zfs_arc_meta_limit_percent 75

zfs_arc_meta_min 0

zfs_arc_meta_prune 10000

zfs_arc_meta_strategy 1

zfs_arc_min 2147483648

zfs_arc_min_prefetch_lifespan 0

zfs_arc_p_dampener_disable 1

zfs_arc_p_min_shift 0

zfs_arc_pc_percent 0

zfs_arc_shrink_shift 0

zfs_arc_sys_free 0

zfs_autoimport_disable 1

zfs_checksums_per_second 20

zfs_compressed_arc_enabled 1

zfs_dbgmsg_enable 0

zfs_dbgmsg_maxsize 4194304

zfs_dbuf_state_index 0

zfs_deadman_checktime_ms 5000

zfs_deadman_enabled 1

zfs_deadman_synctime_ms 1000000

zfs_dedup_prefetch 0

zfs_delay_min_dirty_percent 60

zfs_delay_scale 500000

zfs_delays_per_second 20

zfs_delete_blocks 20480

zfs_dirty_data_max 4294967296

zfs_dirty_data_max_max 4294967296

zfs_dirty_data_max_max_percent 25

zfs_dirty_data_max_percent 10

zfs_dirty_data_sync 67108864

zfs_dmu_offset_next_sync 0

zfs_expire_snapshot 300

zfs_flags 0

zfs_free_bpobj_enabled 1

zfs_free_leak_on_eio 0

zfs_free_max_blocks 100000

zfs_free_min_time_ms 1000

zfs_immediate_write_sz 32768

zfs_max_recordsize 1048576

zfs_mdcomp_disable 0

zfs_metaslab_fragmentation_threshold 70

zfs_metaslab_segment_weight_enabled 1

zfs_metaslab_switch_threshold 2

zfs_mg_fragmentation_threshold 85

zfs_mg_noalloc_threshold 0

zfs_multihost_fail_intervals 5

zfs_multihost_history 0

zfs_multihost_import_intervals 10

zfs_multihost_interval 1000

zfs_multilist_num_sublists 0

zfs_no_scrub_io 0

zfs_no_scrub_prefetch 0

zfs_nocacheflush 0

zfs_nopwrite_enabled 1

zfs_object_mutex_size 64

zfs_pd_bytes_max 52428800

zfs_per_txg_dirty_frees_percent 30

zfs_prefetch_disable 0

zfs_read_chunk_size 1048576

zfs_read_history 0

zfs_read_history_hits 0

zfs_recover 0

zfs_recv_queue_length 16777216

zfs_resilver_delay 2

zfs_resilver_min_time_ms 3000

zfs_scan_idle 50

zfs_scan_ignore_errors 0

zfs_scan_min_time_ms 1000

zfs_scrub_delay 4

zfs_send_corrupt_data 0

zfs_send_queue_length 16777216

zfs_sync_pass_deferred_free 2

zfs_sync_pass_dont_compress 5

zfs_sync_pass_rewrite 2

zfs_sync_taskq_batch_pct 75

zfs_top_maxinflight 32

zfs_txg_history 0

zfs_txg_timeout 5

zfs_vdev_aggregation_limit 131072

zfs_vdev_async_read_max_active 3

zfs_vdev_async_read_min_active 1

zfs_vdev_async_write_active_max_dirty_percent 60

zfs_vdev_async_write_active_min_dirty_percent 30

zfs_vdev_async_write_max_active 10

zfs_vdev_async_write_min_active 2

zfs_vdev_cache_bshift 16

zfs_vdev_cache_max 16384

zfs_vdev_cache_size 0

zfs_vdev_max_active 1000

zfs_vdev_mirror_non_rotating_inc 0

zfs_vdev_mirror_non_rotating_seek_inc 1

zfs_vdev_mirror_rotating_inc 0

zfs_vdev_mirror_rotating_seek_inc 5

zfs_vdev_mirror_rotating_seek_offset 1048576

zfs_vdev_queue_depth_pct 1000

zfs_vdev_raidz_impl [fastest] original scalar sse2 ssse3 avx2

zfs_vdev_read_gap_limit 32768

zfs_vdev_scheduler noop

zfs_vdev_scrub_max_active 2

zfs_vdev_scrub_min_active 1

zfs_vdev_sync_read_max_active 10

zfs_vdev_sync_read_min_active 10

zfs_vdev_sync_write_max_active 10

zfs_vdev_sync_write_min_active 10

zfs_vdev_write_gap_limit 4096

zfs_zevent_cols 80

zfs_zevent_console 0

zfs_zevent_len_max 128

zil_replay_disable 0

zil_slog_bulk 786432

zio_delay_max 30000

zio_dva_throttle_enabled 1

zio_requeue_io_start_cut_in_line 1

zio_taskq_batch_pct 75

zvol_inhibit_dev 0

zvol_major 230

zvol_max_discard_blocks 16384

zvol_prefetch_bytes 131072

zvol_request_sync 0

zvol_threads 32

zvol_volmode 1
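In case it helps anyone reproduce this, here is a minimal sketch for logging the ARC size against its limits while vzdump runs. It assumes the usual ZFS on Linux kstat file at `/proc/spl/kstat/zfs/arcstats` with `name type data` rows; the function names and the `size` value in the sample text are hypothetical, while `c_min` and `c_max` are the `zfs_arc_min` and `zfs_arc_max` values from the dump above.

```python
from pathlib import Path

# Assumed location of the ARC kstats on ZFS on Linux.
ARCSTATS_PATH = Path("/proc/spl/kstat/zfs/arcstats")

GIB = 1024 ** 3

# Fallback sample so the sketch also runs on a machine without ZFS.
# c_min / c_max come from the tunables above; size is a made-up value.
SAMPLE_ARCSTATS = """\
name                            type data
size                            4    2149000000
c_min                           4    2147483648
c_max                           4    10737418240
"""


def parse_arcstats(text):
    """Parse 'name type data' rows into a dict of integer counters."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        # Data rows have exactly three fields and a numeric value;
        # header lines are skipped by this check.
        if len(fields) == 3 and fields[2].isdigit():
            stats[fields[0]] = int(fields[2])
    return stats


def arc_report(stats):
    """Return ARC size and limits in GiB plus size as a share of c_max."""
    return {
        "size_gib": stats["size"] / GIB,
        "min_gib": stats["c_min"] / GIB,
        "max_gib": stats["c_max"] / GIB,
        "pct_of_max": 100.0 * stats["size"] / stats["c_max"],
    }


if __name__ == "__main__":
    if ARCSTATS_PATH.exists():
        text = ARCSTATS_PATH.read_text()
    else:
        text = SAMPLE_ARCSTATS
    report = arc_report(parse_arcstats(text))
    print(
        "ARC size {size_gib:.2f} GiB "
        "(min {min_gib:.2f}, max {max_gib:.2f}, "
        "{pct_of_max:.2f}% of max)".format(**report)
    )
```

Running this from cron every minute or so around the backup window should show whether the ARC drops straight to `c_min` when vzdump starts or shrinks gradually.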
