Hi, I hope everybody is OK!

I have noticed something weird, or perhaps it is just the way it is.

I have 3 nodes, each with 3 NICs: 2x 1G and 1x 10G.

So I went ahead and created a VXLAN zone and a vnet like this:

The 172.18.0.x IPs are on the 10G SFP NIC. This network carries only cluster communication, which I believe is very light.

MTU 9000 is not set either on the physical NIC or on the switch!

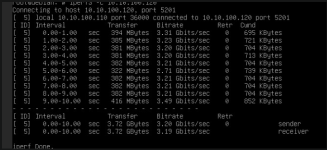

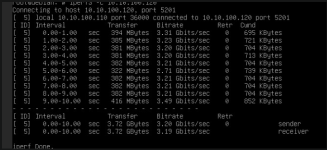

But only with MTU 9000 in the SDN zone and MTU 8950 in the VM NIC configuration (virtio) could I get about 3 Gbit/s in an iperf3 test between the 2 VMs.
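For reference, the 8950 figure is the zone MTU minus the VXLAN encapsulation overhead. A minimal sketch of that arithmetic, assuming an IPv4 underlay with no VLAN tag on the inner frame (the constant and function names are only illustrative):

```python
# VXLAN encapsulation overhead, assuming an IPv4 underlay and an
# untagged inner Ethernet frame (assumption, not taken from the post).
INNER_ETHERNET = 14  # inner Ethernet header carried inside the tunnel
VXLAN_HEADER = 8     # VXLAN header
OUTER_UDP = 8        # outer UDP header
OUTER_IPV4 = 20      # outer IPv4 header (an IPv6 underlay would need 40)


def vxlan_overhead() -> int:
    """Bytes the encapsulation takes out of the underlay MTU."""
    return INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


def inner_mtu(underlay_mtu: int) -> int:
    """Largest MTU a VM NIC can use without exceeding the underlay MTU."""
    return underlay_mtu - vxlan_overhead()


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"underlay MTU {mtu} -> VM MTU {inner_mtu(mtu)}")
```

With these assumptions an MTU of 9000 leaves 8950 for the VM, and the default 1500 leaves 1450.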

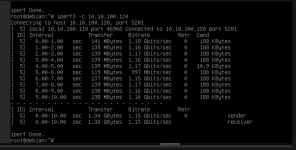

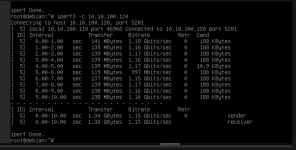

Without MTU 9000/8950, i.e. using the default 1500, I could get only 1.2 to 1.3 Gbit/s.
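To put the two results side by side, here is a rough sketch of how many packets per second each run implies. It assumes the figures are in Gbit/s and that every packet is a full MTU in size, ignoring protocol headers, so the numbers are only indicative:

```python
def packets_per_second(throughput_gbit: float, mtu_bytes: int) -> float:
    """Approximate packet rate needed to carry a given throughput
    when every packet is exactly mtu_bytes long (simplifying assumption)."""
    bits_per_packet = mtu_bytes * 8
    return throughput_gbit * 1e9 / bits_per_packet


if __name__ == "__main__":
    # Measured values from the iperf3 tests described in this post.
    runs = [(1.3, 1500), (3.0, 8950)]
    for gbit, mtu in runs:
        pps = packets_per_second(gbit, mtu)
        print(f"{gbit} Gbit/s at MTU {mtu}: about {pps:,.0f} packets/s")
```

Under these assumptions the MTU 1500 run moves roughly 108,000 packets/s while the faster MTU 8950 run moves only about 42,000 packets/s, so the limit might be per-packet processing cost rather than raw bandwidth. That is a guess, not something I have verified.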

My question is: why?

Is there something in the SDN that limits the speed of the NICs?

Sorry if I have not made myself clear enough.

Any help will do!

Thank you

Best regards

With MTU 1500

With MTU 9000

Code:

    pve101:/etc/pve/sdn# cat vnets.cfg
    vnet: vxnet1
            zone vxzone
            tag 100000
            vlanaware 1

    pve101:/etc/pve/sdn# cat zones.cfg
    vxlan: vxzone
            ipam pve
            mtu 9000
            peers 172.18.0.20 172.18.0.30
