Hey guys,

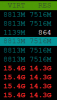

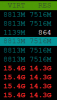

We have guest VMs that are configured with 7 GB/14 GB of RAM. When I look in htop, the VMs take up more virtual RAM than that.

This breaks our resource calculation and forces the server to use the swap file. Sometimes VMs are randomly shut down by the server.

What exactly does this mean? Can we somehow turn this "VIRT" overhead off?
