One of our VMs went down while a backup of it was running from Proxmox. May I know what the possible reason could be?

VMs went down while running a backup

- Thread starter roger cheng

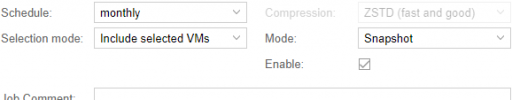

Backups for the VMs are configured to run to PBS, and the resource usage hits the maximum while the backup is running.

That's a good one. You haven't run out of resources on the node, I think?

Hi,

Please see the attached screenshot. We chose the snapshot option, but while the backup was running the VM's resource usage hit the maximum and the VM got stuck with no response, so we had to restart the VM to get it working again.

Thank you

>full backup log and the system log/journal from around the time the issue

May I know if it is possible to get a log specific to the VM? If yes, could you please provide the steps?

++++++++

root@example:~# pveversion -v

proxmox-ve: 8.0.1 (running kernel: 6.2.16-4-pve)

pve-manager: 8.0.3 (running version: 8.0.3/bbf3993334bfa916)

pve-kernel-6.2: 8.0.3

pve-kernel-5.15: 7.4-4

pve-kernel-5.13: 7.1-9

pve-kernel-5.4: 6.4-7

pve-kernel-6.2.16-4-pve: 6.2.16-5

pve-kernel-5.3: 6.1-6

pve-kernel-5.15.108-1-pve: 5.15.108-1

pve-kernel-5.15.85-1-pve: 5.15.85-1

pve-kernel-5.15.39-1-pve: 5.15.39-1

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-1-pve: 5.13.19-3

pve-kernel-5.4.143-1-pve: 5.4.143-1

pve-kernel-5.4.140-1-pve: 5.4.140-1

pve-kernel-5.4.128-1-pve: 5.4.128-2

pve-kernel-5.4.106-1-pve: 5.4.106-1

pve-kernel-5.4.78-2-pve: 5.4.78-2

pve-kernel-5.4.65-1-pve: 5.4.65-1

pve-kernel-5.4.44-1-pve: 5.4.44-1

pve-kernel-5.4.41-1-pve: 5.4.41-1

pve-kernel-5.3.18-3-pve: 5.3.18-3

pve-kernel-5.3.10-1-pve: 5.3.10-1

ceph: 17.2.6-pve1+3

ceph-fuse: 17.2.6-pve1+3

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown: residual config

ifupdown2: 3.2.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-3

libknet1: 1.25-pve1

libproxmox-acme-perl: 1.4.6

libproxmox-backup-qemu0: 1.4.0

libproxmox-rs-perl: 0.3.0

libpve-access-control: 8.0.3

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.0.6

libpve-guest-common-perl: 5.0.3

libpve-http-server-perl: 5.0.4

libpve-rs-perl: 0.8.4

libpve-storage-perl: 8.0.2

libqb0: 1.0.5-1

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve3

novnc-pve: 1.4.0-2

proxmox-backup-client: 3.0.1-1

proxmox-backup-file-restore: 3.0.1-1

proxmox-kernel-helper: 8.0.2

proxmox-mail-forward: 0.2.0

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.2

proxmox-widget-toolkit: 4.0.6

pve-cluster: 8.0.2

pve-container: 5.0.4

pve-docs: 8.0.4

pve-edk2-firmware: 3.20230228-4

pve-firewall: 5.0.3

pve-firmware: 3.7-1

pve-ha-manager: 4.0.2

pve-i18n: 3.0.5

pve-qemu-kvm: 8.0.2-3

pve-xtermjs: 4.16.0-3

qemu-server: 8.0.6

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.1.12-pve1

qm config <ID> --current (replacing <ID> with the actual ID of the VM).

See the VM's or node's Task History in the UI for the backup task log.

journalctl --since <date> for the system journal.

Code:

journalctl --since 2023-09-10 | grep -i 10324

Sep 10 05:51:13 px-sg1-n8 pvedaemon[3091814]: <root@pam> starting task UPID:px-sg1-n8:0014C554:0AA0D010:64FCE8D1:vzdump:10324:root@pam:

Sep 10 07:34:46 px-sg1-n8 pvedaemon[1361236]: INFO: starting new backup job: vzdump 10324 --notes-template '{{guestname}}{{guestname}}, {{node}}, {{vmid}}' --storage maintanance-bu3 --mode snapshot --remove 0 --node px-sg1-n8

Sep 10 07:34:46 px-sg1-n8 pvedaemon[1361236]: INFO: Starting Backup of VM 10324 (qemu)

Sep 10 08:34:46 px-sg1-n8 pvedaemon[1361236]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-fsfreeze-freeze' failed - got timeout

Sep 10 08:37:46 px-sg1-n8 pvedaemon[1361236]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-fsfreeze-thaw' failed - got timeout

Sep 10 08:37:46 px-sg1-n8 pvedaemon[1361236]: INFO: Finished Backup of VM 10324 (01:03:00)

Sep 10 09:14:22 px-sg1-n8 pvedaemon[1402809]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:36:13 px-sg1-n8 pvedaemon[1421105]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:36:24 px-sg1-n8 pvedaemon[3299877]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:37:34 px-sg1-n8 pvedaemon[1421105]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:37:48 px-sg1-n8 pvedaemon[3299877]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:40:57 px-sg1-n8 pvedaemon[1402809]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:41:17 px-sg1-n8 pvedaemon[3299877]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:41:38 px-sg1-n8 pvedaemon[3299877]: <root@pam> starting task UPID:px-sg1-n8:00236A75:0AD6DDE6:64FD7332:hastop:10324:root@pam:

Sep 10 15:41:39 px-sg1-n8 pvedaemon[3299877]: <root@pam> end task UPID:px-sg1-n8:00236A75:0AD6DDE6:64FD7332:hastop:10324:root@pam: OK

Sep 10 15:41:43 px-sg1-n8 pve-ha-lrm[2321107]: stopping service vm:10324 (timeout=0)

Sep 10 15:41:43 px-sg1-n8 pve-ha-lrm[2321111]: stop VM 10324: UPID:px-sg1-n8:00236AD7:0AD6E016:64FD7337:qmstop:10324:root@pam:

Sep 10 15:41:43 px-sg1-n8 pve-ha-lrm[2321107]: <root@pam> starting task UPID:px-sg1-n8:00236AD7:0AD6E016:64FD7337:qmstop:10324:root@pam:

Sep 10 15:41:44 px-sg1-n8 kernel: fwbr10324i0: port 2(tap10324i0) entered disabled state

Sep 10 15:41:44 px-sg1-n8 kernel: fwbr10324i0: port 1(fwln10324i0) entered disabled state

Sep 10 15:41:44 px-sg1-n8 kernel: vmbr0: port 17(fwpr10324p0) entered disabled state

Sep 10 15:41:44 px-sg1-n8 kernel: device fwln10324i0 left promiscuous mode

Sep 10 15:41:44 px-sg1-n8 kernel: fwbr10324i0: port 1(fwln10324i0) entered disabled state

Sep 10 15:41:44 px-sg1-n8 kernel: device fwpr10324p0 left promiscuous mode

Sep 10 15:41:44 px-sg1-n8 kernel: vmbr0: port 17(fwpr10324p0) entered disabled state

Sep 10 15:41:44 px-sg1-n8 systemd[1]: 10324.scope: Deactivated successfully.

Sep 10 15:41:44 px-sg1-n8 systemd[1]: 10324.scope: Consumed 8h 18min 49.321s CPU time.

Sep 10 15:41:44 px-sg1-n8 pve-ha-lrm[2321107]: <root@pam> end task UPID:px-sg1-n8:00236AD7:0AD6E016:64FD7337:qmstop:10324:root@pam: OK

Sep 10 15:41:44 px-sg1-n8 pve-ha-lrm[2321107]: service status vm:10324 stopped

Sep 10 15:41:45 px-sg1-n8 qmeventd[2321135]: Starting cleanup for 10324

Sep 10 15:41:45 px-sg1-n8 qmeventd[2321135]: Finished cleanup for 10324

Sep 10 15:41:48 px-sg1-n8 pvedaemon[1421105]: <root@pam> starting task UPID:px-sg1-n8:00236AF4:0AD6E1B2:64FD733C:hastart:10324:root@pam:

Sep 10 15:41:48 px-sg1-n8 pvedaemon[1421105]: <root@pam> end task UPID:px-sg1-n8:00236AF4:0AD6E1B2:64FD733C:hastart:10324:root@pam: OK

Sep 10 15:41:54 px-sg1-n8 pve-ha-lrm[2321393]: starting service vm:10324

Sep 10 15:41:54 px-sg1-n8 pve-ha-lrm[2321396]: start VM 10324: UPID:px-sg1-n8:00236BF4:0AD6E40B:64FD7342:qmstart:10324:root@pam:

Sep 10 15:41:54 px-sg1-n8 pve-ha-lrm[2321393]: <root@pam> starting task UPID:px-sg1-n8:00236BF4:0AD6E40B:64FD7342:qmstart:10324:root@pam:

Sep 10 15:41:54 px-sg1-n8 systemd[1]: Started 10324.scope.

Sep 10 15:41:55 px-sg1-n8 kernel: device tap10324i0 entered promiscuous mode

Sep 10 15:41:55 px-sg1-n8 kernel: vmbr0: port 6(fwpr10324p0) entered blocking state

Sep 10 15:41:55 px-sg1-n8 kernel: vmbr0: port 6(fwpr10324p0) entered disabled state

Sep 10 15:41:55 px-sg1-n8 kernel: device fwpr10324p0 entered promiscuous mode

Sep 10 15:41:55 px-sg1-n8 kernel: vmbr0: port 6(fwpr10324p0) entered blocking state

Sep 10 15:41:55 px-sg1-n8 kernel: vmbr0: port 6(fwpr10324p0) entered forwarding state

Sep 10 15:41:55 px-sg1-n8 kernel: fwbr10324i0: port 1(fwln10324i0) entered blocking state

Sep 10 15:41:55 px-sg1-n8 kernel: fwbr10324i0: port 1(fwln10324i0) entered disabled state

Sep 10 15:41:55 px-sg1-n8 kernel: device fwln10324i0 entered promiscuous mode

Sep 10 15:41:55 px-sg1-n8 kernel: fwbr10324i0: port 1(fwln10324i0) entered blocking state

Sep 10 15:41:55 px-sg1-n8 kernel: fwbr10324i0: port 1(fwln10324i0) entered forwarding state

Sep 10 15:41:55 px-sg1-n8 kernel: fwbr10324i0: port 2(tap10324i0) entered blocking state

Sep 10 15:41:55 px-sg1-n8 kernel: fwbr10324i0: port 2(tap10324i0) entered disabled state

Sep 10 15:41:55 px-sg1-n8 kernel: fwbr10324i0: port 2(tap10324i0) entered blocking state

Sep 10 15:41:55 px-sg1-n8 kernel: fwbr10324i0: port 2(tap10324i0) entered forwarding state

Sep 10 15:41:55 px-sg1-n8 pve-ha-lrm[2321393]: <root@pam> end task UPID:px-sg1-n8:00236BF4:0AD6E40B:64FD7342:qmstart:10324:root@pam: OK

Sep 10 15:41:55 px-sg1-n8 pve-ha-lrm[2321393]: service status vm:10324 started

root@px-sg1-n8:~#

Here is the journal output for the VM that had the issue during the backup.

Code:

journalctl --since 2023-09-10 | grep -i 10324

Because of the grep, this is not a full picture of what might've happened.
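One way to get the fuller picture is to bound the journal by time instead of filtering by VM ID. The following is only a sketch: the helper name `dump_journal`, the time window, and the output file name are assumptions chosen for illustration (the window is picked from the timestamps posted above), and it would be run on the PVE node itself.

```shell
# Hypothetical helper: dump the unfiltered system journal for a time window.
# $1 = start time, $2 = end time, $3 = output file
dump_journal() {
    # --since/--until limit by time only; without grep, messages from the
    # kernel, storage and other services in the same window are kept.
    journalctl --since "$1" --until "$2" > "$3"
}

# Example call (times are an assumption based on the log lines in this thread):
# dump_journal "2023-09-10 05:00" "2023-09-10 16:00" journal-2023-09-10.txt
```

The resulting file can then be attached to the thread as a whole, rather than only the lines that mention 10324.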

Code:

Sep 10 07:34:46 px-sg1-n8 pvedaemon[1361236]: INFO: Starting Backup of VM 10324 (qemu)

Sep 10 08:34:46 px-sg1-n8 pvedaemon[1361236]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-fsfreeze-freeze' failed - got timeout

Sep 10 08:37:46 px-sg1-n8 pvedaemon[1361236]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-fsfreeze-thaw' failed - got timeout

Sep 10 08:37:46 px-sg1-n8 pvedaemon[1361236]: INFO: Finished Backup of VM 10324 (01:03:00)

Sep 10 09:14:22 px-sg1-n8 pvedaemon[1402809]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:36:13 px-sg1-n8 pvedaemon[1421105]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:36:24 px-sg1-n8 pvedaemon[3299877]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:37:34 px-sg1-n8 pvedaemon[1421105]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:37:48 px-sg1-n8 pvedaemon[3299877]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:40:57 px-sg1-n8 pvedaemon[1402809]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sep 10 15:41:17 px-sg1-n8 pvedaemon[3299877]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout

Sounds like there were issues with the guest agent/freeze/thaw. But the first message about a failed guest-ping comes more than half an hour later, and all the others only at 15:36-15:41. Are you sure the guest was down during or immediately after the backup? I'd suggest checking inside the guest what is going on (e.g. system logs and logs from the guest agent).
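To make the gap between the freeze/thaw timeouts and the guest-ping timeouts easier to see, the time and QMP command name can be pulled out of saved journal lines. This is a minimal sketch, not a definitive tool: the sample lines are copied from the output posted in this thread, while the temp file and the `summary` variable are only illustrative.

```shell
# Save a few of the journal lines posted above to a temporary file.
log=$(mktemp)
cat > "$log" <<'EOF'
Sep 10 07:34:46 px-sg1-n8 pvedaemon[1361236]: INFO: Starting Backup of VM 10324 (qemu)
Sep 10 08:34:46 px-sg1-n8 pvedaemon[1361236]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-fsfreeze-freeze' failed - got timeout
Sep 10 08:37:46 px-sg1-n8 pvedaemon[1361236]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-fsfreeze-thaw' failed - got timeout
Sep 10 09:14:22 px-sg1-n8 pvedaemon[1402809]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout
Sep 10 15:36:13 px-sg1-n8 pvedaemon[1421105]: VM 10324 qmp command failed - VM 10324 qmp command 'guest-ping' failed - got timeout
EOF

# Split each line on single quotes: the quoted QMP command becomes field 2.
# The third whitespace-separated word of field 1 is the timestamp.
summary=$(awk -F"'" '/qmp command failed/ { split($1, f, " "); print f[3], $2 }' "$log")
echo "$summary"
# 08:34:46 guest-fsfreeze-freeze
# 08:37:46 guest-fsfreeze-thaw
# 09:14:22 guest-ping
# 15:36:13 guest-ping

rm -f "$log"
```

Read this way, the freeze and thaw timeouts fall inside the backup window, while the guest-ping timeouts only start later, which is the timing question raised above.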

I had this issue when the backup storage was too slow, stalled, or timed out.

You should look into this.
