Hello everyone,

I am having trouble to boot-up a virtual machine on a PVE-Server whose disk image was exported from a PVE.

So there are two PVE-Servers (let's call them PVE "A" and "B") which are essentially the same (Version/Details below).

The VM in question (Ubuntu 18.04) is configured and bootable on PVE "A".

Disk Image (pvesm):

Exporting Disk Image :

Transferring Image and importing on PVE "B":

Resulting Disk Image (pvesm):

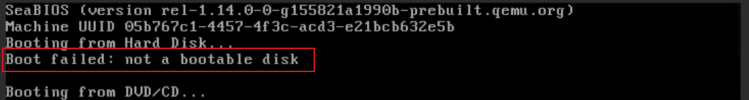

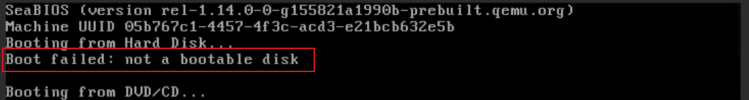

Boot-up fails with "not a bootable disk":

When I re-import that very same disk-image again to a freshly created VM on PVE "A" (origin) everything works as expected - bootable, running fine. I did that in an attempt to see if I made a mistake in the exporting-importing procedure. I also checked if there was a transferring error via sftp, the checksum of the raw-disk images however are identical.

The configuration of the virtual machines are essentially the same as well - as far as I can tell.

conf-file on PVE "A" :

conf-file on PVE "B":

I also compared PVE-versions between the two hypervisors:

"A" - pveversion --verbose

PVE "B" (configured as HA-Cluster with 3 nodes):

I had migrated VMs like that before successfully. I am having the same trouble with another VM (Windows Server 2019).

So my question is:

What is missing/ wrong with the VM?

Is there another/ better way to migrate the VM?

edit: I also tried to boot up with OVMF (UEFI) as BIOS on PVE "B" which fails as well.

I am having trouble booting a virtual machine on a PVE server; its disk image was exported from another PVE server.

There are two PVE servers (let's call them PVE "A" and "B"), which are essentially the same (versions and details below).

The VM in question (Ubuntu 18.04) is configured and bootable on PVE "A".

Disk Image (pvesm):

local:99503/vm-disk-99503-disk-0.raw raw images 64424509440 99503

Exporting Disk Image:

Bash:

pvesm export local:99503/vm-99503-disk-0.raw raw+size server-core.raw

Transferring Image and importing on PVE "B":

Bash:

pvesm import vm-hdd:vm-132103-disk-0.raw raw+size server-core.raw

Resulting Disk Image (pvesm):

vm-hdd:vm-132103-disk-0.raw raw images 64424509440 132103Boot-up fails with "not a bootable disk":

When I re-import that very same disk image into a freshly created VM on PVE "A" (the origin), everything works as expected: the VM boots and runs fine. I did that to see whether I had made a mistake in the export/import procedure. I also checked for a transfer error via sftp, but the checksums of the raw disk images are identical.
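A checksum comparison of this kind can be sketched as follows. This is only an illustration: the helper name `same_checksum` and the use of `sha256sum` are assumptions, not necessarily the commands that were actually run.

```shell
#!/bin/sh
# Hypothetical helper (not part of PVE): succeeds only if both files
# are readable and have the same SHA-256 digest.
same_checksum() {
    # Refuse to compare unless both files can be read.
    [ -r "$1" ] && [ -r "$2" ] || return 2
    # sha256sum prints "<digest>  <file>"; keep only the digest.
    a=$(sha256sum "$1" | cut -d' ' -f1)
    b=$(sha256sum "$2" | cut -d' ' -f1)
    # Guard against an empty digest before comparing.
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

Since the two copies live on different hosts, in practice one would run `sha256sum server-core.raw` on each side and compare the printed digests by eye; the helper above only covers the case where both copies are reachable from one machine.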

The configurations of the two virtual machines are essentially the same as well, as far as I can tell.
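To go beyond comparing by eye, the two config files can be diffed after sorting, so that key order does not matter. The following is a minimal sketch; the helper name `conf_diff` is made up for illustration, and it assumes both files have been copied to the same machine.

```shell
#!/bin/sh
# Hypothetical helper: print the lines that differ between two VM config
# files, ignoring blank lines and the order of the keys.
conf_diff() {
    tmp_a=$(mktemp) || return 2
    tmp_b=$(mktemp) || { rm -f "$tmp_a"; return 2; }
    # Drop blank lines and sort so the comparison is order-independent.
    grep -v '^[[:space:]]*$' "$1" | sort > "$tmp_a"
    grep -v '^[[:space:]]*$' "$2" | sort > "$tmp_b"
    # diff exits 0 if identical, 1 if the files differ.
    diff "$tmp_a" "$tmp_b"
    status=$?
    rm -f "$tmp_a" "$tmp_b"
    return "$status"
}
```

On PVE the VM configs are stored under `/etc/pve/qemu-server/`, so after copying one file over, a call might look like `conf_diff 99503.conf 132103.conf`. Differences in the disk volume, MAC address, and UUIDs are expected; anything else is worth a closer look.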

conf-file on PVE "A" :

Code:

boot: order=sata0;ide2;net0

cores: 4

ide2: none,media=cdrom

memory: 4096

meta: creation-qemu=6.1.0,ctime=1644756394

name: server-core

net0: virtio=0A:63:C6:72:1B:45,bridge=vmbr0,firewall=1,link_down=1

numa: 0

ostype: l26

sata0: local:99503/vm-disk-99503-disk-0.raw,size=60G

scsihw: virtio-scsi-pci

smbios1: uuid=ad518a82-cdb8-4eb7-9868-8ed97ddf04d1

sockets: 1

vmgenid: c6d4883b-6853-4d6d-8fd8-df2338fa8518

conf-file on PVE "B":

Code:

agent: 0

balloon: 0

boot: order=sata0;ide2;net0

cores: 8

ide2: none,media=cdrom

memory: 32768

meta: creation-qemu=6.1.0,ctime=1644608369

name: server-core

net0: virtio=22:60:E0:99:E9:43,bridge=vmbr0,firewall=1

numa: 0

onboot: 1

ostype: l26

sata0: vm-hdd:vm-132103-disk-0.raw,size=60G

scsihw: virtio-scsi-pci

smbios1: uuid=05b767c1-4457-4f3c-acd3-e21bcb632e5b

sockets: 1

vmgenid: 2933af48-6f13-4546-a006-173c5d15660c

I also compared PVE versions between the two hypervisors:

PVE "A" (pveversion --verbose):

Code:

proxmox-ve: 7.1-1 (running kernel: 5.13.19-3-pve)

pve-manager: 7.1-10 (running version: 7.1-10/6ddebafe)

pve-kernel-helper: 7.1-8

pve-kernel-5.13: 7.1-6

pve-kernel-5.13.19-3-pve: 5.13.19-7

...

libpve-storage-perl: 7.0-15

...

PVE "B" (configured as HA-Cluster with 3 nodes):

Code:

proxmox-ve: 7.1-1 (running kernel: 5.13.19-3-pve)

pve-manager: 7.1-10 (running version: 7.1-10/6ddebafe)

pve-kernel-helper: 7.1-8

pve-kernel-5.13: 7.1-6

pve-kernel-5.13.19-3-pve: 5.13.19-7

pve-kernel-5.13.19-2-pve: 5.13.19-4

...

libpve-storage-perl: 7.0-15

...

I have successfully migrated VMs this way before. I am having the same trouble with another VM (Windows Server 2019).

So my questions are:

What is missing or wrong with the VM?

Is there another or better way to migrate the VM?

Edit: I also tried booting with OVMF (UEFI) as BIOS on PVE "B", which fails as well.