Hi all,

My setup:

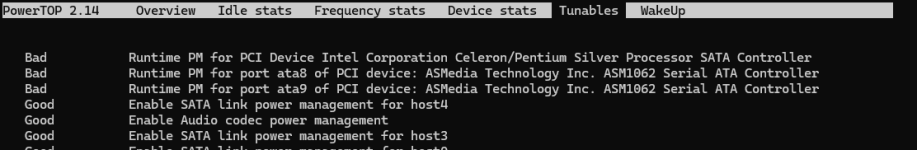

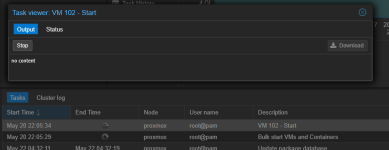

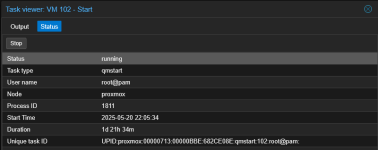

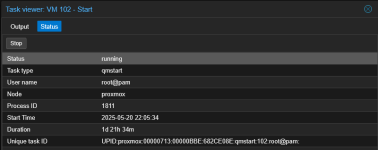

I have recently been having the following issue with my TrueNAS VM (102), which uses PCIe passthrough of the SATA controllers. When Proxmox boots, it tries to start VM 102 automatically because "Start at boot" is enabled. However, the start task never finishes, not even after several days, and selecting the task and clicking "Stop" has no effect.

Additionally, rebooting the host no longer works, neither via the GUI nor with "systemctl --force --force reboot" on the CLI. When I force a reboot by unplugging the cable, I run into the same problem again on the next boot.

A month ago I had the same issue. I then installed all updates and power cycled the host, which appeared to fix it, so I assumed the update had resolved the problem. Now the same problem is back even though the system is already fully up to date, and I have no clue what is causing it.

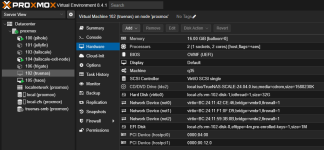

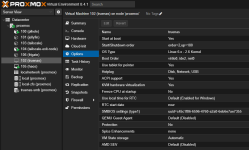

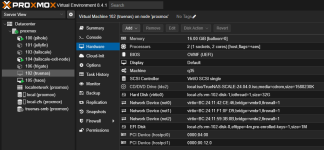

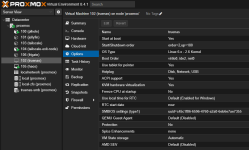

VM configuration:

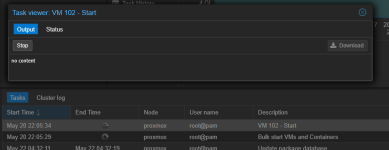

Tasks:

My setup:

- Proxmox VE 8.4.1

- Intel Celeron J4105

- 32 GB RAM

- Several VMs and containers

- Boot mode: EFI
