Hi,

Thank you for reading my post.

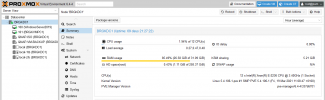

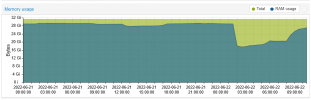

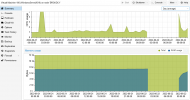

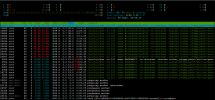

I have 2 VMs. One of them (100WinDerv2019) stops at night (not every night). When I start it again, it works fine. This started 4 days ago; before that it was fine.

Can you please help me locate the problem? Starting the VM manually every morning is a pain in the ass.

Here is my syslog from the last 48 hours to analyze:

https://pastebin.com/JBTXFr1p