Hi all, I'm new to Proxmox and seem to have come upon an issue that I can't find any answers to. I have an old Dell PowerEdge T130 server with a single 4-core Xeon processor, 32 GB RAM, and a variety of 4 SATA HDDs that I am experimenting with. The processor does have virtualization enabled, and I used to run Windows 10 with Hyper-V and a couple of VMs with no problem. The Proxmox installation went fine without issues or warnings, and I have been able to initialize and create LVM, LVM-Thin, Directory, and ZFS storage on the drives without issues or warnings.

The problem is with VMs. It appears all my VMs (Ubuntu Server and Windows Server) installed correctly. I used all defaults while creating them and they too seem to have installed without issues or warnings, but any one I try to start throws the error "ERROR START FAILED QEMU EXITED WITH CODE 1" followed by "CONNECTION TIMED OUT", and the VM immediately shuts down.

I also have a Lenovo W530 laptop with a 2.4 GHz 4-core Intel processor, 32 GB RAM, and 2 SATA HDDs in it. I installed Proxmox on that, set up VMs, and everything runs perfectly. So I can't seem to find the issue with the PowerEdge T130. Any help would be highly appreciated, as I really like the way it is running on my laptop but sure wish I could get it running on something with more resources.

VM Startup Error "Error start failed QEMU exited with code1"

- Thread starter ndgns1


Thanks for the reply, I was about to give up. Anyway, I just did another fresh install and set up Ubuntu Server as a test VM. When I went to start it, this was the response.

Also, FYI: I installed TrueNAS on the same box earlier as a test and was able to run VMs under TrueNAS without issue. Not sure if that matters.

Nov 20 12:12:19 pve pvedaemon[909]: <root@pam> starting task UPID:pve:00000BD1:00012BA7:655BBDA3:qmstart:100:root@pam:

Nov 20 12:12:19 pve pvedaemon[3025]: start VM 100: UPID:pve:00000BD1:00012BA7:655BBDA3:qmstart:100:root@pam:

Nov 20 12:12:20 pve systemd[1]: Started 100.scope.

Nov 20 12:12:21 pve kernel: device tap100i0 entered promiscuous mode

Nov 20 12:12:21 pve kernel: vmbr0: port 2(fwpr100p0) entered blocking state

Nov 20 12:12:21 pve kernel: vmbr0: port 2(fwpr100p0) entered disabled state

Nov 20 12:12:21 pve kernel: device fwpr100p0 entered promiscuous mode

Nov 20 12:12:21 pve kernel: vmbr0: port 2(fwpr100p0) entered blocking state

Nov 20 12:12:21 pve kernel: vmbr0: port 2(fwpr100p0) entered forwarding state

Nov 20 12:12:21 pve kernel: fwbr100i0: port 1(fwln100i0) entered blocking state

Nov 20 12:12:21 pve kernel: fwbr100i0: port 1(fwln100i0) entered disabled state

Nov 20 12:12:21 pve kernel: device fwln100i0 entered promiscuous mode

Nov 20 12:12:21 pve kernel: fwbr100i0: port 1(fwln100i0) entered blocking state

Nov 20 12:12:21 pve kernel: fwbr100i0: port 1(fwln100i0) entered forwarding state

Nov 20 12:12:21 pve kernel: fwbr100i0: port 2(tap100i0) entered blocking state

Nov 20 12:12:21 pve kernel: fwbr100i0: port 2(tap100i0) entered disabled state

Nov 20 12:12:21 pve kernel: fwbr100i0: port 2(tap100i0) entered blocking state

Nov 20 12:12:21 pve kernel: fwbr100i0: port 2(tap100i0) entered forwarding state

Nov 20 12:12:21 pve kernel: fwbr100i0: port 2(tap100i0) entered disabled state

Nov 20 12:12:21 pve kernel: fwbr100i0: port 1(fwln100i0) entered disabled state

Nov 20 12:12:21 pve kernel: vmbr0: port 2(fwpr100p0) entered disabled state

Nov 20 12:12:21 pve kernel: device fwln100i0 left promiscuous mode

Nov 20 12:12:21 pve kernel: fwbr100i0: port 1(fwln100i0) entered disabled state

Nov 20 12:12:21 pve kernel: device fwpr100p0 left promiscuous mode

Nov 20 12:12:21 pve kernel: vmbr0: port 2(fwpr100p0) entered disabled state

Nov 20 12:12:21 pve systemd[1]: 100.scope: Deactivated successfully.

Nov 20 12:12:21 pve systemd[1]: 100.scope: Consumed 1.384s CPU time.

Nov 20 12:12:21 pve pvedaemon[3025]: start failed: QEMU exited with code 1

Nov 20 12:12:22 pve pvedaemon[909]: <root@pam> end task UPID:pve:00000BD1:00012BA7:655BBDA3:qmstart:100:root@pam: start failed: QEMU exited with code 1


Could you please also post the output of the following commands:

Code:

pveversion -v

proxmox-ve: 8.0.1 (running kernel: 6.2.16-3-pve)

pve-manager: 8.0.3 (running version: 8.0.3/bbf3993334bfa916)

pve-kernel-6.2: 8.0.2

pve-kernel-6.2.16-3-pve: 6.2.16-3

ceph-fuse: 17.2.6-pve1+3

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx2

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-3

libknet1: 1.25-pve1

libproxmox-acme-perl: 1.4.6

libproxmox-backup-qemu0: 1.4.0

libproxmox-rs-perl: 0.3.0

libpve-access-control: 8.0.3

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.0.5

libpve-guest-common-perl: 5.0.3

libpve-http-server-perl: 5.0.3

libpve-rs-perl: 0.8.3

libpve-storage-perl: 8.0.1

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve3

novnc-pve: 1.4.0-2

proxmox-backup-client: 2.99.0-1

proxmox-backup-file-restore: 2.99.0-1

proxmox-kernel-helper: 8.0.2

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.4.0

proxmox-widget-toolkit: 4.0.5

pve-cluster: 8.0.1

pve-container: 5.0.3

pve-docs: 8.0.3

pve-edk2-firmware: 3.20230228-4

pve-firewall: 5.0.2

pve-firmware: 3.7-1

pve-ha-manager: 4.0.2

pve-i18n: 3.0.4

pve-qemu-kvm: 8.0.2-3

pve-xtermjs: 4.16.0-3

qemu-server: 8.0.6

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.1.12-pve1

Code:

qm config <vmid>

agent: 1

boot: order=scsi0;ide2;net0

cores: 1

cpu: x86-64-v2-AES

ide2: local:iso/ubuntu-22.04.3-live-server-amd64.iso,media=cdrom,size=2083390K

memory: 6048

meta: creation-qemu=8.0.2,ctime=1700510824

name: WebServer

net0: virtio=02:C6:8F:6D:F0:FB,bridge=vmbr0,firewall=1

numa: 0

ostype: l26

scsi0: local-lvm:vm-100-disk-0,iothread=1,size=50G

scsihw: virtio-scsi-single

smbios1: uuid=77e6fe36-e164-4888-bf3a-932c42b7e8b9

sockets: 1

vmgenid: aead597c-00cb-4fe7-aefd-502ec8b98e9c

Code:

kvm --version

QEMU emulator version 8.0.2 (pve-qemu-kvm_8.0.2-3)

Copyright (c) 2003-2022 Fabrice Bellard and the QEMU Project developers

Hi,

is there any output in the VM 100 - Start task log (double click on the task in the bottom panel or the VM's Task History in the UI)?

I just had exactly the same issue.

The VM was created using the API and is started after setting the config (hardware + cloud-init + firewall).

I tried starting the VM manually and that worked without any issues.

But I'd like to figure out why this happened in the first place to prevent this from happening again in the future.

Is Proxmox acquiring a lock on the VM when setting the config (hardware, cloud-init or firewall)?

Maybe it was just not ready yet.

Code:

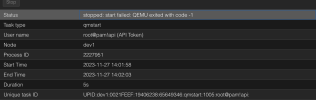

Nov 27 14:01:58 dev1 pvedaemon[2189502]: <root@pam!api> starting task UPID:dev1:0021FEEF:19406238:65649346:qmstart:1005:root@pam!api:

Nov 27 14:01:58 dev1 pvedaemon[2227951]: start VM 1005: UPID:dev1:0021FEEF:19406238:65649346:qmstart:1005:root@pam!api:

Nov 27 14:02:03 dev1 systemd[1]: Started 1005.scope.

Nov 27 14:02:03 dev1 kernel: [4236460.012734] GPT:Primary header thinks Alt. header is not at the end of the disk.

Nov 27 14:02:03 dev1 kernel: [4236460.012738] GPT:4194303 != 41943039

Nov 27 14:02:03 dev1 kernel: [4236460.012739] GPT:Alternate GPT header not at the end of the disk.

Nov 27 14:02:03 dev1 kernel: [4236460.012740] GPT:4194303 != 41943039

Nov 27 14:02:03 dev1 kernel: [4236460.012741] GPT: Use GNU Parted to correct GPT errors.

Nov 27 14:02:03 dev1 kernel: [4236460.012745] zd320: p1 p14 p15

Nov 27 14:02:03 dev1 systemd-udevd[2228615]: Using default interface naming scheme 'v247'.

Nov 27 14:02:03 dev1 systemd-udevd[2228615]: ethtool: autonegotiation is unset or enabled, the speed and duplex are not writable.

Nov 27 14:02:03 dev1 pvedaemon[2227951]: start failed: QEMU exited with code -1

Nov 27 14:02:03 dev1 pvedaemon[2189502]: <root@pam!api> end task UPID:dev1:0021FEEF:19406238:65649346:qmstart:1005:root@pam!api: start failed: QEMU exited with code -1
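As a side note on reading these logs: the task ID (UPID) in the lines above packs several fields into one colon-separated string. The sketch below assumes the layout `UPID:node:pid:pstart:starttime:type:id:user:` with the three numeric fields in hexadecimal; `Upid` and `parse_upid` are illustrative names, not part of any Proxmox tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Upid:
    node: str
    pid: int
    pstart: int
    starttime: datetime
    task_type: str
    task_id: str
    user: str


def parse_upid(upid: str) -> Upid:
    """Split a task ID of the assumed form UPID:node:pid:pstart:starttime:type:id:user:"""
    # Drop the trailing colon, then split into the eight expected fields.
    parts = upid.rstrip(":").split(":")
    if len(parts) != 8 or parts[0] != "UPID":
        raise ValueError(f"not a recognised UPID: {upid!r}")
    _, node, pid, pstart, starttime, task_type, task_id, user = parts
    return Upid(
        node=node,
        pid=int(pid, 16),        # hex PID of the worker process
        pstart=int(pstart, 16),  # hex process start marker
        starttime=datetime.fromtimestamp(int(starttime, 16), tz=timezone.utc),
        task_type=task_type,
        task_id=task_id,
        user=user,
    )


task = parse_upid("UPID:dev1:0021FEEF:19406238:65649346:qmstart:1005:root@pam!api:")
print(task.node, task.pid, task.task_type, task.task_id, task.starttime.isoformat())
```

For the UPID above this gives node `dev1`, PID 2227951 (the same number as in `pvedaemon[2227951]`) and a start time of 13:01:58 UTC on Nov 27, 2023, which is consistent with the 14:01:58 local timestamps if the host runs at UTC+1.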

Code:

root@dev1:~# pveversion -v

proxmox-ve: 7.4-1 (running kernel: 5.15.116-1-pve)

pve-manager: 7.4-17 (running version: 7.4-17/513c62be)

pve-kernel-5.15: 7.4-6

pve-kernel-5.15.116-1-pve: 5.15.116-1

pve-kernel-5.15.107-1-pve: 5.15.107-1

pve-kernel-5.15.102-1-pve: 5.15.102-1

ceph-fuse: 15.2.17-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx4

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.4

libproxmox-backup-qemu0: 1.3.1-1

libproxmox-rs-perl: 0.2.1

libpve-access-control: 7.4.1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.4-2

libpve-guest-common-perl: 4.2-4

libpve-http-server-perl: 4.2-3

libpve-rs-perl: 0.7.7

libpve-storage-perl: 7.4-3

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-2

lxcfs: 5.0.3-pve1

novnc-pve: 1.4.0-1

proxmox-backup-client: 2.4.3-1

proxmox-backup-file-restore: 2.4.3-1

proxmox-kernel-helper: 7.4-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.7.3

pve-cluster: 7.3-3

pve-container: 4.4-6

pve-docs: 7.4-2

pve-edk2-firmware: 3.20230228-4~bpo11+1

pve-firewall: 4.3-5

pve-firmware: 3.6-5

pve-ha-manager: 3.6.1

pve-i18n: 2.12-1

pve-qemu-kvm: 7.2.0-8

pve-xtermjs: 4.16.0-2

qemu-server: 7.4-4

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+3

vncterm: 1.7-1

zfsutils-linux: 2.1.11-pve1


Hmm, might be that something was not fully ready yet after creation, but usually shouldn't happen.

Unfortunately, there is no clear error here (would be a bit surprised if the GPT warnings would be it).

Is there anything in the log output of the task?

Yes, both modifying and starting the VM will take a lock on the configuration file and QEMU will only be invoked after getting the lock. Should it happen again, please provide details about the API calls you are using including parameters.

What kind of storage are you using? Are the GPT errors still there for the manual start?

Nope, nothing in the task log.

Thank you! So it should be enough to check if the VM still has a lock and wait until it is released to prevent such issues in the future?

I'm using ZFS. I did not see the GPT issue again so far.


There is nothing to add, it's already there: https://git.proxmox.com/?p=qemu-ser...440f524176575d26e509be70ac1c70c3a94a971#l5624

Before starting the VM, a lock for the configuration file is taken, so it cannot be modified concurrently.

Thank you Fiona, but I might have misunderstood your message. I thought QEMU exited when trying to start the VM because it was unable to get the lock, as maybe one of the config requests still had the lock acquired.

In this case, I would implement a method to wait until there is no more lock on the VM before trying to start it.

Or did I get something wrong?

The lock is managed by our API backend, not by QEMU. Our API functions make sure to take the lock during modification as well as during startup so they will not cause issues for each other when two API calls are issued soon after each other. QEMU is only started after the configuration lock has been successfully acquired and that means no modification to the configuration can run at the same time. It'll have to wait until startup is over and the lock can be taken again. If the lock can't be acquired in IIRC 10 seconds, the API call will fail.
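The behaviour described here can be pictured with a small toy model. This is not Proxmox's code: `VmConfig`, `set_options`, `start` and `LockTimeout` are made-up names, an in-process `threading.Lock` stands in for the real lock on the configuration file, and the 10 second default only mirrors the "IIRC 10 seconds" mentioned above.

```python
import threading
from contextlib import contextmanager


class LockTimeout(Exception):
    """Raised when the config lock cannot be taken in time."""


class VmConfig:
    """Toy stand-in for a VM configuration guarded by a single lock."""

    def __init__(self):
        self._lock = threading.Lock()
        self.options = {}

    @contextmanager
    def locked(self, timeout: float):
        # Wait up to `timeout` seconds for the lock, then give up.
        if not self._lock.acquire(timeout=timeout):
            raise LockTimeout(f"could not take config lock within {timeout}s")
        try:
            yield self.options
        finally:
            self._lock.release()


def set_options(vm: VmConfig, timeout: float = 10.0, **changes) -> None:
    # A config change holds the lock for the duration of the update.
    with vm.locked(timeout) as options:
        options.update(changes)


def start(vm: VmConfig, timeout: float = 10.0) -> dict:
    # Startup takes the same lock, so it never sees a half-written config.
    with vm.locked(timeout) as options:
        # Stand-in for launching QEMU: return the config it would be started with.
        return dict(options)


vm = VmConfig()
set_options(vm, cores=2, memory=2048)
print(start(vm))
```

In this model a start issued while a change is in flight simply waits for the lock, and only fails if the wait exceeds the timeout, which matches the point that the lock itself should not make QEMU exit.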

I highly appreciate your input, Fiona!

That means waiting until no more lock is held on the VM should prevent any issues with concurrency in the future, did I get that right?

And hopefully, also the qemu start issue I mentioned earlier.

So I don't have to perform an additional check if there are any active tasks for the given VM, right?

Thank you so much again!

It should prevent any issues with concurrency right now, because it's already implemented.

In many cases you don't, but it's highly recommended to do so. Otherwise, you might run into the timeout for acquiring the lock and you can't predict the order if you issue multiple API calls that all need to acquire the lock. There are also certain tasks that do not hold the lock for the full duration, e.g. taking a snapshot, backup, but during which many other changes are not allowed. (They do set an explicit lock option inside the config, so other tasks can see that and fail). You most likely also want to wait for those rather than have your subsequent API calls fail.
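A minimal sketch of waiting for that explicit lock option to disappear before issuing the next call. It assumes the VM config is available as a dictionary (for example from `GET /nodes/{node}/qemu/{vmid}/config`) and that a held lock shows up under the key `lock`; `fetch_config`, `config_lock` and `wait_until_unlocked` are hypothetical names, and `fetch_config` is whatever function your API client provides.

```python
import time
from typing import Callable, Mapping, Optional


def config_lock(config: Mapping[str, object]) -> Optional[str]:
    """Return the value of the `lock` option in a VM config, or None if unset."""
    lock = config.get("lock")
    return str(lock) if lock else None


def wait_until_unlocked(
    fetch_config: Callable[[], Mapping[str, object]],
    timeout: float = 60.0,
    interval: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll the config until no `lock` option is set; False if the timeout passes."""
    waited = 0.0
    while True:
        if config_lock(fetch_config()) is None:
            return True
        if waited >= timeout:
            return False
        sleep(interval)
        waited += interval


# Simulated sequence: a snapshot lock that clears on the third poll.
responses = iter([{"lock": "snapshot"}, {"lock": "snapshot"}, {"cores": 2}])
print(wait_until_unlocked(lambda: next(responses), sleep=lambda s: None))
```

This only covers the lock option written into the config. It says nothing about tasks that are still running without setting it, so checking the task status as well is still the safer route.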

I wonder what caused this issue then.

Given I was able to manually start the VM a few seconds later it actually indicates that something wasn't ready yet

Yes, but the VM configuration change should've been ready (except if you issued multiple API calls at the same time and the start call got the lock first before the configuration change could be done).

Should it happen again, please provide details about the API calls you are using including parameters.

Not at the same time but sequentially after each other.

At least per VM. There might be multiple API requests at the same time, but only when configuring multiple VMs, so the lock situation shouldn't be an issue then, I guess.

This is the list of API requests that were sent before eventually trying to start the VM:

- Create VM:
  - Endpoint: POST /nodes/{node}/qemu/{templateId}/clone
  - Parameters: newid, full, name
- Set VM Configuration:
  - Endpoint: POST /nodes/{node}/qemu/{vmId}/config
  - Parameters: Dynamic based on VM configuration (e.g., cores, memory, net0, scsi0)
- Set Key for Cloud Init:
  - Endpoint: PUT /nodes/{node}/qemu/{vmId}/config
  - Parameters: ciuser, sshkeys
- Configure Networking:
  - Endpoint: POST /nodes/{node}/qemu/{vmId}/config
  - Parameters: ipconfig0, nameserver
- Configure IP Set:
  - Create IP Set Endpoint: POST /nodes/{node}/qemu/{vmId}/firewall/ipset/
  - Create IP Set Parameters: name, node, vmid
  - Attach IP Set Endpoint: POST /nodes/{node}/qemu/{vmId}/firewall/ipset/ipfilter-net0
  - Attach IP Set Parameters: name, node, vmid, cidr
- Apply Firewall Defaults:
  - Create Rule Endpoint: POST /nodes/{node}/qemu/{vmId}/firewall/rules/
  - Create Rule Parameters: action, type, sport, proto, dport, source, dest, pos, enable, node, vmid
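One way to make sure a sequence like the one above really runs step by step is to wait for each background task to finish before sending the next request. The sketch below assumes that asynchronous calls (such as the clone and the POST to `/config`) return a UPID string, and that `GET /nodes/{node}/tasks/{upid}/status` reports `status` (`running` or `stopped`) and `exitstatus`. The `call` and `task_status` callables are hypothetical wrappers around whatever HTTP client is in use, and treating anything other than `OK` as a failure is a simplification.

```python
import time
from typing import Callable, Iterable, Mapping, Optional, Tuple

Step = Tuple[str, str, Mapping[str, object]]


def wait_for_task(
    task_status: Callable[[str], Mapping[str, str]],
    upid: str,
    timeout: float = 300.0,
    interval: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Poll a task until it has stopped; raise if it failed or took too long."""
    waited = 0.0
    while True:
        status = task_status(upid)
        if status.get("status") == "stopped":
            if status.get("exitstatus") != "OK":
                raise RuntimeError(f"task {upid} failed: {status.get('exitstatus')}")
            return
        if waited >= timeout:
            raise TimeoutError(f"task {upid} still running after {timeout}s")
        sleep(interval)
        waited += interval


def run_in_order(
    steps: Iterable[Step],
    call: Callable[[str, str, Mapping[str, object]], Optional[str]],
    task_status: Callable[[str], Mapping[str, str]],
    **wait_kwargs,
) -> None:
    """Send each request and, if it returned a UPID, wait for that task to end."""
    for method, path, params in steps:
        result = call(method, path, params)
        if isinstance(result, str) and result.startswith("UPID:"):
            wait_for_task(task_status, result, **wait_kwargs)


# Dry run with fake transport functions: every POST "starts" a task that
# is reported as running once and as finished on the second poll.
seen = {}

def fake_call(method, path, params):
    print(method, path)
    return f"UPID:fake:{path}" if method == "POST" else None

def fake_status(upid):
    seen[upid] = seen.get(upid, 0) + 1
    if seen[upid] < 2:
        return {"status": "running"}
    return {"status": "stopped", "exitstatus": "OK"}

run_in_order(
    [
        ("POST", "/nodes/n1/qemu/9000/clone", {"newid": 1005, "full": 1, "name": "vm"}),
        ("POST", "/nodes/n1/qemu/1005/config", {"cores": 2}),
        ("PUT", "/nodes/n1/qemu/1005/config", {"ciuser": "admin"}),
    ],
    fake_call,
    fake_status,
    sleep=lambda s: None,
)
```

The node name, VM IDs and parameter values in the dry run are placeholders. The start request would simply be the last step in the list, so it is only sent once every earlier task has reported `stopped` with `OK`.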