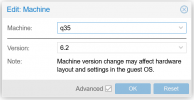

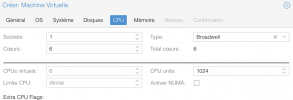

We have a Huawei RH2288 v3 rack server with 2x Xeon E5-2680 v4 (Broadwell-EP) running PVE 7.1.

The BIOS microcode version is 0xb000038.

An Ubuntu Server VM has this problem, but our Windows Server 2019 and 2022 VMs do not run into it.

In detail: the VM shuts down because of that assertion failure after about 11-14 hours of uptime (or at midnight; we are not sure which condition is the critical one).

We have tried the following with no luck:

- Different pve-kernel releases: 5.13.19-2, 5.15.35 (latest), and 5.13.19-6 (mentioned above; it crashed after 28 hours, at midnight).

- Updating the microcode to 0xb000040 (Intel marks it as "with caveats" because of BDX90; see https://www.intel.com/content/dam/w...cification-updates/xeon-e7-v4-spec-update.pdf)

- Booting with the `mitigations=off` kernel argument

- Disabling nested KVM via the kvm module options
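
For anyone comparing setups, here is a rough sketch for confirming on the host that the settings above actually took effect. It assumes a Linux host with the standard `/proc/cpuinfo`, `/proc/cmdline` and `/sys/module/kvm_intel/parameters/nested` files; the helper names (`microcode_versions`, `has_kernel_arg`, `read_text`) are made up for this example, and it checks for `mitigations=off`, which is the spelling the kernel documents.

```python
import re
from pathlib import Path


def microcode_versions(cpuinfo_text):
    """Return the set of microcode revisions listed in /proc/cpuinfo text."""
    return set(
        re.findall(r"^microcode\s*:\s*(\S+)", cpuinfo_text, flags=re.MULTILINE)
    )


def has_kernel_arg(cmdline_text, arg):
    """True if `arg` appears as a whole token on the kernel command line."""
    return arg in cmdline_text.split()


def read_text(path):
    """Read a file and strip it; return None if it is missing or unreadable."""
    try:
        return Path(path).read_text().strip()
    except OSError:
        return None


if __name__ == "__main__":
    cpuinfo = read_text("/proc/cpuinfo")
    cmdline = read_text("/proc/cmdline")
    # The value format depends on the kernel version (for example Y/N or 1/0),
    # so it is printed as-is rather than interpreted.
    nested = read_text("/sys/module/kvm_intel/parameters/nested")

    if cpuinfo is not None:
        print("microcode revisions:", sorted(microcode_versions(cpuinfo)))
    if cmdline is not None:
        print("mitigations=off set:", has_kernel_arg(cmdline, "mitigations=off"))
    print("kvm_intel nested:", nested if nested is not None else "not available")
```

More than one microcode revision in the output would mean the update did not apply to every core, which is worth ruling out before blaming the kernel.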

So, with the information in this thread, our guesses are:

- This problem only occurs on Intel Broadwell and Haswell CPUs

- This problem is a kernel (KVM) bug

- After about 12 hours of running, or at midnight under low load, the guest kernel tries to make some (CPU or power) state change and triggers this bug

Should we just comment out that assertion as a "workaround"?

Further reading:

- https://www.reddit.com/r/VFIO/comments/s1k5yg/win10_guest_crashes_after_a_few_minutes/

- https://access.redhat.com/solutions/5749121

- https://gitlab.com/qemu-project/qemu/-/issues/1047 (may not be related)

- https://bugzilla.kernel.org/show_bug.cgi?id=216003 (may not be related)
