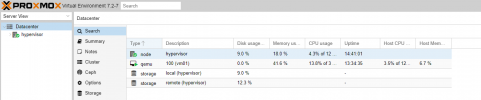

VM shows 0.0% disk usage

- Thread starter Amadex

At the moment, disk usage for VMs is not tracked and therefore this value is always displayed as 0%.

There is still an active issue on this problem. Please see this issue on our bug tracker for more information or if you want to contribute.

Thanks for the answer. I've switched from Microsoft Hyper-V to Proxmox, so: questions, questions.

Workaround for showing VM disk usage on Ceph storage in a Proxmox Virtual Environment (PVE) cluster.

I will also submit the code to Bugzilla (after checking the development guidelines), and I will work on ZFS storage soon, since it is another popular choice in PVE cluster setups. I am sharing it here in case anyone wants to refer to it, use it, or give feedback.

Introduction:

This document provides step-by-step instructions for implementing a workaround for Ceph storage utilization in a Proxmox Virtual Environment (PVE) cluster. Specifically, it addresses displaying boot disk usage for virtual machines (VMs), including snapshot space, in a Ceph storage configuration.

Procedure:

1. Back Up the Original Perl File:

Before making any changes, create a backup of the original Perl file so you can roll back.

Code:

    cp /usr/share/perl5/PVE/QemuServer.pm /opt/QemuServer-original-`date +%Y-%m-%d-%s`.pm

2. Edit the Perl File:

Open the Perl file for editing. Any text editor works; this example uses vi.

Code:

    vi /usr/share/perl5/PVE/QemuServer.pm

Inside the editor, locate the line containing "{disk}" (around line 2944).
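Before moving on to the code change, the backup from step 1 can be made a little safer by verifying the copy and keeping its path for a rollback. The following is only a sketch: `backup_file` is a hypothetical helper name (not part of PVE), and it assumes GNU `date` and `cmp` are available, as on a standard Debian-based PVE node. Also keep in mind that a later update of the package that ships QemuServer.pm would likely overwrite the edit, so the change may need to be reapplied.

```shell
#!/bin/sh
# backup_file SRC DESTDIR: copy SRC into DESTDIR under a timestamped name,
# verify the copy byte-for-byte, and print the path of the backup.
# (backup_file is a hypothetical helper, not a PVE tool.)
backup_file() {
    src=$1
    destdir=$2
    stamp=$(date +%Y-%m-%d-%s)
    dest="$destdir/$(basename "$src" .pm)-original-$stamp.pm"
    cp -p "$src" "$dest" || return 1
    cmp -s "$src" "$dest" || return 1
    printf '%s\n' "$dest"
}

# Usage on a PVE node (commented out so the sketch has no side effects):
# backup=$(backup_file /usr/share/perl5/PVE/QemuServer.pm /opt)
#
# To roll back later:
# cp "$backup" /usr/share/perl5/PVE/QemuServer.pm
# systemctl restart pvestatd.service
```

If the copy or the comparison fails, the function returns a non-zero status and prints nothing, so a script can stop before the file is edited.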

3. Modify the Perl Code:

You will see the following code block:

Code:

    my $size = PVE::QemuServer::Drive::bootdisk_size($storecfg, $conf);
    if (defined($size)) {
        $d->{disk} = 0; # no info available
        $d->{maxdisk} = $size;
    } else {
        $d->{disk} = 0;
        $d->{maxdisk} = 0;
    }

After the comment line "# no info available", add the following code to retrieve disk usage from the Ceph pool for VMs:

Code:

    ##### CODE TO FETCH VM DISK USAGE FROM CEPH POOL START #####
    my @bootdiskorder = split('=', $conf->{boot});
    my @bootdiskname = split(';', $bootdiskorder[1]);
    my @bootdiskinfo = split(",", $conf->{$bootdiskname[0]});
    my @bootdiskdetail = split(":", $bootdiskinfo[0]);
    my $bootdiskstorage = $bootdiskdetail[0];
    my $bootdiskimage = $bootdiskdetail[1];
    if (defined $storecfg->{ids}->{$bootdiskstorage}->{type}) {
        my $bootdisktype = $storecfg->{ids}->{$bootdiskstorage}->{type};
        my $bootdiskpool = $storecfg->{ids}->{$bootdiskstorage}->{pool};
        if ($bootdisktype eq "rbd") {
            my $cephrbddiskinfocmd = "rbd disk-usage -p " . $bootdiskpool . " " . $bootdiskimage . " --format=json";
            my $cephrbddiskinfo = `$cephrbddiskinfocmd`;
            $cephrbddiskinfo =~ s/\n/""/eg;
            $cephrbddiskinfo =~ s/\r/""/eg;
            $cephrbddiskinfo =~ s/\t/""/eg;
            $cephrbddiskinfo =~ s/\0/""/eg;
            $cephrbddiskinfo =~ s/^[a-zA-z0-9,]//g;
            my $total_used_size = 0;
            if ($cephrbddiskinfo =~ /$bootdiskimage/) {
                my $cephrbddiskinfoarray = decode_json($cephrbddiskinfo);
                foreach my $image (@{$cephrbddiskinfoarray->{'images'}}) {
                    if (defined $image->{'used_size'}) {
                        $total_used_size += $image->{'used_size'};
                    }
                }
                $d->{disk} = $total_used_size;
            }
        }
    }
    ##### CODE TO FETCH VM DISK USAGE FROM CEPH POOL END #####

4. Restart the pvestatd Service:

After making the necessary changes, restart the pvestatd service to apply the modifications.

Code:

    systemctl restart pvestatd.service

5. Check for Errors:

Monitor the system logs for any potential errors to ensure that the changes were applied without issues.

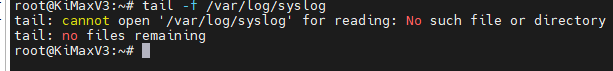

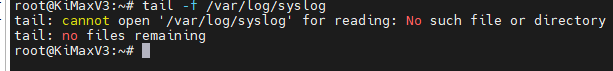

Code:

    tail -f /var/log/syslog

6. Verify Disk Usage:

If everything is working, you should now see disk usage for running VMs (state "ON"), including boot disk usage and the percentage used.

I hope this helps someone, and that we get some feedback too.

Thanks in advance.

-Deepen.

Hello everybody,

Thank you very much for the post and the solution.

But after carefully following it step by step, it didn't work for me.

I'm digging up this post!

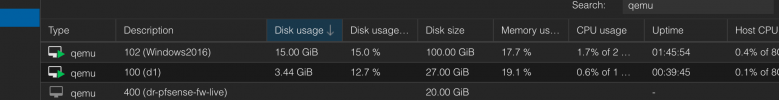

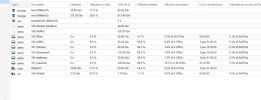

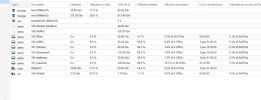

Here are 3 screenshots:

- the code inserted in the "QemuServer.pm" file

- the table with all the VMs, where we can clearly see that disk usage is abnormally at 0%, while it still works correctly for the "LXC" container

- the output of "tail -f /var/log/syslog", which shows that the log file is missing, so no errors are reported!

Thank you in advance for your help.

Sincerely

@+++

I hope your storage uses Ceph RBD, as the code I wrote is currently designed exclusively for Ceph storage (I will publish ZFS storage compatibility soon). Additionally, syslog is not installed by default in PVE 8, so you will need to install the rsyslog package to get /var/log/syslog.
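If rsyslog is not installed, the systemd journal is another place to look for pvestatd messages. The sketch below assumes the service runs as the systemd unit `pvestatd.service` (the name used in step 4); `log_follow_cmd` is a hypothetical helper that only prints which command to run, depending on whether the syslog file exists.

```shell
#!/bin/sh
# log_follow_cmd [SYSLOG_PATH]: print the command to use for following
# pvestatd messages. Falls back to the systemd journal when the syslog
# file is not present. (log_follow_cmd is a hypothetical helper name.)
log_follow_cmd() {
    syslog=${1:-/var/log/syslog}
    if [ -r "$syslog" ]; then
        printf 'tail -f %s\n' "$syslog"
    else
        printf 'journalctl -u pvestatd.service -f\n'
    fi
}

log_follow_cmd
```

A Perl syntax error in the edited file would normally show up in this output right after the service restart.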

Hi, have you been able to publish the compatibility code somewhere? I've searched for it but without success.

Thanks for your great work!

The complete code for both Ceph RBD and ZFS is below. Keep in mind that ZFS may report more space than is actually allocated; for example, a 100 GB disk can show as 155 GB if a snapshot is attached.

Code:

    ##### CODE TO FETCH VM DISK USAGE FROM CEPH + ZFS POOL START #####
    my @bootdiskorder = split('=', $conf->{boot});
    my @bootdiskname = split(';', $bootdiskorder[1]);
    my @bootdiskinfo = split(",", $conf->{$bootdiskname[0]});
    my @bootdiskdetail = split(":", $bootdiskinfo[0]);
    my $bootdiskstorage = $bootdiskdetail[0];
    my $bootdiskimage = $bootdiskdetail[1];
    if (defined $storecfg->{ids}->{$bootdiskstorage}->{type}) {
        my $bootdisktype = $storecfg->{ids}->{$bootdiskstorage}->{type};
        my $bootdiskpool = $storecfg->{ids}->{$bootdiskstorage}->{pool};
        if ($bootdisktype eq "zfspool") {
            my $zfsdiskinfocmd ="zfs get -H -p -oname,value used ".$bootdiskpool."/".$bootdiskimage;
            my $zfsdiskinfo=`$zfsdiskinfocmd`;
            $zfsdiskinfo =~ s/\n/""/eg;
            $zfsdiskinfo =~ s/\r/""/eg;
            my $total_used_size = 0;
            if ($zfsdiskinfo =~ /$bootdiskimage/) {
                my @zfsdiskbytes=split("\t",$zfsdiskinfo);
                $total_used_size=$zfsdiskbytes[1];
            }
            $d->{disk} = $total_used_size;
        }
        if ($bootdisktype eq "rbd") {
            my $cephrbddiskinfocmd = "rbd disk-usage -p " . $bootdiskpool . " " . $bootdiskimage . " --format=json";
            my $cephrbddiskinfo = `$cephrbddiskinfocmd`;
            $cephrbddiskinfo =~ s/\n/""/eg;
            $cephrbddiskinfo =~ s/\r/""/eg;
            $cephrbddiskinfo =~ s/\t/""/eg;
            $cephrbddiskinfo =~ s/\0/""/eg;
            $cephrbddiskinfo =~ s/^[a-zA-z0-9,]//g;
            my $total_used_size = 0;
            if ($cephrbddiskinfo =~ /$bootdiskimage/) {
                my $cephrbddiskinfoarray = decode_json($cephrbddiskinfo);
                foreach my $image (@{$cephrbddiskinfoarray->{'images'}}) {
                    if (defined $image->{'used_size'}) {
                        $total_used_size += $image->{'used_size'};
                    }
                }
                $d->{disk} = $total_used_size;
            }
        }
    }
    ##### CODE TO FETCH VM DISK USAGE FROM CEPH POOL END #####

You can use my modified /usr/share/perl5/PVE/QemuServer.pm file to use the qemu guest agent to retrieve the disk usage:

https://gist.github.com/savalet/f1f34a9de9dfb331505294d96bae9f33

Nice idea to get it from the guest agent. Can you share the section you changed, so the relevant code can be picked out? Also, does this show all additionally attached disks or only the boot disk?

So looking through this and other threads, and at the bug report it seems this issue has been going on for 5 years. For such a basic hypervisor functionality is there any way to get this looked at with more priority? Not to be obtuse, but we're paying full licensing for 7 servers and this being outstanding and a weird workaround being the only fix is disappointing.

Maybe the fact that it's not implemented already should tip you off that it is not as easy as it seems. Using the disk usage from qemu is just wrong, as pointed out here. It's the same with the memory usage.

If there is a recommended workaround that customers are expected to generally perform then I don't think that "it's not as easy as it seems" holds much water. That could be automated behind the scenes.

There is no workaround, that's the problem. For e.g. thick-LVM, there will always be 100% disk usage. I don't think that "information" will help anyone.

I am not smart enough to understand what is going on yet, as I am still learning, but I was unable to get the storage info for my Red Hat 9 VMs specifically. All the others seem to be fine. They all have the QEMU guest agent. Is there any way to troubleshoot this?

For example for the line

Code:

my $zfsdiskinfocmd ="zfs get -H -p -oname,value used ".$bootdiskpool."/".$bootdiskimage;
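For troubleshooting, the command built by that line can also be run by hand to see what the patch would read. With `-H -p`, `zfs get` prints one tab-separated line per dataset with the value in bytes. The sketch below parses that format; the dataset name and the byte count are made-up examples, and `zfs_used_bytes` is a hypothetical helper. Since `used` also counts snapshot space and reservations, comparing it with `usedbydataset` and `usedbysnapshots` may explain a value larger than the disk size.

```shell
#!/bin/sh
# zfs_used_bytes: read "name<TAB>value" lines on stdin (the format of
# `zfs get -H -p -o name,value used DATASET`) and print the value column.
# (zfs_used_bytes is a hypothetical helper name.)
zfs_used_bytes() {
    awk -F '\t' 'NF >= 2 { print $2 }'
}

# Sample line with made-up numbers, standing in for real zfs output.
printf 'rpool/data/vm-100-disk-0\t166429982720\n' | zfs_used_bytes

# On a real node (dataset name is hypothetical):
# zfs get -H -p -o name,value used rpool/data/vm-100-disk-0 | zfs_used_bytes
#
# To see how much of "used" is live data versus snapshots:
# zfs get -H -p -o property,value used,usedbydataset,usedbysnapshots rpool/data/vm-100-disk-0
```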

Code:

    my $size = PVE::QemuServer::Drive::bootdisk_size($storecfg, $conf);
    if (defined($size)) {
        $d->{disk} = 0; # no info available
        $d->{maxdisk} = $size;
    } else {
        $d->{disk} = 0;
        $d->{maxdisk} = 0;
    }

Unless somehow the storage config part from here

Code:

    if (defined $storecfg->{ids}->{$bootdiskstorage}->{type}) {

EDIT:

ISSUE WAS BOOT ORDER

The CD-ROM was the first boot item, which should normally be the case, right? But I guess changing it to come after the disk, or disabling it, will fix this.
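The patch takes the first entry after `order=` in the VM's `boot` setting, so with a CD-ROM drive listed first it inspects the CD-ROM entry, whose storage is neither RBD nor ZFS, and the usage stays at 0. Besides reordering the boot devices, the lookup itself could skip CD-ROM and network entries. The following is only a shell sketch of that selection logic, not a tested change to QemuServer.pm: `first_boot_disk` is a hypothetical helper, the sample config is made up, and it assumes the `boot: order=...` format and that CD-ROM drives carry `media=cdrom`.

```shell
#!/bin/sh
# first_boot_disk CONFIG_FILE: print the first device in the boot order
# that is neither a CD-ROM drive (media=cdrom) nor a network device.
# On a PVE node the VM config would be /etc/pve/qemu-server/<vmid>.conf.
# (first_boot_disk is a hypothetical helper name.)
first_boot_disk() {
    conf=$1
    # "boot: order=ide2;scsi0;net0" -> "ide2 scsi0 net0"
    order=$(sed -n 's/^boot: *order=//p' "$conf" | head -n 1 | tr ';' ' ')
    for dev in $order; do
        case "$dev" in
            net*) continue ;;
        esac
        line=$(grep "^$dev: " "$conf" | head -n 1)
        [ -n "$line" ] || continue
        case "$line" in
            *media=cdrom*) continue ;;
        esac
        printf '%s\n' "$dev"
        return 0
    done
    return 1
}

# Made-up sample config with the CD-ROM first in the boot order.
sample=$(mktemp)
cat > "$sample" <<'EOF'
boot: order=ide2;scsi0;net0
ide2: local:iso/example.iso,media=cdrom,size=9G
scsi0: ceph-vm:vm-101-disk-0,size=100G
net0: virtio=AA:BB:CC:DD:EE:FF,bridge=vmbr0
EOF
first_boot_disk "$sample"
rm -f "$sample"
```

For the sample config this prints `scsi0`, the entry the usage lookup would actually need.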