Hi guys, Proxmox beginner here.

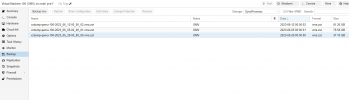

I searched through the forum but couldn't solve my problem. My VM ran out of space today, and it seems like the "data" folder is full.

I can't find how to free up space in this folder.

I tried to give you guys some info with these commands. Let me know if I can provide anything else useful. Thanks!

Code:

    root@pve1:/dev# df -h
    Filesystem                      Size  Used Avail Use% Mounted on
    udev                             16G     0   16G   0% /dev
    tmpfs                           3.2G  1.3M  3.2G   1% /run
    /dev/mapper/pve-root             68G   14G   51G  22% /
    tmpfs                            16G   46M   16G   1% /dev/shm
    tmpfs                           5.0M     0  5.0M   0% /run/lock
    /dev/nvme0n1p2                  511M  336K  511M   1% /boot/efi
    /dev/fuse                       128M   20K  128M   1% /etc/pve
    192.168.0.100:/volume1/proxmox   11T  3.7T  6.9T  35% /mnt/pve/SynoProxmox
    tmpfs                           3.2G     0  3.2G   0% /run/user/0

    root@pve1:/dev# lvs
      LV              VG  Attr       LSize   Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
      base-500-disk-0 pve Vri-a-tz-k   8.00g data         29.90
      data            pve twi-aotzD- 141.91g             100.00   4.30
      root            pve -wi-ao---- <69.61g
      swap            pve -wi-ao----   7.56g
      vm-100-disk-0   pve Vwi-aotz--  64.00g data         79.08
      vm-100-disk-1   pve Vwi-aotz--  64.00g data         85.94
      vm-110-disk-0   pve Vwi-aotz--   4.00g data         75.65
      vm-120-disk-0   pve Vwi-a-tz--   8.00g data         41.69
      vm-130-disk-0   pve Vwi-a-tz--   4.00m data         14.06
      vm-130-disk-1   pve Vwi-a-tz--  32.00g data         30.42
      vm-200-disk-0   pve Vwi-a-tz--  64.00g data         27.82

    root@pve1:/dev# dmsetup ls --tree
    pve-base--500--disk--0 (253:13)
     └─pve-data-tpool (253:4)
        ├─pve-data_tdata (253:3)
        │  └─ (259:3)
        └─pve-data_tmeta (253:2)
           └─ (259:3)
    pve-data (253:5)
     └─pve-data-tpool (253:4)
        ├─pve-data_tdata (253:3)
        │  └─ (259:3)
        └─pve-data_tmeta (253:2)
           └─ (259:3)
    pve-root (253:1)
     └─ (259:3)
    pve-swap (253:0)
     └─ (259:3)
    pve-vm--100--disk--0 (253:11)
     └─pve-data-tpool (253:4)
        ├─pve-data_tdata (253:3)
        │  └─ (259:3)
        └─pve-data_tmeta (253:2)
           └─ (259:3)
    pve-vm--100--disk--1 (253:12)
     └─pve-data-tpool (253:4)
        ├─pve-data_tdata (253:3)
        │  └─ (259:3)
        └─pve-data_tmeta (253:2)
           └─ (259:3)
    pve-vm--110--disk--0 (253:7)
     └─pve-data-tpool (253:4)
        ├─pve-data_tdata (253:3)
        │  └─ (259:3)
        └─pve-data_tmeta (253:2)
           └─ (259:3)
    pve-vm--120--disk--0 (253:8)
     └─pve-data-tpool (253:4)
        ├─pve-data_tdata (253:3)
        │  └─ (259:3)
        └─pve-data_tmeta (253:2)
           └─ (259:3)
    pve-vm--130--disk--0 (253:9)
     └─pve-data-tpool (253:4)
        ├─pve-data_tdata (253:3)
        │  └─ (259:3)
        └─pve-data_tmeta (253:2)
           └─ (259:3)
    pve-vm--130--disk--1 (253:10)
     └─pve-data-tpool (253:4)
        ├─pve-data_tdata (253:3)
        │  └─ (259:3)
        └─pve-data_tmeta (253:2)
           └─ (259:3)
    pve-vm--200--disk--0 (253:6)
     └─pve-data-tpool (253:4)
        ├─pve-data_tdata (253:3)
        │  └─ (259:3)
        └─pve-data_tmeta (253:2)
           └─ (259:3)
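
For what it's worth, here is a rough sanity check on the `lvs` numbers above, written as a small Python sketch. It assumes that each volume's Data% is the share of its own LSize that is actually mapped in the "data" pool, and that the volumes don't share blocks with each other (if some are snapshots or clones, the sum would overcount). The sizes and percentages are copied by hand from the output; the names `THIN_VOLUMES`, `POOL_SIZE_GIB`, `allocated_gib` and `provisioned_gib` are just made up for this example.

```python
# Rows copied by hand from the lvs output: (name, LSize in GiB, Data% of that volume).
# vm-130-disk-0 is listed as 4.00m, so it is converted to GiB here.
THIN_VOLUMES = [
    ("base-500-disk-0", 8.0, 29.90),
    ("vm-100-disk-0", 64.0, 79.08),
    ("vm-100-disk-1", 64.0, 85.94),
    ("vm-110-disk-0", 4.0, 75.65),
    ("vm-120-disk-0", 8.0, 41.69),
    ("vm-130-disk-0", 4.0 / 1024, 14.06),
    ("vm-130-disk-1", 32.0, 30.42),
    ("vm-200-disk-0", 64.0, 27.82),
]

# LSize of the "data" pool from the same output.
POOL_SIZE_GIB = 141.91


def allocated_gib(volumes):
    """Sum of the space each volume has actually mapped (LSize * Data%)."""
    return sum(size * pct / 100.0 for _, size, pct in volumes)


def provisioned_gib(volumes):
    """Sum of the virtual sizes promised to the volumes."""
    return sum(size for _, size, _ in volumes)


if __name__ == "__main__":
    used = allocated_gib(THIN_VOLUMES)
    promised = provisioned_gib(THIN_VOLUMES)
    print(f"allocated by volumes: {used:.2f} GiB")
    print(f"pool size:            {POOL_SIZE_GIB:.2f} GiB")
    print(f"provisioned in total: {promised:.2f} GiB")
    print(f"overcommit ratio:     {promised / POOL_SIZE_GIB:.2f}")
```

If those assumptions hold, the allocated total comes out at roughly the pool size, which would match the 100.00 Data% shown for "data", while the volumes together are provisioned for noticeably more than the pool can hold.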