vm resize disk offline error

- Thread starter squirell



Hi,

what kind of storage is the disk on? What's the output of qemu-img info /path/to/your/disk.qcow2?

Storage is NFS (Debian server), disk type is qcow2.

This happens only with the NFS storage backend. If the disk is on local storage, there is no error. Maybe increase the operation timeout?

Yes, there is a 5 second timeout to read the current size of the disk. But that should normally be enough. Is there much load on your network connection to the NFS or is the latency that high?

Maybe heavy I/O, right. But why doesn't the running VM "unfreeze" after the disk size change?

some logs:

Bash:

pvedaemon[9955]: <root@pam> update VM 114: resize --disk scsi0 --size +90G

pvedaemon[9955]: VM 114 qmp command failed - VM 114 qmp command 'block_resize' failed - got timeout

qm[26812]: VM 114 qmp command failed - VM 114 qmp command 'change' failed - got timeout


Unfortunately, the systemd-journald Storage option in this VM (114) was set to "auto", so no logs were saved.
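With Storage=auto (the journald default), the journal is written to disk only if /var/log/journal exists; otherwise it stays under /run/log/journal and is lost on reboot. A minimal sketch of a drop-in that switches a guest to persistent journal storage; the helper name enable_persistent_journal and the file name persistent.conf are illustrative choices, not something from this thread:

```shell
#!/bin/sh
# Sketch: switch systemd-journald to persistent storage via a drop-in.
# With Storage=auto (the default), journald writes to disk only if
# /var/log/journal exists; Storage=persistent creates it when needed.
# The helper name and the file name persistent.conf are illustrative.
enable_persistent_journal() {
    # default drop-in directory read by systemd-journald
    dir="${1:-/etc/systemd/journald.conf.d}"
    mkdir -p "$dir" || return 1
    printf '[Journal]\nStorage=persistent\n' > "$dir/persistent.conf"
}
```

Run it as root inside the guest, then apply it with systemctl restart systemd-journald.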

Bash:

proxmox-ve: 6.4-1 (running kernel: 5.4.106-1-pve)

pve-manager: 6.4-6 (running version: 6.4-6/be2fa32c)

pve-kernel-5.4: 6.4-2

pve-kernel-helper: 6.4-2

pve-kernel-5.4.114-1-pve: 5.4.114-1

pve-kernel-5.4.106-1-pve: 5.4.106-1

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.1.2-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.1.0

libproxmox-backup-qemu0: 1.0.3-1

libpve-access-control: 6.4-1

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.4-3

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.2-2

libpve-storage-perl: 6.4-1

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.1.6-2

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.5-5

pve-cluster: 6.4-1

pve-container: 3.3-5

pve-docs: 6.4-2

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.2-3

pve-ha-manager: 3.1-1

pve-i18n: 2.3-1

pve-qemu-kvm: 5.2.0-6

pve-xtermjs: 4.7.0-3

qemu-server: 6.4-2

smartmontools: 7.2-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 2.0.4-pve1

Tried it again (powered off VM, resized disk), and again got a timeout:


Code:

pvedaemon[7253]: command '/usr/bin/qemu-img info '--output=json' /mnt/pve/vmbackup3-r1/images/139/vm-139-disk-0.qcow2' failed: got timeout

pvedaemon[7253]: could not parse qemu-img info command output for '/mnt/pve/vmbackup3-r1/images/139/vm-139-disk-0.qcow2'

Manually:

Code:

# qemu-img info /mnt/pve/vmbackup3-r1/images/139/vm-139-disk-0.qcow2

image: /mnt/pve/vmbackup3-r1/images/139/vm-139-disk-0.qcow2

file format: qcow2

virtual size: 300 GiB (322122547200 bytes)

disk size: 271 GiB

cluster_size: 65536

Format specific information:

compat: 1.1

compression type: zlib

lazy refcounts: false

refcount bits: 16

corrupt: false

extended l2: false

If I try it a second time, it works:

Code:

pvedaemon[20968]: <root@pam> update VM 139: resize --disk scsi0 --size +200G

Code:

# qemu-img info --output=json /mnt/pve/vmbackup3-r1/images/139/vm-139-disk-0.qcow2

{

"virtual-size": 536870912000,

"filename": "/mnt/pve/vmbackup3-r1/images/139/vm-139-disk-0.qcow2",

"cluster-size": 65536,

"format": "qcow2",

"actual-size": 290950795264,

"format-specific": {

"type": "qcow2",

"data": {

"compat": "1.1",

"compression-type": "zlib",

"lazy-refcounts": false,

"refcount-bits": 16,

"corrupt": false,

"extended-l2": false

}

},

"dirty-flag": false

}
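The failing step above is qemu-img info --output=json run under a time limit. A minimal sketch for reproducing that check by hand, assuming GNU coreutils timeout (which exits with status 124 when the limit expires) and the 5 second limit mentioned in this thread; the function name virtual_size and the QEMU_IMG override are hypothetical. It also extracts virtual-size, which makes the resize easy to confirm: here it went from 322122547200 bytes (300 GiB) to 536870912000 bytes (500 GiB), i.e. +200 GiB.

```shell
#!/bin/sh
# Sketch: read an image's virtual size via qemu-img info --output=json,
# failing like the logs above if the call takes longer than the limit.
# virtual_size and QEMU_IMG are illustrative names, not Proxmox VE APIs.
QEMU_IMG="${QEMU_IMG:-qemu-img}"

virtual_size() {
    image="$1"
    limit="${2:-5}"   # seconds; 5 matches the timeout discussed here
    json=$(timeout "$limit" "$QEMU_IMG" info --output=json "$image")
    status=$?
    # GNU timeout exits with 124 when the command was killed
    if [ "$status" -eq 124 ]; then
        echo "qemu-img info on $image: got timeout after ${limit}s" >&2
        return 124
    elif [ "$status" -ne 0 ]; then
        return "$status"
    fi
    # print the number after the first "virtual-size": key (no jq needed)
    printf '%s\n' "$json" \
        | sed -n 's/.*"virtual-size": *\([0-9][0-9]*\).*/\1/p' \
        | head -n 1
}
```

For the image above, virtual_size /mnt/pve/vmbackup3-r1/images/139/vm-139-disk-0.qcow2 should print the virtual-size field from the JSON shown; timing out returns status 124 instead.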


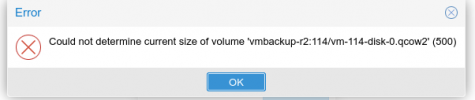

For people looking for "Could not determine current size of volume", this is the place.

I'm having the same problem with a qcow on an NFS mount; qemu-img info returns instantly with correct data.

Hi,

what operation leads to that error? Does it happen every time?

How long is "instantly"? Note that Proxmox VE also just uses qemu-img info. Can you time the command and execute it multiple times?

When trying to grow one particular disk for a VM. The "please wait" takes about 4 seconds.

Between 0.025 and 0.040 seconds.

Maybe there's some lock involved somewhere?
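The "time the command and execute it multiple times" request above can be done with a small loop that prints each run's wall-clock time, making it easy to spot outliers near the 5 second limit. A sketch assuming GNU date (for the %N nanoseconds field, available on Debian/Proxmox VE); time_runs is a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: run a command N times and print each run's wall-clock time
# in milliseconds. Requires GNU date for %N (nanoseconds).
# time_runs is an illustrative name, not a Proxmox VE tool.
time_runs() {
    runs="$1"; shift
    i=1
    while [ "$i" -le "$runs" ]; do
        start=$(date +%s%N)
        "$@" > /dev/null 2>&1       # discard the command's own output
        end=$(date +%s%N)
        echo "run $i: $(( (end - start) / 1000000 )) ms"
        i=$((i + 1))
    done
}
```

For example, time_runs 10 qemu-img info /mnt/pve/vmbackup3-r1/images/139/vm-139-disk-0.qcow2; any run approaching 5000 ms would be at risk of the timeout.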

The current timeout is 5 seconds. Unfortunately, we can't increase it just yet for technical reasons. The resize API would need to be converted into a worker task, which is a breaking change and can only happen for a major release (i.e. Proxmox VE 8).


What if you create the following script

Code:

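root@pve701 ~ # cat get-size.pl

#!/usr/bin/perl

use strict;

use warnings;

use PVE::Storage;

# volume ID in storage:path form, e.g. nfspve703:124/vm-124-disk-0.qcow2
my $volid = shift or die "need to specify volume ID\n";

# read the storage configuration (/etc/pve/storage.cfg)
my $storecfg = PVE::Storage::config();

# make sure the storage (e.g. the NFS mount) and the volume are active
PVE::Storage::activate_volumes($storecfg, [$volid]);

# query the size with a 5 second timeout, the limit discussed in this thread
my $size = PVE::Storage::volume_size_info($storecfg, $volid, 5);

die "did not get any size\n" if !defined($size);

print "size is $size\n";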

and run it, replacing the volume ID with your own of course?

Code:

root@pve701 ~ # perl get-size.pl nfspve703:124/vm-124-disk-0.qcow2

size is 5368709120

Works, but a bit slower: avg 0.51 seconds.

The resize API call does the very same thing: https://git.proxmox.com/?p=qemu-ser...7cf3044509721bc09cfd1eccf4e1d8e23df62bf#l4733

So really not sure why it doesn't work for you.

Can you try to execute the resize via CLI to see if there's a warning or additional information logged? E.g. qm disk resize 102 scsi0 +1G, replacing the VM ID, drive name and size accordingly.

Right, in our case it's qm resize, but that works. Since that cluster is due for an upgrade, I suppose it's a problem that will go away.

Thanks.

Where can I change the default timeouts? I didn't find it in the configuration. I use Terraform, and you can't set it there.

Hi,

almost all timeouts in Proxmox VE are not configurable. Please describe your issue in detail and what exact timeout you get.