Hi all, I'm quite a noob here, running Proxmox on my own home server, so please bear with me.

After upgrading the Proxmox kernel this morning (via a simple `apt upgrade`) and rebooting, one of my two VMs refuses to start. Following this post, I tried running `systemctl stop 100.slice` (100 is the ID of the offending VM), but it still shows up:

```bash
root@server:~# systemctl status qemu.slice
● qemu.slice
Loaded: loaded
Active: active since Fri 2022-02-04 08:44:52 GMT; 9min ago
Tasks: 31
Memory: 586.6M
CPU: 33.558s
CGroup: /qemu.slice
├─100.scope
│ └─1691 [kvm]
└─101.scope
  └─2015 /usr/bin/kvm -id 101 -name Pihole -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/101.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmeventd.sock,reco>
```

And of course, when I try to start it again, I get the following error:

```bash
root@server:~# qm start 100
timeout waiting on systemd
```
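For anyone trying to dig into the same thing, here is a small sketch of how the PIDs still listed under a VM's scope could be pulled out of the `systemctl status qemu.slice` output above. `scope_pids` is a hypothetical helper (not a Proxmox or systemd command), and it assumes the CGroup tree layout shown above (`├─100.scope`, `│ └─1691 [kvm]`).

```shell
# Hypothetical helper (not part of Proxmox): print the PIDs that
# `systemctl status qemu.slice` lists under one VM's scope.
# Usage: <status text on stdin> | scope_pids VMID
scope_pids() {
  local vmid="$1"
  awk -v want="${vmid}.scope" '
    # A blank line ends the CGroup tree; journal lines may follow it.
    /^[ \t]*$/ { inside = 0; next }
    {
      # Strip the tree-drawing prefix (spaces, "│", "├─", "└─", "●").
      entry = $0
      sub(/^[^0-9A-Za-z]*/, "", entry)
    }
    # A "<name>.scope" entry opens a new scope: note whether it is ours.
    entry ~ /\.scope$/ { inside = (entry == want); next }
    # Inside our scope, entries look like "<pid> <command line>".
    inside && entry ~ /^[0-9]+ / {
      split(entry, fields, " ")
      print fields[1]
    }
  '
}
```

With the untruncated output, `systemctl status qemu.slice --no-pager --full | scope_pids 100` should print `1691` here. Its state can then be checked with `ps -o pid,stat,wchan:32,args -p 1691`; a `D` (uninterruptible sleep) or `Z` (zombie) in the `STAT` column would suggest the old QEMU process never fully exited, which might be why the new start times out.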

For what it's worth, I'm running pve-manager/7.1-10/6ddebafe (running kernel: 5.13.19-4-pve) on a Dell T710 server with a hardware RAID5 configuration.

If you need any more details I'll be happy to oblige.

EDIT: in case you need the output of `pveversion --verbose`:

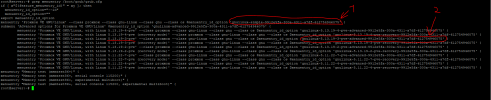

```bash
root@server:~# pveversion --verbose
proxmox-ve: 7.1-1 (running kernel: 5.13.19-4-pve)
pve-manager: 7.1-10 (running version: 7.1-10/6ddebafe)
pve-kernel-helper: 7.1-9
pve-kernel-5.13: 7.1-7
pve-kernel-5.11: 7.0-10
pve-kernel-5.13.19-4-pve: 5.13.19-8
pve-kernel-5.13.19-3-pve: 5.13.19-7
pve-kernel-5.13.19-2-pve: 5.13.19-4
pve-kernel-5.11.22-7-pve: 5.11.22-12
pve-kernel-5.11.22-4-pve: 5.11.22-9
ceph: 16.2.7
ceph-fuse: 16.2.7
corosync: 3.1.5-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.22-pve2
libproxmox-acme-perl: 1.4.1
libproxmox-backup-qemu0: 1.2.0-1
libpve-access-control: 7.1-6
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.1-2
libpve-guest-common-perl: 4.0-3
libpve-http-server-perl: 4.1-1
libpve-storage-perl: 7.0-15
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 4.0.11-1
lxcfs: 4.0.11-pve1
novnc-pve: 1.3.0-1
proxmox-backup-client: 2.1.5-1
proxmox-backup-file-restore: 2.1.5-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.4-5
pve-cluster: 7.1-3
pve-container: 4.1-3
pve-docs: 7.1-2
pve-edk2-firmware: 3.20210831-2
pve-firewall: 4.2-5
pve-firmware: 3.3-4
pve-ha-manager: 3.3-3
pve-i18n: 2.6-2
pve-qemu-kvm: 6.1.1-1
pve-xtermjs: 4.16.0-1
qemu-server: 7.1-4
smartmontools: 7.2-1
spiceterm: 3.2-2
swtpm: 0.7.0~rc1+2
vncterm: 1.7-1
zfsutils-linux: 2.1.2-pve1
root@server:~#
```
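Since the VM stopped starting right after the kernel upgrade, it may help to see at a glance which older kernels are still installed; the output above lists 5.13.19-3-pve, 5.13.19-2-pve and two 5.11 kernels besides the running 5.13.19-4-pve. A minimal sketch, assuming the `pve-kernel-<version>-pve:` line format shown above; `installed_pve_kernels` is a hypothetical helper, not a Proxmox command.

```shell
# Hypothetical helper (not part of Proxmox): read `pveversion --verbose`
# output on stdin and print each installed versioned kernel package,
# marking the running one. The running kernel defaults to `uname -r`
# but can be passed as the first argument.
installed_pve_kernels() {
  local running="${1:-$(uname -r)}"
  # Keep only lines like "pve-kernel-5.13.19-4-pve: 5.13.19-8" and
  # extract the "5.13.19-4-pve" part; meta-packages such as
  # "pve-kernel-5.13:" and "pve-kernel-helper:" do not match.
  sed -n 's/^pve-kernel-\([0-9][0-9.]*-[0-9][0-9]*-pve\):.*/\1/p' |
    while read -r k; do
      if [ "$k" = "$running" ]; then
        echo "$k (running)"
      else
        echo "$k"
      fi
    done
}
```

Usage would be `pveversion --verbose | installed_pve_kernels`; on this server it should list the five kernels above, with 5.13.19-4-pve marked as running.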
