Hello,

I'm new to the Proxmox world, and I have a problem with a Windows Server 2019 VM that stopped by itself.

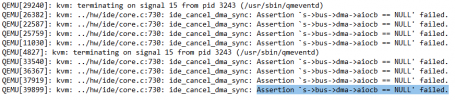

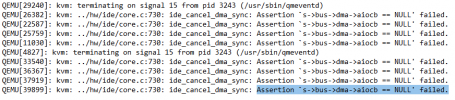

In the syslog file, I found these messages:



```
Feb 3 13:15:06 promox2 QEMU[1946]: kvm: hw/ide/core.c:724: ide_cancel_dma_sync: Assertion `s->bus->dma->aiocb == NULL' failed.
Feb 3 13:15:09 promox2 pvestatd[1739]: VM 100 qmp command failed - VM 100 not running
```

Does anybody know what is happening?