Hi,

I have built a new 3-node PVE 7.2.7 cluster with iSCSI shared storage.

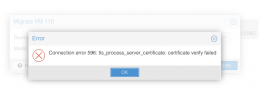

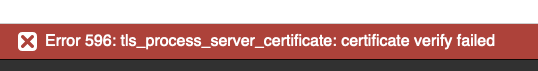

I can migrate from node 1 to node 2 without issues, but I am unable to migrate from node 1 or node 2 to node 3. I receive the following error:

```
2022-07-22 11:20:22 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=pve3' root@172.16.60.103 /bin/true
2022-07-22 11:20:22 @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
2022-07-22 11:20:22 @ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! @
2022-07-22 11:20:22 @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
2022-07-22 11:20:22 IT IS POSSIBLE THAT SOMEONE IS DOING SOMETHING NASTY!
2022-07-22 11:20:22 Someone could be eavesdropping on you right now (man-in-the-middle attack)!
2022-07-22 11:20:22 It is also possible that a host key has just been changed.
2022-07-22 11:20:22 The fingerprint for the RSA key sent by the remote host is
2022-07-22 11:20:22 SHA256:jbeo+3cJxCdtiBcd1tUGS2q0xsiKEcm0SLQRB5AR/MY.
2022-07-22 11:20:22 Please contact your system administrator.
2022-07-22 11:20:22 Add correct host key in /root/.ssh/known_hosts to get rid of this message.
2022-07-22 11:20:22 Offending RSA key in /etc/ssh/ssh_known_hosts:1
2022-07-22 11:20:22 remove with:
2022-07-22 11:20:22 ssh-keygen -f "/etc/ssh/ssh_known_hosts" -R "pve3"
2022-07-22 11:20:22 RSA host key for pve3 has changed and you have requested strict checking.
2022-07-22 11:20:22 Host key verification failed.
2022-07-22 11:20:22 ERROR: migration aborted (duration 00:00:00): Can't connect to destination address using public key
TASK ERROR: migration aborted
```

Any assistance would be very much appreciated. I ran the removal command suggested above (`ssh-keygen -f "/etc/ssh/ssh_known_hosts" -R "pve3"`) and still had no luck.

Cheers