Hello everyone,

I encountered a problem while trying to perform a migration:

Code:

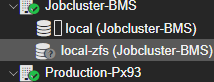

2023-07-26 13:51:17 starting migration of VM 2000 to node 'Jobcluster-BMS' (x.x.x.x)

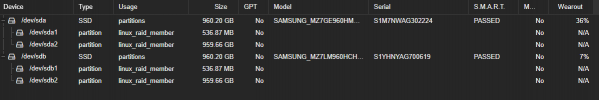

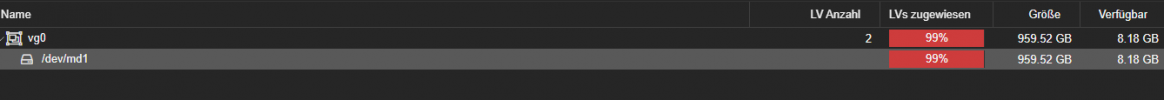

2023-07-26 13:51:17 found local disk 'local-zfs:vm-2000-disk-0' (in current VM config)

2023-07-26 13:51:17 copying local disk images

2023-07-26 13:51:18 full send of rpool/data/vm-2000-disk-0@__migration__ estimated size is 16.9G

2023-07-26 13:51:18 total estimated size is 16.9G

2023-07-26 13:51:19 command 'zfs recv -F -- rpool/data/vm-2000-disk-0' failed: open3: exec of zfs recv -F -- rpool/data/vm-2000-disk-0 failed: No such file or directory at /usr/share/perl5/PVE/Tools.pm line 455.

2023-07-26 13:51:19 command 'zfs send -Rpv -- rpool/data/vm-2000-disk-0@__migration__' failed: got signal 13

send/receive failed, cleaning up snapshot(s)..

2023-07-26 13:51:19 ERROR: storage migration for 'local-zfs:vm-2000-disk-0' to storage 'local-zfs' failed - command 'set -o pipefail && pvesm export local-zfs:vm-2000-disk-0 zfs - -with-snapshots 0 -snapshot __migration__ | /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=Jobcluster-BMS' root@x.x.x.x -- pvesm import local-zfs:vm-2000-disk-0 zfs - -with-snapshots 0 -snapshot __migration__ -delete-snapshot __migration__ -allow-rename 1' failed: exit code 2

2023-07-26 13:51:19 aborting phase 1 - cleanup resources

2023-07-26 13:51:19 ERROR: migration aborted (duration 00:00:02): storage migration for 'local-zfs:vm-2000-disk-0' to storage 'local-zfs' failed - command 'set -o pipefail && pvesm export local-zfs:vm-2000-disk-0 zfs - -with-snapshots 0 -snapshot __migration__ | /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=Jobcluster-BMS' root@x.x.x.x -- pvesm import local-zfs:vm-2000-disk-0 zfs - -with-snapshots 0 -snapshot __migration__ -delete-snapshot __migration__ -allow-rename 1' failed: exit code 2

TASK ERROR: migration aborted

Does anyone know the cause of this issue?

It would be great if you could help me.

(Note: I replaced the actual IP address with 'x.x.x.x' for privacy.)
