PVE 7.4

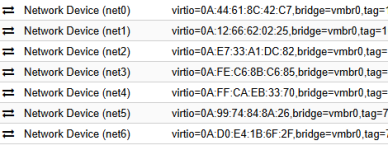

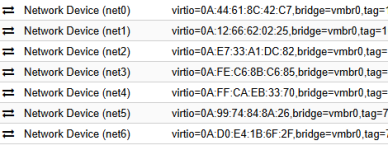

The 7th vNIC in the VM configuration was added as ens1 and not ens24. Why? Is it the same in 8.x?

Code:

    /var/log# ip l
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    2: ens18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
        link/ether 0a:44:61:8c:42:c7 brd ff:ff:ff:ff:ff:ff
        altname enp0s18
    3: ens19: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
        link/ether 0a:12:66:62:02:25 brd ff:ff:ff:ff:ff:ff
        altname enp0s19
    4: ens20: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
        link/ether 0a:e7:33:a1:dc:82 brd ff:ff:ff:ff:ff:ff
        altname enp0s20
    5: ens21: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
        link/ether 0a:fe:c6:8b:c6:85 brd ff:ff:ff:ff:ff:ff
        altname enp0s21
    6: ens22: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
        link/ether 0a:ff:ca:eb:33:70 brd ff:ff:ff:ff:ff:ff
        altname enp0s22
    7: ens23: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
        link/ether 0a:99:74:84:8a:26 brd ff:ff:ff:ff:ff:ff
        altname enp0s23
    8: ens1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000
        link/ether 0a:d0:e4:1b:6f:2f brd ff:ff:ff:ff:ff:ff
        altname enp1s1
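One possible reading of the altnames in this output, assuming they follow systemd's predictable naming scheme (`enp<bus>s<slot>`, i.e. PCI bus and slot number): the first six vNICs sit on PCI bus 0 in slots 18 to 23, while the 7th reports bus 1, slot 1. That would suggest the `ensN` number tracks the PCI slot rather than the NIC count, though I have not confirmed why the 7th device ends up on a different bus. A small Python sketch to decode the names (the `parse_altname` helper and the `observed` mapping are just illustrative, copied from the output here):

```python
import re

# Assumption: altnames follow the systemd pattern enp<bus>s<slot>, decimal numbers.
ALTNAME = re.compile(r"^enp(?P<bus>\d+)s(?P<slot>\d+)$")


def parse_altname(altname):
    """Return (bus, slot) decoded from an altname such as 'enp0s18'."""
    match = ALTNAME.match(altname)
    if match is None:
        raise ValueError(f"unexpected altname format: {altname!r}")
    return int(match["bus"]), int(match["slot"])


# Interface name -> altname, copied from the `ip l` output in this post.
observed = {
    "ens18": "enp0s18",
    "ens19": "enp0s19",
    "ens20": "enp0s20",
    "ens21": "enp0s21",
    "ens22": "enp0s22",
    "ens23": "enp0s23",
    "ens1": "enp1s1",
}

for name, altname in observed.items():
    bus, slot = parse_altname(altname)
    print(f"{name}: PCI bus {bus}, slot {slot}")
```

If that reading is right, only `ens1` would show a bus other than 0, which matches it being the odd one out.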
