Hi, I recently upgraded from Proxmox 6 to 7. I have 1 VM and about 7 LXC containers running on it.

My VM runs OpenMediaVault with 4 passthrough 3TB WD Red disks and another 12TB WD Black connected through USB.

Today I noticed that my network shares were really slow, so I checked the OMV VM and I discovered I/O errors on all the disks, even the 12TB one which is brand new:

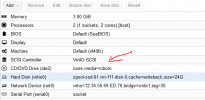

```
[413289.620177] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1377092879)
[413289.620401] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1377092623)
[413289.620672] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1377092335)
[413289.620895] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1377092079)
[413289.621122] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1377091584)
[413289.621238] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1377091577)
[415979.193504] blk_update_request: I/O error, dev vdc, sector 2561749528 op 0x1:(WRITE) flags 0x0 phys_seg 61 prio class 0
[415979.196641] blk_update_request: I/O error, dev vdc, sector 2561750016 op 0x1:(WRITE) flags 0x0 phys_seg 65 prio class 0
[415979.197571] blk_update_request: I/O error, dev vdc, sector 2561750536 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[415979.198623] blk_update_request: I/O error, dev vdc, sector 2561750552 op 0x1:(WRITE) flags 0x0 phys_seg 61 prio class 0
[415979.199583] blk_update_request: I/O error, dev vdc, sector 2561751040 op 0x1:(WRITE) flags 0x0 phys_seg 65 prio class 0
[415979.200678] blk_update_request: I/O error, dev vdc, sector 2561751560 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[415979.201701] blk_update_request: I/O error, dev vdc, sector 2561751576 op 0x1:(WRITE) flags 0x0 phys_seg 61 prio class 0
[415979.202722] blk_update_request: I/O error, dev vdc, sector 2561752064 op 0x1:(WRITE) flags 0x0 phys_seg 65 prio class 0
[415979.203610] blk_update_request: I/O error, dev vdc, sector 2561752584 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[415979.204614] blk_update_request: I/O error, dev vdc, sector 2561752600 op 0x1:(WRITE) flags 0x0 phys_seg 61 prio class 0
[415987.268192] blk_update_request: I/O error, dev vdb, sector 4099882560 op 0x1:(WRITE) flags 0x0 phys_seg 56 prio class 0
[415987.269386] blk_update_request: I/O error, dev vdb, sector 4099883008 op 0x1:(WRITE) flags 0x0 phys_seg 70 prio class 0
[415987.270351] blk_update_request: I/O error, dev vdb, sector 4099883568 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[415987.271278] blk_update_request: I/O error, dev vdb, sector 4099883584 op 0x1:(WRITE) flags 0x0 phys_seg 56 prio class 0
[415987.732732] blk_update_request: I/O error, dev vda, sector 4895432704 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[415987.733908] blk_update_request: I/O error, dev vda, sector 4895433728 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[415987.734936] blk_update_request: I/O error, dev vda, sector 4895434752 op 0x1:(WRITE) flags 0x0 phys_seg 31 prio class 0
[415987.927613] blk_update_request: I/O error, dev vdd, sector 4895432704 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[415987.928717] blk_update_request: I/O error, dev vdd, sector 4895433728 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[416032.007461] blk_update_request: I/O error, dev vda, sector 4379576800 op 0x1:(WRITE) flags 0x0 phys_seg 68 prio class 0
[416032.009257] blk_update_request: I/O error, dev vda, sector 4379577344 op 0x1:(WRITE) flags 0x0 phys_seg 58 prio class 0
[416032.010790] blk_update_request: I/O error, dev vda, sector 4379577808 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[416032.011669] blk_update_request: I/O error, dev vda, sector 4379577824 op 0x1:(WRITE) flags 0x0 phys_seg 68 prio class 0
[416032.012558] blk_update_request: I/O error, dev vda, sector 4379578368 op 0x1:(WRITE) flags 0x0 phys_seg 72 prio class 0
[416032.013533] blk_update_request: I/O error, dev vda, sector 4379579072 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[416032.014630] blk_update_request: I/O error, dev vda, sector 4379579088 op 0x1:(WRITE) flags 0x0 phys_seg 38 prio class 0
[416032.015524] blk_update_request: I/O error, dev vda, sector 4379579392 op 0x1:(WRITE) flags 0x0 phys_seg 88 prio class 0
[416032.016408] blk_update_request: I/O error, dev vda, sector 4379580096 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[416032.017554] blk_update_request: I/O error, dev vda, sector 4379580112 op 0x1:(WRITE) flags 0x0 phys_seg 38 prio class 0
[416548.442209] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1094829952)
[416548.442222] Buffer I/O error on device md127, logical block 1094829801
[416548.443247] Buffer I/O error on device md127, logical block 1094829802
[416548.444437] Buffer I/O error on device md127, logical block 1094829803
[416548.446862] Buffer I/O error on device md127, logical block 1094829804
[416548.447871] Buffer I/O error on device md127, logical block 1094829805
[416548.448611] Buffer I/O error on device md127, logical block 1094829806
[416548.449310] Buffer I/O error on device md127, logical block 1094829807
[416548.449977] Buffer I/O error on device md127, logical block 1094829808
[416548.450694] Buffer I/O error on device md127, logical block 1094829809
[416548.451294] Buffer I/O error on device md127, logical block 1094829810
[416548.452166] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1094829696)
[416548.452397] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1094829528)
[416548.452618] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1094829272)
[416548.452863] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1094828986)
[416548.453088] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1223793150)
[416548.453315] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 1223792894)
[416548.454021] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 640372608)
[416548.454253] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 640372545)
[416548.454501] EXT4-fs warning (device md127): ext4_end_bio:349: I/O error 10 writing to inode 72089625 starting block 640372289)
[441221.485209] blk_update_request: I/O error, dev vdd, sector 2759243088 op 0x1:(WRITE) flags 0x0 phys_seg 86 prio class 0
[441221.488351] blk_update_request: I/O error, dev vdd, sector 2759243776 op 0x1:(WRITE) flags 0x0 phys_seg 40 prio class 0
[441221.489100] blk_update_request: I/O error, dev vdd, sector 2759244096 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[441221.489862] blk_update_request: I/O error, dev vdd, sector 2759244112 op 0x1:(WRITE) flags 0x0 phys_seg 85 prio class 0
[454841.456051] blk_update_request: I/O error, dev vde, sector 23281261272 op 0x1:(WRITE) flags 0x0 phys_seg 113 prio class 0
[454841.459244] EXT4-fs warning (device vde1): ext4_end_bio:349: I/O error 10 writing to inode 363726718 starting block 2910157661)
[454841.459255] EXT4-fs warning (device vde1): ext4_end_bio:349: I/O error 10 writing to inode 363726718 starting block 2910157772)
[454841.459282] blk_update_request: I/O error, dev vde, sector 23281262176 op 0x1:(WRITE) flags 0x4000 phys_seg 254 prio class 0
[454841.459835] Buffer I/O error on device vde1, logical block 2910157405
[454841.462156] Buffer I/O error on device vde1, logical block 2910157406
[454841.463603] Buffer I/O error on device vde1, logical block 2910157407
[454841.465096] Buffer I/O error on device vde1, logical block 2910157408
[454841.466715] Buffer I/O error on device vde1, logical block 2910157409
[454841.468061] Buffer I/O error on device vde1, logical block 2910157410
[454841.469534] Buffer I/O error on device vde1, logical block 2910157411
[454841.470388] Buffer I/O error on device vde1, logical block 2910157412
[454841.471189] Buffer I/O error on device vde1, logical block 2910157413
[454841.472272] Buffer I/O error on device vde1, logical block 2910157414
[454841.486661] EXT4-fs warning (device vde1): ext4_end_bio:349: I/O error 10 writing to inode 363726719 starting block 2910158028)
[454846.579262] blk_update_request: I/O error, dev vde, sector 23281099800 op 0x1:(WRITE) flags 0x0 phys_seg 27 prio class 0
[454846.580206] EXT4-fs warning (device vde1): ext4_end_bio:349: I/O error 10 writing to inode 363726729 starting block 2910137477)
[454846.580212] EXT4-fs warning (device vde1): ext4_end_bio:349: I/O error 10 writing to inode 363726729 starting block 2910137502)
[454846.580231] blk_update_request: I/O error, dev vde, sector 23281100016 op 0x1:(WRITE) flags 0x4000 phys_seg 254 prio class 0
[454846.582065] Buffer I/O error on device vde1, logical block 2910137221
[454846.582992] Buffer I/O error on device vde1, logical block 2910137222
[454846.583926] Buffer I/O error on device vde1, logical block 2910137223
[454846.584697] Buffer I/O error on device vde1, logical block 2910137224
[454846.585543] Buffer I/O error on device vde1, logical block 2910137225
[454846.586573] Buffer I/O error on device vde1, logical block 2910137226
[454846.587367] Buffer I/O error on device vde1, logical block 2910137227
[454846.588141] Buffer I/O error on device vde1, logical block 2910137228
[454846.588861] Buffer I/O error on device vde1, logical block 2910137229
[454846.589843] Buffer I/O error on device vde1, logical block 2910137230
[454846.679274] EXT4-fs warning (device vde1): ext4_end_bio:349: I/O error 10 writing to inode 363726730 starting block 2910137758)
[454890.033586] blk_update_request: I/O error, dev vde, sector 8844595184 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[454890.034461] EXT4-fs warning (device vde1): ext4_end_bio:349: I/O error 10 writing to inode 337383482 starting block 1105574400)
[454890.034475] blk_update_request: I/O error, dev vde, sector 8844595200 op 0x1:(WRITE) flags 0x4000 phys_seg 254 prio class 0
[454890.081856] EXT4-fs warning (device vde1): ext4_end_bio:349: I/O error 10 writing to inode 337383482 starting block 1105574657)
[454890.082167] Buffer I/O error on device vde1, logical block 1105574144
[454890.083020] Buffer I/O error on device vde1, logical block 1105574145
[454890.083927] Buffer I/O error on device vde1, logical block 1105574146
[454890.084724] Buffer I/O error on device vde1, logical block 1105574147
[454890.085560] Buffer I/O error on device vde1, logical block 1105574148
[454890.086413] Buffer I/O error on device vde1, logical block 1105574149
[454890.087190] Buffer I/O error on device vde1, logical block 1105574150
[454890.087959] Buffer I/O error on device vde1, logical block 1105574151
[454890.088672] Buffer I/O error on device vde1, logical block 1105574152
[454890.089426] Buffer I/O error on device vde1, logical block 1105574153
[502429.263152] blk_update_request: I/O error, dev vdc, sector 4371581952 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[502429.265592] blk_update_request: I/O error, dev vdc, sector 4371582976 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[502429.266449] blk_update_request: I/O error, dev vdc, sector 4371584000 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[502429.267305] blk_update_request: I/O error, dev vdc, sector 4371585024 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[502429.268211] blk_update_request: I/O error, dev vdc, sector 4371586048 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[502429.269015] blk_update_request: I/O error, dev vdc, sector 4371587072 op 0x1:(WRITE) flags 0x0 phys_seg 127 prio class 0
[513402.941444] blk_update_request: I/O error, dev vda, sector 1401241600 op 0x1:(WRITE) flags 0x0 phys_seg 87 prio class 0
[513402.942215] blk_update_request: I/O error, dev vda, sector 1401242296 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[513402.943011] blk_update_request: I/O error, dev vda, sector 1401242312 op 0x1:(WRITE) flags 0x0 phys_seg 39 prio class 0
[513402.943751] blk_update_request: I/O error, dev vda, sector 1401242624 op 0x1:(WRITE) flags 0x0 phys_seg 94 prio class 0
[513402.944439] blk_update_request: I/O error, dev vda, sector 1401243424 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[513402.945132] blk_update_request: I/O error, dev vda, sector 1401243456 op 0x1:(WRITE) flags 0x0 phys_seg 23 prio class 0
[513840.231868] blk_update_request: I/O error, dev vdc, sector 965668864 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[513840.233526] blk_update_request: I/O error, dev vdc, sector 965669888 op 0x1:(WRITE) flags 0x0 phys_seg 116 prio class 0
[513840.234270] blk_update_request: I/O error, dev vdc, sector 965670912 op 0x1:(WRITE) flags 0x0 phys_seg 8 prio class 0
[513840.235015] blk_update_request: I/O error, dev vdc, sector 965670976 op 0x1:(WRITE) flags 0x0 phys_seg 2 prio class 0
[513840.235761] blk_update_request: I/O error, dev vdc, sector 965670992 op 0x1:(WRITE) flags 0x0 phys_seg 118 prio class 0
```

lsblk output:

```
NAME      MAJ:MIN RM  SIZE RO TYPE   MOUNTPOINT
loop0       7:0    0  200G  0 loop   /srv/dev-disk-by-label-Array/CCTV1
loop1       7:1    0  200G  0 loop   /srv/dev-disk-by-label-Array/CCTV2
loop2       7:2    0  200G  0 loop   /srv/dev-disk-by-label-Array/CCTV3
loop3       7:3    0  200G  0 loop   /srv/dev-disk-by-label-Array/CCTV4
loop4       7:4    0  200G  0 loop   /srv/dev-disk-by-label-Array/CCTV5
sda         8:0    0    6G  0 disk
├─sda1      8:1    0    4G  0 part   /
├─sda2      8:2    0    1K  0 part
└─sda5      8:5    0    2G  0 part   [SWAP]
sr0        11:0    1  580M  0 rom
vda       254:0    0  2.7T  0 disk
└─md127     9:127  0  5.5T  0 raid10 /srv/dev-disk-by-label-Array
vdb       254:16   0  2.7T  0 disk
└─md127     9:127  0  5.5T  0 raid10 /srv/dev-disk-by-label-Array
vdc       254:32   0  2.7T  0 disk
└─md127     9:127  0  5.5T  0 raid10 /srv/dev-disk-by-label-Array
vdd       254:48   0  2.7T  0 disk
└─md127     9:127  0  5.5T  0 raid10 /srv/dev-disk-by-label-Array
vde       254:64   0 10.9T  0 disk
└─vde1    254:65   0 10.9T  0 part   /srv/dev-disk-by-label-WD12
```

I honestly doubt that all the disks are failing at once, even the brand new one, so my fear is that there's something wrong with the VM, or something got messed up with the upgrade to Proxmox 7.

Can anyone give me some hints on what could be happening here?
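
For reference, here is a rough sketch of how the kernel log above could be tallied per device and operation, which makes it easier to see that every `blk_update_request` line in the excerpt is a WRITE and that all five virtio disks (vda through vde) are affected. The function name `tally_io_errors` and the `sample` string are only illustrative assumptions; the regular expression is written against the line format shown in this post and may need adjusting for other kernel versions.

```python
import re
from collections import Counter

# Matches block-layer error lines in the format pasted above, e.g.
# "blk_update_request: I/O error, dev vdc, sector 2561749528 op 0x1:(WRITE) ..."
LINE_RE = re.compile(
    r"blk_update_request: I/O error, dev (?P<dev>\w+), "
    r"sector \d+ op 0x[0-9a-fA-F]+:\((?P<op>\w+)\)"
)


def tally_io_errors(dmesg_text):
    """Count block-layer I/O errors per (device, operation) pair."""
    counts = Counter()
    for line in dmesg_text.splitlines():
        match = LINE_RE.search(line)
        if match:
            counts[(match.group("dev"), match.group("op"))] += 1
    return counts


# A few lines copied from the log above; the last one is deliberately
# a different message type that the pattern should skip.
sample = """\
[415979.193504] blk_update_request: I/O error, dev vdc, sector 2561749528 op 0x1:(WRITE) flags 0x0 phys_seg 61 prio class 0
[415987.268192] blk_update_request: I/O error, dev vdb, sector 4099882560 op 0x1:(WRITE) flags 0x0 phys_seg 56 prio class 0
[415987.732732] blk_update_request: I/O error, dev vda, sector 4895432704 op 0x1:(WRITE) flags 0x0 phys_seg 128 prio class 0
[454841.456051] blk_update_request: I/O error, dev vde, sector 23281261272 op 0x1:(WRITE) flags 0x0 phys_seg 113 prio class 0
[416548.442222] Buffer I/O error on device md127, logical block 1094829801
"""

for (dev, op), n in sorted(tally_io_errors(sample).items()):
    print(f"{dev} {op}: {n}")
```

The same function could be fed the full `dmesg` text saved to a file inside the OMV guest; only the `blk_update_request` lines are counted, so the EXT4 and buffer warnings are ignored.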
