Hello!

We have a 4-node cluster with Proxmox and Ceph installed on every node.

The Ceph cluster runs on a separate 10G network and on 15K RPM HDDs.

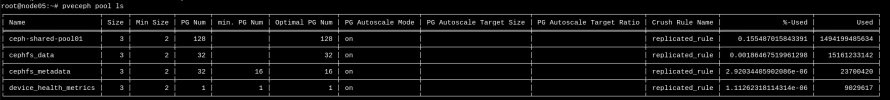

One of the nodes recently failed, and all of the VMs whose disks were on that Ceph storage either froze or had a kernel panic. After Ceph finished rebuilding and recovered, everything was fine, but we are wondering whether a configuration issue caused the VMs to freeze instead of continuing to run. All of our storage is in a 3/2 pool (size 3, min_size 2).
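
To make our expectation concrete, here is a minimal sketch of how we understood a 3/2 pool to behave. It is only our mental model, not Ceph's actual logic: the function name `pg_accepts_io` is made up for illustration, and we are assuming that replicas are spread across hosts, so a single node failure costs any placement group at most one replica.

```python
def pg_accepts_io(size: int, min_size: int, lost_replicas: int) -> bool:
    """Return True if a placement group should still serve I/O.

    Assumption (our understanding, not verified against Ceph internals):
    a PG stays active while at least `min_size` of its `size` replicas
    are still available.
    """
    if not 0 <= lost_replicas <= size:
        raise ValueError("lost_replicas must be between 0 and size")
    return size - lost_replicas >= min_size


# Our pool: size=3, min_size=2.
# One failed node -> one lost replica per affected PG (assumed).
print(pg_accepts_io(size=3, min_size=2, lost_replicas=1))  # True
# Two lost replicas would drop below min_size and block I/O.
print(pg_accepts_io(size=3, min_size=2, lost_replicas=2))  # False
```

By this reasoning, losing one node out of four should have left every placement group with two replicas, which is why the freeze surprised us.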

If we have misunderstood how Ceph works, please suggest what we should do, or point us to a reasonable alternative. We want our VMs to keep running unless all of our nodes fail. (We have also set up HA between the nodes.)
