Hi all,

As a preface, I have limited knowledge here, so please bear with me.

I have a VM that has suddenly decided not to start. Below is the error I see in the VM's task history, and below that are the entries I see in the node's syslog.

As far as troubleshooting goes, other than changing the VM to use less memory (I thought it might be a resource issue, which it doesn't appear to be), I have no real idea what else to try besides shutting down everything on the node, rebooting it, and starting again, which I'd ideally like to avoid.
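For anyone following along, here is a minimal sketch of how the memory theory could be checked from the node's shell. It assumes a Linux host that exposes `MemAvailable` in `/proc/meminfo`; `VM_MEM_MIB` and the `enough_memory` helper are hypothetical names, and the default of 81920 MiB is taken from the `-m 81920` option in the start command below.

```shell
#!/usr/bin/env bash
# Compare the host's available memory with the memory the VM asks for at start.
# VM_MEM_MIB is a hypothetical variable; 81920 is the "-m 81920" value from the log.
set -euo pipefail

# enough_memory AVAIL_KIB WANT_MIB
# Prints "ok" if AVAIL_KIB (in KiB) covers WANT_MIB (in MiB), else the shortfall.
enough_memory() {
  local avail_kib=$1 want_mib=$2
  local avail_mib=$(( avail_kib / 1024 ))
  if (( avail_mib >= want_mib )); then
    echo "ok"
  else
    echo "short by $(( want_mib - avail_mib )) MiB"
  fi
}

VM_MEM_MIB=${VM_MEM_MIB:-81920}
# The kernel reports MemAvailable in kB (KiB) in the second column.
avail_kib=$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)
echo "MemAvailable: $(( avail_kib / 1024 )) MiB, VM wants: ${VM_MEM_MIB} MiB"
enough_memory "$avail_kib" "$VM_MEM_MIB"
```

This only rules out an outright shortage. The VM also has a PCI device passed through (the `vfio-pci` entries in the command), and with passthrough all guest memory is allocated and pinned when the VM starts, so a large memory size can make startup slow even when enough memory is free.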

I'd appreciate any input. If more information is needed, explain it like I'm five and I'll get it for you.

root@pve-atlas:~# pveversion -v

proxmox-ve: 7.4-1 (running kernel: 5.15.102-1-pve)

pve-manager: 7.4-3 (running version: 7.4-3/9002ab8a)

pve-kernel-5.15: 7.3-3

pve-kernel-5.15.102-1-pve: 5.15.102-1

pve-kernel-5.15.85-1-pve: 5.15.85-1

pve-kernel-5.15.83-1-pve: 5.15.83-1

pve-kernel-5.15.30-2-pve: 5.15.30-3

ceph-fuse: 15.2.16-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.4

libproxmox-backup-qemu0: 1.3.1-1

libproxmox-rs-perl: 0.2.1

libpve-access-control: 7.4-2

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-3

libpve-guest-common-perl: 4.2-4

libpve-http-server-perl: 4.2-1

libpve-rs-perl: 0.7.5

libpve-storage-perl: 7.4-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-2

lxcfs: 5.0.3-pve1

novnc-pve: 1.4.0-1

proxmox-backup-client: 2.3.3-1

proxmox-backup-file-restore: 2.3.3-1

proxmox-kernel-helper: 7.4-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.1-1

proxmox-widget-toolkit: 3.6.3

pve-cluster: 7.3-3

pve-container: 4.4-3

pve-docs: 7.4-2

pve-edk2-firmware: 3.20221111-2

pve-firewall: 4.3-1

pve-firmware: 3.6-4

pve-ha-manager: 3.6.0

pve-i18n: 2.11-1

pve-qemu-kvm: 7.2.0-8

pve-xtermjs: 4.16.0-1

qemu-server: 7.4-2

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+3

vncterm: 1.7-1

zfsutils-linux: 2.1.9-pve1

root@pve-atlas:~#

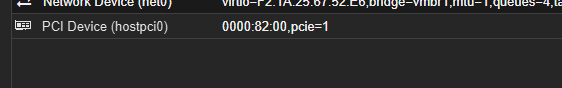

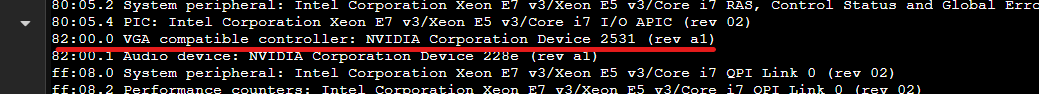

TASK ERROR: start failed: command '/usr/bin/taskset --cpu-list --all-tasks 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31 /usr/bin/kvm -id 30002 -name 'NiceDCV-Main,debug-threads=on' -no-shutdown -chardev 'socket,id=qmp,path=/var/run/qemu-server/30002.qmp,server=on,wait=off' -mon 'chardev=qmp,mode=control' -chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' -mon 'chardev=qmp-event,mode=control' -pidfile /var/run/qemu-server/30002.pid -daemonize -smbios 'type=1,uuid=98d99f3c-1bd0-49fe-8d99-8e621524d520' -smp '8,sockets=1,cores=8,maxcpus=8' -nodefaults -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' -vnc 'unix:/var/run/qemu-server/30002.vnc,password=on' -cpu 'host,+aes,kvm=off,+kvm_pv_eoi,+kvm_pv_unhalt,+pcid,+pdpe1gb,+spec-ctrl' -m 81920 -object 'memory-backend-ram,id=ram-node0,size=81920M' -numa 'node,nodeid=0,cpus=0-7,memdev=ram-node0' -object 'iothread,id=iothread-virtioscsi0' -object 'iothread,id=iothread-virtioscsi1' -readconfig /usr/share/qemu-server/pve-q35-4.0.cfg -device 'vmgenid,guid=4505f111-bcfa-458c-a84a-7dda368215d8' -device 'usb-tablet,id=tablet,bus=ehci.0,port=1' -device 'vfio-pci,host=0000:82:00.0,id=hostpci0.0,bus=ich9-pcie-port-1,addr=0x0.0,multifunction=on' -device 'vfio-pci,host=0000:82:00.1,id=hostpci0.1,bus=ich9-pcie-port-1,addr=0x0.1' -device 'VGA,id=vga,bus=pcie.0,addr=0x1' -iscsi 'initiator-name=iqn.1993-08.org.debian:01:3b4faca4b27b' -drive 'if=none,id=drive-ide2,media=cdrom,aio=io_uring' -device 'ide-cd,bus=ide.1,unit=0,drive=drive-ide2,id=ide2,bootindex=101' -device 'virtio-scsi-pci,id=virtioscsi0,bus=pci.3,addr=0x1,iothread=iothread-virtioscsi0' -drive 'file=/dev/zvol/configssd/vms/vm-30002-disk-0,if=none,id=drive-scsi0,format=raw,cache=none,aio=io_uring,detect-zeroes=on' -device 'scsi-hd,bus=virtioscsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,rotation_rate=1,bootindex=100' -device 'virtio-scsi-pci,id=virtioscsi1,bus=pci.3,addr=0x2,iothread=iothread-virtioscsi1' -drive 'file=/dev/zvol/configssd/vms/vm-30002-disk-1,if=none,id=drive-scsi1,format=raw,cache=none,aio=io_uring,detect-zeroes=on' -device 'scsi-hd,bus=virtioscsi1.0,channel=0,scsi-id=0,lun=1,drive=drive-scsi1,id=scsi1,rotation_rate=1' -netdev 'type=tap,id=net0,ifname=tap30002i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on,queues=4' -device 'virtio-net-pci,mac=F2:1A:25:67:52:E6,netdev=net0,bus=pci.0,addr=0x12,id=net0,vectors=10,mq=on,packed=on,rx_queue_size=1024,tx_queue_size=1024,bootindex=102,host_mtu=9000' -machine 'type=q35+pve0'' failed: got timeout

Feb 05 09:20:52 pve-atlas pvedaemon[390825]: start VM 30002: UPID:pve-atlas:0005F6A9:F294E350:67A31F64:qmstart:30002:root@pam:

Feb 05 09:20:52 pve-atlas pvedaemon[3960192]: <root@pam> starting task UPID:pve-atlas:0005F6A9:F294E350:67A31F64:qmstart:30002:root@pam:

Feb 05 09:20:52 pve-atlas systemd[1]: Started 30002.scope.

Feb 05 09:20:53 pve-atlas systemd-udevd[391049]: Using default interface naming scheme 'v247'.

Feb 05 09:20:53 pve-atlas systemd-udevd[391049]: ethtool: autonegotiation is unset or enabled, the speed and duplex are not writable.

Feb 05 09:20:53 pve-atlas kernel: device tap30002i0 entered promiscuous mode

Feb 05 09:20:53 pve-atlas kernel: vmbr1: port 8(tap30002i0) entered blocking state

Feb 05 09:20:53 pve-atlas kernel: vmbr1: port 8(tap30002i0) entered disabled state

Feb 05 09:20:53 pve-atlas kernel: vmbr1: port 8(tap30002i0) entered blocking state

Feb 05 09:20:53 pve-atlas kernel: vmbr1: port 8(tap30002i0) entered forwarding state

Feb 05 09:21:01 pve-atlas pvedaemon[3960192]: VM 30002 qmp command failed - VM 30002 qmp command 'query-proxmox-support' failed - got timeout

Feb 05 09:21:10 pve-atlas pvestatd[7259]: VM 30002 qmp command failed - VM 30002 qmp command 'query-proxmox-support' failed - unable to connect to VM 30002 qmp socket - timeout after 51 retries

Feb 05 09:21:11 pve-atlas pvestatd[7259]: status update time (8.625 seconds)

Feb 05 09:21:20 pve-atlas pvestatd[7259]: VM 30002 qmp command failed - VM 30002 qmp command 'query-proxmox-support' failed - unable to connect to VM 30002 qmp socket - timeout after 51 retries

Feb 05 09:21:20 pve-atlas pvestatd[7259]: status update time (8.621 seconds)

Feb 05 09:21:30 pve-atlas pvestatd[7259]: VM 30002 qmp command failed - VM 30002 qmp command 'query-proxmox-support' failed - unable to connect to VM 30002 qmp socket - timeout after 51 retries

Feb 05 09:21:31 pve-atlas pvestatd[7259]: status update time (8.696 seconds)

Feb 05 09:21:40 pve-atlas pvestatd[7259]: VM 30002 qmp command failed - VM 30002 qmp command 'query-proxmox-support' failed - unable to connect to VM 30002 qmp socket - timeout after 51 retries

Feb 05 09:21:41 pve-atlas pvestatd[7259]: status update time (8.683 seconds)

Feb 05 09:21:50 pve-atlas pvestatd[7259]: VM 30002 qmp command failed - VM 30002 qmp command 'query-proxmox-support' failed - unable to connect to VM 30002 qmp socket - timeout after 51 retries

Feb 05 09:21:50 pve-atlas pvestatd[7259]: status update time (8.691 seconds)

Feb 05 09:22:00 pve-atlas pvestatd[7259]: VM 30002 qmp command failed - VM 30002 qmp command 'query-proxmox-support' failed - unable to connect to VM 30002 qmp socket - timeout after 51 retries

Feb 05 09:22:01 pve-atlas pvestatd[7259]: status update time (8.752 seconds)

Feb 05 09:22:10 pve-atlas pvestatd[7259]: VM 30002 qmp command failed - VM 30002 qmp command 'query-proxmox-support' failed - unable to connect to VM 30002 qmp socket - timeout after 51 retries

Feb 05 09:22:11 pve-atlas pvestatd[7259]: status update time (8.689 seconds)

Feb 05 09:22:12 pve-atlas pvedaemon[390825]: start failed: command '/usr/bin/taskset --cpu-list --all-tasks 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31 /usr/bin/kvm -id 30002 -name 'NiceDCV-Main,debug-threads=on' -no-shutdown -chardev 'socket,id=qmp,path=/var/run/qemu-server/30002.qmp,server=on,wait=off' -mon 'chardev=qmp,mode=control' -chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' -mon 'chardev=qmp-event,mode=control' -pidfile /var/run/qemu-server/30002.pid -daemonize -smbios 'type=1,uuid=98d99f3c-1bd0-49fe-8d99-8e621524d520' -smp '8,sockets=1,cores=8,maxcpus=8' -nodefaults -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' -vnc 'unix:/var/run/qemu-server/30002.vnc,password=on' -cpu 'host,+aes,kvm=off,+kvm_pv_eoi,+kvm_pv_unhalt,+pcid,+pdpe1gb,+spec-ctrl' -m 81920 -object 'memory-backend-ram,id=ram-node0,size=81920M' -numa 'node,nodeid=0,cpus=0-7,memdev=ram-node0' -object 'iothread,id=iothread-virtioscsi0' -object 'iothread,id=iothread-virtioscsi1' -readconfig /usr/share/qemu-server/pve-q35-4.0.cfg -device 'vmgenid,guid=4505f111-bcfa-458c-a84a-7dda368215d8' -device 'usb-tablet,id=tablet,bus=ehci.0,port=1' -device 'vfio-pci,host=0000:82:00.0,id=hostpci0.0,bus=ich9-pcie-port-1,addr=0x0.0,multifunction=on' -device 'vfio-pci,host=0000:82:00.1,id=hostpci0.1,bus=ich9-pcie-port-1,addr=0x0.1' -device 'VGA,id=vga,bus=pcie.0,addr=0x1' -iscsi 'initiator-name=iqn.1993-08.org.debian:01:3b4faca4b27b' -drive 'if=none,id=drive-ide2,media=cdrom,aio=io_uring' -device 'ide-cd,bus=ide.1,unit=0,drive=drive-ide2,id=ide2,bootindex=101' -device 'virtio-scsi-pci,id=virtioscsi0,bus=pci.3,addr=0x1,iothread=iothread-virtioscsi0' -drive 'file=/dev/zvol/configssd/vms/vm-30002-disk-0,if=none,id=drive-scsi0,format=raw,cache=none,aio=io_uring,detect-zeroes=on' -device 'scsi-hd,bus=virtioscsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,rotation_rate=1,bootindex=100' -device 'virtio-scsi-pci,id=virtioscsi1,bus=pci.3,addr=0x2,iothread=iothread-virtioscsi1' -drive 'file=/dev/zvol/configssd/vms/vm-30002-disk-1,if=none,id=drive-scsi1,format=raw,cache=none,aio=io_uring,detect-zeroes=on' -device 'scsi-hd,bus=virtioscsi1.0,channel=0,scsi-id=0,lun=1,drive=drive-scsi1,id=scsi1,rotation_rate=1' -netdev 'type=tap,id=net0,ifname=tap30002i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on,queues=4' -device 'virtio-net-pci,mac=F2:1A:25:67:52:E6,netdev=net0,bus=pci.0,addr=0x12,id=net0,vectors=10,mq=on,packed=on,rx_queue_size=1024,tx_queue_size=1024,bootindex=102,host_mtu=9000' -machine 'type=q35+pve0'' failed: got timeout