I need a bit of guidance on where I have gone wrong with storage.

I have had Proxmox up and running for about 6 months and everything was OK until this week, when one of my VMs suddenly started to fail. Looking into it, it seems to be a disk space issue, and I'm getting kernel errors soon after restarting the failed VM.

I really don't understand how storage works in Proxmox. I thought I had configured around 50GB for the system and the rest for VM storage, and then split that remaining storage between the only two VMs I need to host, using up pretty much all of it. But now the disks look to have grown larger than what I allocated?

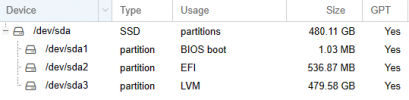

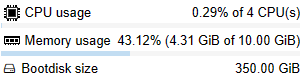

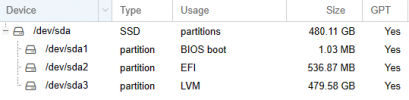

I have only one 480GB SSD in a NUC with the following setup:

Under Storage I have:

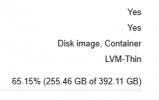

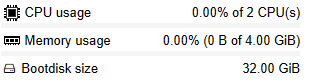

local at 52GB:

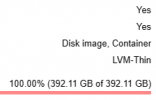

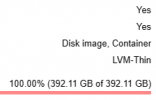

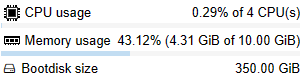

and local-lvm at 392GB.

Adding those two together should still leave a bit of unallocated space (around 35GB), shouldn't it?

For the two VMs I have allocated:

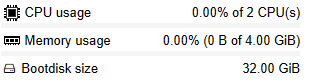

- vm100: 32GB
- vm101: 350GB

So again, adding those together should leave a little free space (around 10GB) out of the 392GB.

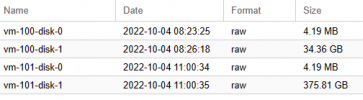

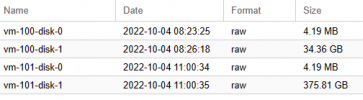

But here is where I am confused: looking at the VM Disks view, it looks like the 32GB disk has grown to 34GB and the 350GB disk has grown to 375GB.

So I am assuming this is where the issue is: the containing volume has no free space left for the disks to grow into. But shouldn't they be prevented from growing beyond what was allocated?
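
A quick sanity check on those numbers, under two assumptions I haven't verified: that the disks were created as 32 and 350 GiB (binary units), and that both the VM Disks view and the 392GB local-lvm figure are shown in decimal GB. Under those assumptions the disks haven't grown at all, because 32 GiB is simply 34.36 GB. The names `gib_to_gb`, `vm_disks_gib` and `pool_gb` are just illustrative:

```python
GIB = 1024 ** 3  # bytes in one GiB (binary unit)
GB = 1000 ** 3   # bytes in one GB (decimal unit)


def gib_to_gb(size_gib: float) -> float:
    """Convert a size in GiB to decimal GB."""
    return size_gib * GIB / GB


# Disk sizes as entered when the VMs were created (assumed to be GiB).
vm_disks_gib = {"vm100": 32, "vm101": 350}

# local-lvm size as shown under Storage (assumed to be decimal GB).
pool_gb = 392

disk_gb = {vm: round(gib_to_gb(size), 2) for vm, size in vm_disks_gib.items()}
total_gb = round(sum(disk_gb.values()), 2)

print(disk_gb)                           # {'vm100': 34.36, 'vm101': 375.81}
print(total_gb, "GB of", pool_gb, "GB")  # 410.17 GB of 392 GB
print("overcommitted by", round(total_gb - pool_gb, 2), "GB")  # overcommitted by 18.17 GB
```

If those assumptions hold, the two disks together need roughly 410GB from a 392GB thin pool, i.e. the pool is overcommitted by about 18GB. LVM-thin allows volumes whose combined size exceeds the pool, so the shortfall only shows up once enough blocks have actually been written.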

Within each VM, vm100 is only 26% used and vm101 is 68% used, so neither is close to filling up, and I don't really understand what is going on.
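
The in-guest figures (26% and 68%) don't necessarily match how much of the thin pool each disk occupies. Below is a toy model of why, assuming discard is not enabled on the virtual disks (or the guests never issue TRIM): the pool backs a block the first time the guest writes to it, and a file deleted inside the guest only returns that block to the pool if the guest sends a discard. It is a simplified illustration with hypothetical class names, not how LVM-thin actually manages its chunks:

```python
class ThinPool:
    """Toy thin pool: a fixed number of physical blocks shared by thin volumes."""

    def __init__(self, capacity_blocks: int):
        self.capacity = capacity_blocks
        self.used = 0  # physical blocks currently handed out

    def take_block(self) -> None:
        if self.used >= self.capacity:
            raise RuntimeError("thin pool out of data space")
        self.used += 1

    def return_block(self) -> None:
        self.used -= 1


class ThinVolume:
    """Toy thin volume (a VM disk) carved out of a ThinPool."""

    def __init__(self, pool: ThinPool, size_blocks: int, discard: bool):
        self.pool = pool
        self.size_blocks = size_blocks
        self.discard = discard            # does the guest pass deletes down as discards?
        self.allocated: set[int] = set()  # virtual blocks backed by pool space
        self.in_use: set[int] = set()     # blocks the guest filesystem counts as used

    def write(self, block: int) -> None:
        if not 0 <= block < self.size_blocks:
            raise IndexError("write past the end of the virtual disk")
        if block not in self.allocated:
            self.pool.take_block()        # first write to a block consumes pool space
            self.allocated.add(block)
        self.in_use.add(block)

    def delete(self, block: int) -> None:
        if block in self.in_use:
            self.in_use.remove(block)     # the guest now sees this block as free
            if self.discard:
                self.allocated.remove(block)  # only a discard gives space back to the pool
                self.pool.return_block()


def simulate(discard: bool) -> tuple[int, int]:
    """Return (blocks used inside the guest, blocks used in the pool)."""
    pool = ThinPool(capacity_blocks=100)
    disk = ThinVolume(pool, size_blocks=100, discard=discard)
    for b in range(60):       # guest writes 60 blocks of files...
        disk.write(b)
    for b in range(60):       # ...deletes all of them...
        disk.delete(b)
    for b in range(40, 100):  # ...then writes 60 blocks, partly in fresh locations
        disk.write(b)
    return len(disk.in_use), pool.used


print(simulate(discard=False))  # (60, 100)
print(simulate(discard=True))   # (60, 60)
```

In this run the guest sees 60 of 100 blocks in use either way, but without discard the pool ends up 100% allocated. In Proxmox the relevant settings are the `Discard` option on the VM's hard disk and something like `fstrim` inside a Linux guest; treat that as something to check rather than a confirmed fix for this setup.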

These are the errors I'm getting soon after restarting the failed VM:

`proxmox kernel: device-mapper: thin: 253:4: reached low water mark for data device: sending event.`

`proxmox kernel: device-mapper: thin: 253:4: switching pool to out-of-data-space (queue IO) mode`

Any advice would be greatly appreciated.