Hi everyone,

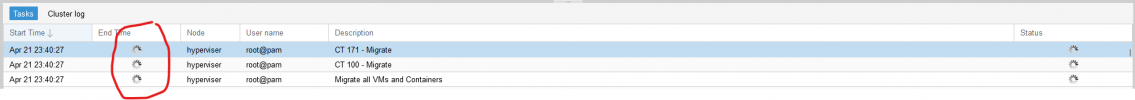

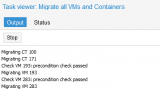

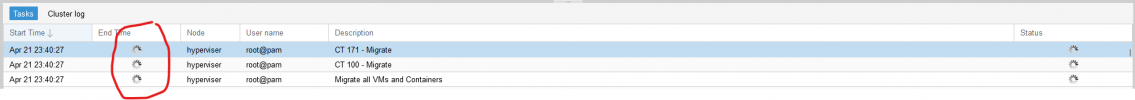

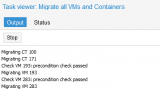

I started a bulk migration of all my CTs (containers) from PVE1 to PVE2. The task has been running for 8 hours and is still running, but the log says the storage was imported successfully, and I can see my VM/CT storage on both PVE nodes. The containers' storage on PVE2 does not look fully imported, though: about 1 GB appears to be missing for each of the two CTs. The migration process seems to be stuck.

What should I do: keep waiting for the migration to finish, or abort and start again?

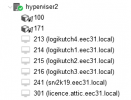

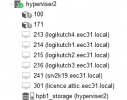

Code:

root@hyperviser:~# zfs list

NAME USED AVAIL REFER MOUNTPOINT

rpool 115G 315G 104K /rpool

rpool/ROOT 20.3G 315G 96K /rpool/ROOT

rpool/ROOT/pve-1 20.3G 315G 20.3G /

rpool/data 96K 315G 96K /rpool/data

rpool/vm-193-disk-0 17.6G 315G 17.6G -

rpool/vm-283-disk-0 77.2G 315G 77.2G -

storage 4.30T 875G 140K /storage

storage/data 4.30T 875G 1.44T /storage/data

storage/data/subvol-100-disk-0 1.93T 875G 1.93T /storage/data/subvol-100-disk-0

storage/data/subvol-171-disk-0 888G 875G 888G /storage/data/subvol-171-disk-0

storage/data/vm-102-disk-0 63.9G 875G 63.9G -

Code:

root@hyperviser2:~# zfs list

NAME USED AVAIL REFER MOUNTPOINT

rpool 156G 72.6G 104K /rpool

rpool/ROOT 30.2G 72.6G 96K /rpool/ROOT

rpool/ROOT/pve-1 30.2G 72.6G 30.2G /

rpool/data 96K 72.6G 96K /rpool/data

rpool/vm-193-disk-0 17.6G 72.6G 17.6G -

rpool/vm-241-disk-0 108G 111G 69.9G -

storage 5.65T 1.49T 100K /storage

storage/data 5.65T 1.49T 2.26T /storage/data

storage/data/subvol-100-disk-0 1.92T 1.15T 1.92T /storage/data/subvol-100-disk-0

storage/data/subvol-171-disk-0 887G 1013G 887G /storage/data/subvol-171-disk-0 -
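To put a number on the gap between the two listings, here is a rough sketch that subtracts two human-readable `zfs list` sizes and prints the difference in GiB. The function name `size_gap_gib` is made up for illustration, and it only understands the K/M/G/T suffixes. Since `zfs list` rounds these values, the result is approximate; `zfs list -Hp -o name,used` would give exact byte counts instead. USED can also differ between two pools for reasons other than missing data (pool layout or compression, for example), so a small gap does not prove the import is incomplete.

```shell
#!/bin/sh
# size_gap_gib A B: print (A - B) in GiB for two zfs-style sizes such as 888G or 1.93T.
# Hypothetical helper; handles only the K, M, G and T suffixes.
size_gap_gib() {
    awk -v a="$1" -v b="$2" '
        # Convert a size like "1.93T" to a number of GiB.
        function gib(s,    unit, value) {
            unit = substr(s, length(s), 1)
            value = substr(s, 1, length(s) - 1) + 0
            if (unit == "T") return value * 1024
            if (unit == "G") return value
            if (unit == "M") return value / 1024
            if (unit == "K") return value / (1024 * 1024)
            return 0  # unknown suffix: treated as 0 in this sketch
        }
        BEGIN { printf "%.1f\n", gib(a) - gib(b) }'
}

# USED values taken from the two listings (source node first, target node second).
size_gap_gib 888G 887G     # subvol-171-disk-0
size_gap_gib 1.93T 1.92T   # subvol-100-disk-0
```

With the rounded values shown here, the gap works out to roughly 1 GiB for CT 171 and roughly 10 GiB for CT 100.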

Code:

2023-04-22 04:34:15 04:34:15 885G storage/data/subvol-171-disk-0@__migration__

2023-04-22 04:34:16 04:34:16 886G storage/data/subvol-171-disk-0@__migration__

2023-04-22 04:34:22 successfully imported 'storage:subvol-171-disk-0'

2023-04-22 04:34:23 volume 'storage:subvol-171-disk-0' is 'storage:subvol-171-disk-0' on the target

2023-04-22 04:34:23 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=hyperviser2' root@192.168.1.111 pvesr set-state 171 \''{}'\' -

Code:

2023-04-22 07:12:32 07:12:32 1.91T storage/data/subvol-100-disk-0@__migration__

2023-04-22 07:12:33 07:12:33 1.91T storage/data/subvol-100-disk-0@__migration__

2023-04-22 07:12:44 successfully imported 'storage:subvol-100-disk-0'

2023-04-22 07:12:45 volume 'storage:subvol-100-disk-0' is 'storage:subvol-100-disk-0' on the target

2023-04-22 07:12:45 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=hyperviser2' root@192.168.1.111 pvesr set-state 100 \''{}'\' -

CT config:

Code:

root@hyperviser:~# cat /etc/pve/nodes/hyperviser/lxc/100.conf

arch: amd64

cores: 6

cpuunits: 10000

features: nesting=1

hostname: srvdc.eec31.local

lock: migrate

memory: 16384

nameserver: 192.168.1.101 192.168.31.10 192.168.1.120

net0: name=eth0,bridge=vmbr0,firewall=1,gw=192.168.1.1,hwaddr=F2:5A:DA:AB:81:C2,ip=192.168.1.101/24,type=veth

net1: name=net31,bridge=vmbr31,firewall=1,hwaddr=72:7E:E5:D6:B3:C6,ip=192.168.31.10/24,type=veth

onboot: 0

ostype: ubuntu

protection: 1

rootfs: storage:subvol-100-disk-0,acl=1,size=3148G

searchdomain: eec31.local

swap: 8192
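The `lock: migrate` line in this config shows the container is still locked by the migration task. A small sketch for checking that on any CT config file, assuming the lock is stored as a plain `lock: <value>` line as above (the function name `ct_lock` is made up for illustration):

```shell
#!/bin/sh
# ct_lock FILE: print the value of the first "lock:" line in a CT config file,
# or nothing if the config has no lock. Hypothetical helper for illustration.
ct_lock() {
    sed -n 's/^lock: *//p' "$1" | head -n 1
}

# Example against the config path used above (only if the file exists here).
conf=/etc/pve/nodes/hyperviser/lxc/100.conf
if [ -f "$conf" ]; then
    echo "CT 100 lock: $(ct_lock "$conf")"
fi
```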