Hello,

I'm trying to create a VM template to use for spinning up new VMs:

```bash
#!/bin/bash

# download the image
wget https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img

# create a new VM
qm create 9000 --memory 2048 --net0 virtio,bridge=vmbr0

# import the downloaded disk to local-lvm storage
qm importdisk 9000 jammy-server-cloudimg-amd64.img local-lvm

# finally, attach the new disk to the VM as a SCSI drive
qm set 9000 --scsihw virtio-scsi-pci --scsi0 local-lvm:vm-9000-disk-0

# configure a CD-ROM drive, used to pass the Cloud-Init data to the VM
qm set 9000 --ide2 local-lvm:cloudinit

# boot directly from the Cloud-Init image
qm set 9000 --boot c --bootdisk scsi0

# configure a serial console and use it as the display
qm set 9000 --serial0 socket --vga serial0

# transform the VM into a template
qm template 9000

# deploy a VM from the template and set its Cloud-Init options
qm clone 9000 123 --name ubuntu-test
qm set 123 --sshkeys artkrz.pub   # path to an OpenSSH public key file
qm set 123 --ipconfig0 ip=dhcp
```

The script is based on https://git.proxmox.com/?p=pve-docs...c011c659440e4b4b91d985dea79f98e5f083c;hb=HEAD

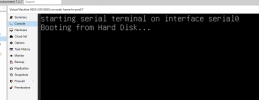

Unfortunately, when I boot VM 123, it hangs at booting from the hard disk. Did I miss anything?
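A minimal sketch for sanity-checking the clone, assuming the `key: value` lines that `qm config 123` prints (e.g. `boot: order=scsi0`, `ide2: local-lvm:vm-123-cloudinit,media=cdrom`). The helpers `check_cloudinit_vm` and `cfg_has` are hypothetical, not Proxmox tools, and only cover the settings the script above sets: the scsi0 disk, the boot order, the Cloud-Init drive and the serial console. Since the template uses `--vga serial0`, the guest's console output goes to the serial port, so the regular display may appear to stop early; `qm terminal 123` attaches to that serial console instead.

```bash
#!/bin/bash
# Sketch: sanity-check the output of `qm config <vmid>` against the settings
# the template script relies on. Hypothetical helpers, not part of Proxmox.

# cfg_has REGEX: succeed if any line of $cfg matches the extended regex
cfg_has() {
  printf '%s\n' "$cfg" | grep -Eq "$1"
}

# check_cloudinit_vm: read a VM config on stdin, print one OK/CHECK line per item
check_cloudinit_vm() {
  cfg=$(cat)

  # 1. The imported cloud image should be attached as scsi0.
  if cfg_has '^scsi0: '; then
    echo "OK: scsi0 disk attached"
  else
    echo "CHECK: no scsi0 disk"
  fi

  # 2. The boot order should include scsi0 (order= syntax or legacy bootdisk).
  if cfg_has '^boot: .*order=([^,]*;)?scsi0([;,]|$)' || cfg_has '^bootdisk: scsi0$'; then
    echo "OK: boot order includes scsi0"
  else
    echo "CHECK: boot order does not include scsi0"
  fi

  # 3. A Cloud-Init drive should be present (the script puts it on ide2).
  if cfg_has '^ide[0-9]+: [^,]*cloudinit'; then
    echo "OK: Cloud-Init drive present"
  else
    echo "CHECK: no Cloud-Init drive"
  fi

  # 4. With vga set to serial0, console output only shows on the serial port.
  if cfg_has '^vga: serial0'; then
    if cfg_has '^serial0: '; then
      echo "OK: serial console configured (attach with: qm terminal <vmid>)"
    else
      echo "CHECK: vga is serial0 but no serial0 device"
    fi
  fi
}
```

On the Proxmox host, after sourcing the file: `qm config 123 | check_cloudinit_vm`. Any `CHECK:` line points at a setting worth comparing against the script.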