Hi guys. I can't back up a VM, and nothing I've found by googling has helped, so I need some advice. Here is the log from the backup job:

INFO: Starting Backup of VM 3617 (qemu)
INFO: Backup started at 2023-01-08 08:06:15
INFO: status = running
INFO: VM Name: 3617
INFO: include disk 'sata0' 'local-zfs:vm-3617-disk-0' 50G
INFO: exclude disk 'sata1' 'hddzfs:vm-3617-disk-0' (backup=no)
INFO: backup mode: snapshot
INFO: ionice priority: 7
INFO: creating vzdump archive '/var/lib/vz/dump/vzdump-qemu-3617-2023_01_08-08_06_15.vma.zst'
INFO: started backup task 'cb27f70b-9ab0-47e5-a510-38d13c8a254a'
INFO: resuming VM again
INFO: 0% (348.0 MiB of 50.0 GiB) in 3s, read: 116.0 MiB/s, write: 73.6 MiB/s
INFO: 2% (1.1 GiB of 50.0 GiB) in 6s, read: 245.3 MiB/s, write: 75.4 MiB/s
INFO: 3% (1.9 GiB of 50.0 GiB) in 10s, read: 225.7 MiB/s, write: 68.9 MiB/s
INFO: 8% (4.0 GiB of 50.0 GiB) in 13s, read: 708.1 MiB/s, write: 76.3 MiB/s
INFO: 8% (4.2 GiB of 50.0 GiB) in 14s, read: 184.0 MiB/s, write: 65.0 MiB/s
ERROR: job failed with err -5 - Input/output error
INFO: aborting backup job
INFO: resuming VM again
ERROR: Backup of VM 3617 failed - job failed with err -5 - Input/output error
INFO: Failed at 2023-01-08 08:06:29
INFO: Backup job finished with errors
TASK ERROR: job errors

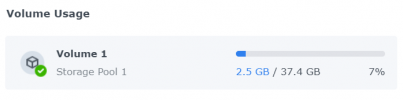

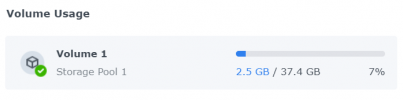

It always stops at 8%. (This is what I see of the 50 GiB disk from inside the VM:)
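To check that every attempt really dies at the same point, the last progress line can be pulled out of each task log. Below is a minimal Python sketch, assuming the vzdump log format shown above; `last_progress` is a hypothetical helper name, not part of Proxmox.

```python
import re

# Matches vzdump progress lines such as:
#   INFO: 8% (4.2 GiB of 50.0 GiB) in 14s, read: 184.0 MiB/s, write: 65.0 MiB/s
PROGRESS = re.compile(
    r"INFO:\s+(\d+)% \(([\d.]+) (KiB|MiB|GiB|TiB) of ([\d.]+) (KiB|MiB|GiB|TiB)\)"
)

# Conversion factors from each unit to GiB.
TO_GIB = {"KiB": 1 / 1024**2, "MiB": 1 / 1024, "GiB": 1.0, "TiB": 1024.0}


def last_progress(log_text):
    """Return (percent, done_gib, total_gib) for the last progress line, or None."""
    last = None
    for line in log_text.splitlines():
        m = PROGRESS.search(line)
        if m:
            pct, done, done_unit, total, total_unit = m.groups()
            last = (
                int(pct),
                float(done) * TO_GIB[done_unit],
                float(total) * TO_GIB[total_unit],
            )
    return last


if __name__ == "__main__":
    sample = """INFO: 3% (1.9 GiB of 50.0 GiB) in 10s, read: 225.7 MiB/s, write: 68.9 MiB/s
INFO: 8% (4.2 GiB of 50.0 GiB) in 14s, read: 184.0 MiB/s, write: 65.0 MiB/s
ERROR: job failed with err -5 - Input/output error"""
    print(last_progress(sample))
```

Fed the log above, it reports 8% at 4.2 GiB of 50.0 GiB, i.e. the read error hits only about 4.2 GiB into the disk.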

My backup destination shows 400 GB of free space.

I've tried both the default vzdump.conf and one with size set to 4096.


# vzdump default settings

#tmpdir: DIR
#dumpdir: DIR
#storage: STORAGE_ID
#mode: snapshot|suspend|stop
#bwlimit: KBPS
#performance: max-workers=N
#ionice: PRI
#lockwait: MINUTES
#stopwait: MINUTES
#stdexcludes: BOOLEAN
#mailto: ADDRESSLIST
#prune-backups: keep-INTERVAL=N[,...]
#script: FILENAME
#exclude-path: PATHLIST
#pigz: N
#notes-template: {{guestname}}
size: 4096

#tmpdir: DIR

#dumpdir: DIR

#storage: STORAGE_ID

#mode: snapshot|suspend|stop

#bwlimit: KBPS

#performance: max-workers=N

#ionice: PRI

#lockwait: MINUTES

#stopwait: MINUTES

#stdexcludes: BOOLEAN

#mailto: ADDRESSLIST

#prune-backups: keep-INTERVAL=N[,...]

#script: FILENAME

#exclude-path: PATHLIST

#pigz: N

#notes-template: {{guestname}}

size: 4096

root@prox:~# pveversion -v
proxmox-ve: 7.3-1 (running kernel: 5.15.83-1-pve)
pve-manager: 7.3-4 (running version: 7.3-4/d69b70d4)
pve-kernel-5.15: 7.3-1
pve-kernel-helper: 7.3-1
pve-kernel-5.15.83-1-pve: 5.15.83-1
pve-kernel-5.15.74-1-pve: 5.15.74-1
ceph-fuse: 15.2.17-pve1
corosync: 3.1.7-pve1
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve2
libproxmox-acme-perl: 1.4.3
libproxmox-backup-qemu0: 1.3.1-1
libpve-access-control: 7.3-1
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.3-1
libpve-guest-common-perl: 4.2-3
libpve-http-server-perl: 4.1-5
libpve-storage-perl: 7.3-1
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.0-3
lxcfs: 4.0.12-pve1
novnc-pve: 1.3.0-3
proxmox-backup-client: 2.3.2-1
proxmox-backup-file-restore: 2.3.2-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.5.3
pve-cluster: 7.3-1
pve-container: 4.4-2
pve-docs: 7.3-1
pve-edk2-firmware: 3.20220526-1
pve-firewall: 4.2-7
pve-firmware: 3.6-2
pve-ha-manager: 3.5.1
pve-i18n: 2.8-1
pve-qemu-kvm: 7.1.0-4
pve-xtermjs: 4.16.0-1
qemu-server: 7.3-2
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.8.0~bpo11+2
vncterm: 1.7-1
zfsutils-linux: 2.1.7-pve2