Hey Proxmoxers,

I'm facing a weird problem.

Proxmox Version 8.2.4.

I have a bond0 that is split into VLANs, and a vmbr0 that is VLAN-aware.

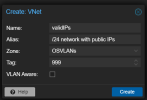

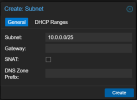

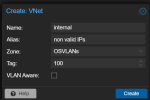

Let's say I have a private network, 192.168.1.0/24, with some VMs on it, each with a virtio NIC on vmbr0 with VLAN tag 100. Now I am adding another VM that has two virtio NICs: one on vmbr0 with tag 999 and one on vmbr0 with tag 100. Tag 999 is the public network. This machine, let's call it the gateway, MASQUERADEs (NATs) the traffic of the private network machines toward the public network.

This does work, but it is INSANELY slow, and I am super confused as to why. I could have sworn that this used to work fine, but I cannot pinpoint when it became so slow.

iperf between a "private client VM" (vmbr0, VLAN tag 100) and the gateway machine is fast (enough): ~8 Gbit/s.

iperf between the "internet" and the gateway machine on vmbr0 with VLAN tag 999 is also fast (enough).

However, downloading from a private client VM through the gateway is utterly slow: < 80 kb/s. I tried replacing virtio with E1000E, and I fiddled with the MTUs. Nothing changed. What am I missing?

I originally used OPNsense as my gateway, and when I first saw the problem I thought OPNsense was the cause, but no: a regular Ubuntu VM acting as the gateway shows the same behavior.

Any hints are appreciated. I have already spent many hours on this...

All NICs have at least multiqueue=2.

Best

inDane
