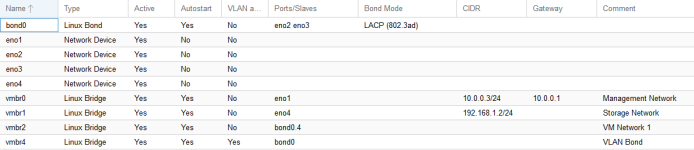

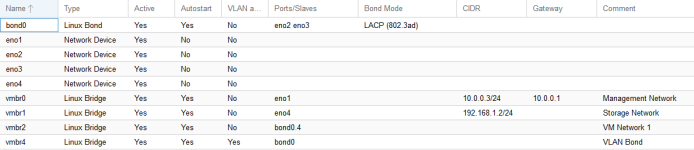

I recently updated my 4-node Proxmox cluster to version 6.0-11. Since the update, machines on a VLAN can no longer access the internet, connect to any other machine, or get an IP address. VMs and containers not on a VLAN continue to work just fine. Nothing else in the environment changed besides the Proxmox update. Each of my 4 nodes has 4 NICs, and all nodes share an identical network configuration. They are all Dell PowerEdge R610 servers.

Does anyone have any ideas on how I can resolve this? Currently about 700 VMs/containers are unable to connect to the internet!!!