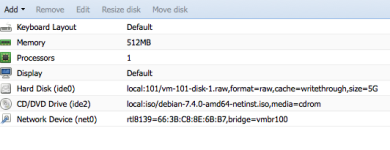

Hi, I'm trying to configure two nodes of a cluster. Each node has two NICs.

I plan to use NIC 1 on each node for internal communication (Gluster, etc.), and NIC 2 on each node as the bridge for the virtual machines.

Since the VMs are on different LANs, I need to configure NIC 2 with VLAN support. I'd also like to use another VLAN for managing the nodes.
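For comparison, newer Proxmox VE releases document a second way to express this plan: one VLAN-aware bridge on top of the bond, with the management address on a VLAN interface of that bridge instead of a separate vmbr0v99 bridge. The fragment below is an untested sketch. It assumes that eth1 is NIC 2, that VLAN 99 is the management VLAN, and that the installed network scripts support the `bridge-vlan-aware` and `bridge-vids` options (older ifupdown releases may not). The addresses are reused from the configuration in this post, and the hyphenated option spelling follows current Proxmox VE documentation.

```
auto lo
iface lo inet loopback

# NIC 1: internal communication (Gluster)
auto eth0
iface eth0 inet static
        address 192.168.98.3
        netmask 255.255.255.0

# NIC 2 in a single-slave bond, as in the original config
auto bond0
iface bond0 inet manual
        bond-slaves eth1
        bond-miimon 100
        # balance-rr is the same mode as "bond_mode 0"
        bond-mode balance-rr

# VLAN-aware bridge for the VMs
# (assumption: the installed ifupdown supports bridge-vlan-aware)
auto vmbr0
iface vmbr0 inet manual
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094

# Management VLAN 99 as a VLAN interface on the bridge itself
auto vmbr0.99
iface vmbr0.99 inet static
        address 192.168.99.3
        netmask 255.255.255.0
        gateway 192.168.99.254
```

With a VLAN-aware bridge, each VM's VLAN tag is applied on its own port of vmbr0, so Proxmox should not need to create per-VLAN bridges such as vmbr1v4.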

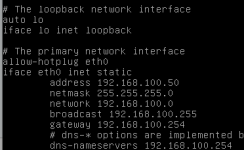

Here is the configuration:

```
auto lo
iface lo inet loopback

auto bond0
iface bond0 inet manual
        slaves eth1
        # bond_mode 0 = balance-rr
        bond_mode 0
        bond_xmit_hash_policy layer2
        bond_miimon 100
        bond_downdelay 0
        bond_updelay 0

# Bridge for VMs
#auto vmbr0
#iface vmbr0 inet manual
#        bridge_ports bond0
#        bridge_stp off
#        bridge_fd 0

# Management VLAN
auto vmbr0v99
iface vmbr0v99 inet static
        address 192.168.99.3
        netmask 255.255.255.0
        network 192.168.99.0
        gateway 192.168.99.254
        bridge_ports bond0.99
        bridge_stp off
        bridge_fd 0

# Internal communication
auto eth0
iface eth0 inet static
        address 192.168.98.3
        netmask 255.255.255.0
```

With this configuration I can reach the node, and from the node I can reach the internet, so the switch port is tagged correctly.

But the VMs need a bridge like vmbr0, and if I enable it (by uncommenting the vmbr0 stanza), I can no longer reach the node.

Furthermore, from this thread: http://188.165.151.221/threads/12850-Problems-with-VLAN-s

```
...

and if you create a vm, with network interface on vmbr1 + vlan tag 4, proxmox create a

vmbr1v4
iface vmbr1v4 inet manual
        bridge_ports bond0.4
        bridge_stp off
        bridge_fd 0

(not in /etc/network/interfaces, but directly in memory)
```

Is there any way to have a bridge on the bond that carries the VMs' VLANs and, at the same time, a management VLAN for the nodes?

Thanks.