Hi,

I am out of ideas, so I really hope someone can point me in the right direction. I am building a few Proxmox nodes for network virtualization, and I have configured Open vSwitch with DPDK support. All of that is working fine, and I do not think the performance problems are directly related to DPDK or OVS.

Now I am trying to run some tests with iperf from a couple of VMs on the Proxmox nodes. I can only get 7.5 Gbps with TCP and 3.5 Gbps with UDP, which seems really slow. I have been diagnosing OVS and DPDK, and there is next to no load there while the tests run (the PMD threads are at a few percent utilization). However, the guests, which run iperf3 on Ubuntu, are maxed out on CPU. I cannot understand why that would be the case at such low traffic volumes.
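To put the UDP figure in perspective, here is a rough back-of-the-envelope sketch of the packet rate it implies. It assumes 1460-byte UDP datagrams (the fallback default listed in the iperf3 man page; the size actually used in these runs may differ) and counts payload bits only, ignoring UDP/IP/Ethernet headers:

```python
def packets_per_second(bitrate_bps: float, payload_bytes: int) -> float:
    """Datagrams per second needed to carry bitrate_bps of payload."""
    return bitrate_bps / (payload_bytes * 8)


def ns_per_packet(pps: float) -> float:
    """Time budget per datagram if a single core handles every packet."""
    return 1e9 / pps


# Assumption: 1460-byte datagrams, payload bits only (headers ignored).
udp_pps = packets_per_second(3.5e9, 1460)
print(f"{udp_pps:,.0f} packets/s, ~{ns_per_packet(udp_pps):,.0f} ns per packet")
# prints "299,658 packets/s, ~3,337 ns per packet"
```

In other words, at 3.5 Gbps one guest core would have roughly 3.3 µs per datagram for the whole send path (syscall, UDP/IP stack, virtio-net driver).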

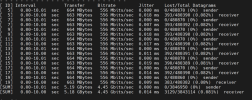

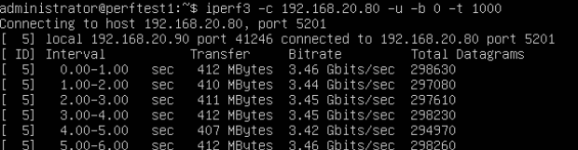

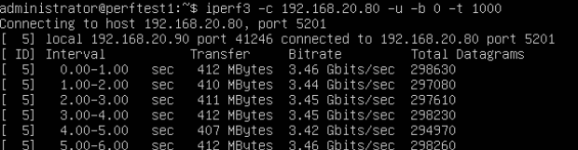

UDP Results:

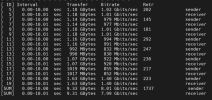

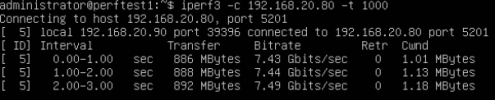

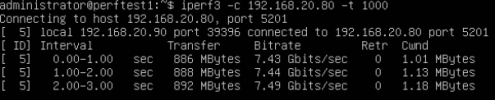

TCP Results:

Running with multiple TCP streams (e.g. -P 4) does not change the results: same bitrate and the same observed CPU load.
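One way to compare single-stream and multi-stream runs side by side is iperf3's JSON output (`-J`), which includes both the throughput and iperf3's own CPU-usage figures. Below is a minimal sketch of a summarizer; the key names (`end.sum_received`, `end.sum`, `end.cpu_utilization_percent`) are my understanding of iperf3's JSON layout and are worth double-checking against the installed version, and the function name is hypothetical:

```python
import json


def summarize_iperf3(report_json: str) -> dict:
    """Pull throughput and CPU usage out of `iperf3 -J` output.

    Assumed layout: TCP totals under end.sum_received, UDP totals under
    end.sum, CPU usage under end.cpu_utilization_percent.
    """
    end = json.loads(report_json)["end"]
    # TCP reports carry sum_sent/sum_received; UDP reports carry "sum".
    totals = end.get("sum_received") or end["sum"]
    cpu = end.get("cpu_utilization_percent", {})
    return {
        "gbps": totals["bits_per_second"] / 1e9,
        "local_cpu_pct": cpu.get("host_total"),    # CPU on the side running this iperf3
        "remote_cpu_pct": cpu.get("remote_total"),  # CPU on the other end
    }
```

Feeding it the output of one run with `-P 1` and one with `-P 4` (both with `-J`) gives bitrate and CPU numbers that can be compared directly.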

Here is the config from one of the test VMs:

```
args: -machine q35+pve0,kernel_irqchip=split -device intel-iommu,intremap=on,caching-mode=on -chardev socket,id=char1,path=/var/run/vhostuserclient/vhost-int-105-100,server=on -netdev type=vhost-user,id=net0,chardev=char1,vhostforce=on,queues=4 -device virtio-net-pci,mac=00:00:00:00:00:07,mq=on,vectors=10,netdev=net0 -chardev socket,id=char2,path=/var/run/vhostuserclient/vhost-int-105-17,server=on -netdev type=vhost-user,id=net1,chardev=char2,vhostforce=on,queues=4 -device virtio-net-pci,mac=00:00:00:00:00:08,mq=on,vectors=10,netdev=net1
balloon: 0
boot: order=scsi0;ide2
cores: 8
cpu: host
ide2: none,media=cdrom
machine: q35
memory: 2048
hugepages: 1024
meta: creation-qemu=6.2.0,ctime=1651834160
name: perftest1
numa: 1
ostype: l26
scsi0: local:105/vm-105-disk-0.qcow2,size=32G,ssd=1
scsihw: virtio-scsi-pci
smbios1: uuid=c7e1da1a-1117-49fe-9119-aad1085225d8
sockets: 1
vmgenid: 80607b5b-5ba0-4c21-b4ae-fc9d5594ec0d
```
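A quick sanity check on the multi-queue settings in the `args:` line: the commonly documented rule for virtio-net multiqueue is `vectors=2N+2` for `queues=N` (one interrupt vector per RX and TX queue, plus one for config and one for the control queue). Below is a minimal sketch that checks this; the function names are hypothetical, and it only understands the simple `-flag value` form used in this config:

```python
import shlex


def parse_opts(value: str) -> dict:
    """Split a QEMU 'a=b,c=d,word' option string; bare words map to ''."""
    opts = {}
    for part in value.split(","):
        key, sep, val = part.partition("=")
        opts[key] = val if sep else ""
    return opts


def check_vectors(args: str) -> list:
    """Warn for each virtio-net-pci device whose vectors != 2 * queues + 2."""
    tokens = shlex.split(args)
    queues = {}   # netdev id -> value of queues= (QEMU default is 1)
    devices = []  # option dicts of virtio-net-pci devices
    for flag, value in zip(tokens, tokens[1:]):
        if flag not in ("-netdev", "-device"):
            continue
        opts = parse_opts(value)
        if flag == "-netdev" and "id" in opts:
            queues[opts["id"]] = int(opts.get("queues", "1"))
        elif flag == "-device" and "virtio-net-pci" in opts:
            devices.append(opts)
    warnings = []
    for dev in devices:
        n = queues.get(dev.get("netdev"), 1)
        expected = 2 * n + 2
        vectors = dev.get("vectors")
        if vectors is None or int(vectors) != expected:
            warnings.append(
                f"{dev.get('mac', '?')}: vectors={vectors}, "
                f"expected {expected} for queues={n}"
            )
    return warnings
```

Given the `args:` value above (without the `args:` prefix), this returns an empty list, since both devices use `queues=4` and `vectors=10`.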

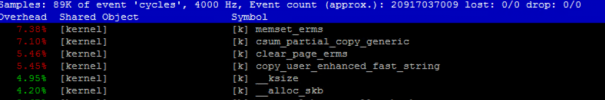

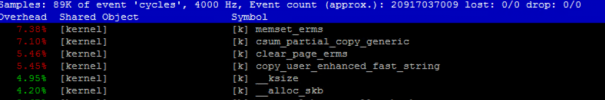

Running perf top in the iperf client VM shows the following:

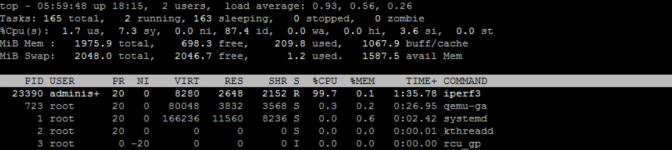

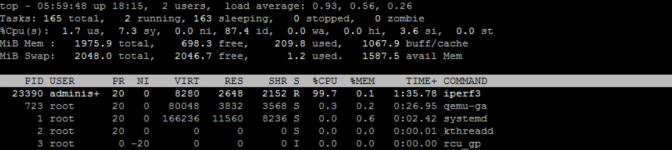

Meanwhile, top shows that the iperf process is maxing out a core.

I have tried with and without multi-queue, and with various CPU configurations on the test client VMs, all without any significant impact.
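When multi-queue appears to make no difference, one thing worth ruling out is whether the guest is actually using the extra queues; depending on the guest kernel, they may need to be enabled with `ethtool -L <iface> combined 4`. A minimal sketch that counts the `rx-N`/`tx-N` entries the guest kernel exposes under `/sys/class/net/<iface>/queues` (the function name is hypothetical; pass the guest's virtio-net interface name, e.g. `active_queues("ens18")`, where `ens18` is only a placeholder):

```python
from pathlib import Path


def active_queues(iface: str, sysfs_net: str = "/sys/class/net") -> tuple:
    """Count the RX and TX queues the guest kernel exposes for iface in sysfs."""
    qdir = Path(sysfs_net) / iface / "queues"
    names = [entry.name for entry in qdir.iterdir()]
    rx = sum(1 for name in names if name.startswith("rx-"))
    tx = sum(1 for name in names if name.startswith("tx-"))
    return rx, tx
```

If this reports `(1, 1)` on a VM configured with `queues=4`, the extra queues are most likely not enabled inside the guest.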

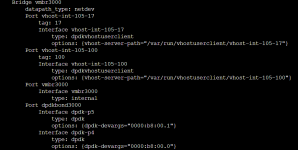

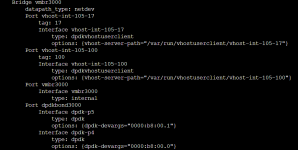

For reference, here is the OVS config. The host CPU is an Intel D-2187 and the NICs are Intel X710.
