I reinstalled the license server as a Docker container this time and it all worked. The VM received a 3-month license. I am not sure what is going on in the LXC container; it may be something to do with OpenSSL or other libraries. The "Failed to verify signature on lease response" error is a cryptographic and configuration problem, and so is "Failed to acquire license from 10.10.1.135".

[SOLVED] vGPU just stopped working randomly (solution includes 6.14, pascal fixes for 17.5, changing mock p4 to A5500 thanks to GreenDam )

- Thread starter zenowl77


I believe I only got this error with kernel versions after 6.8 (not including 6.8). The driver version doesn't seem to matter: officially supported 16.11, older "patched" 16.x drivers, or 17.x (patched or unpatched).

@Randell - FYI I stopped getting this error when I did a clean install with PVE8. I haven't worked out what the cause is yet, but so far on a clean install with only the 570 unlocked drivers that error is gone. I'm now checking whether it has actually made my GPU encoding stable, and if it has, I will upgrade to PVE9 again and see whether anything changes.

I have yet to try patching everything with patched 18 series drivers. I'm considering just finding a Turing based card so I can use newer drivers without worrying about patching, but since I play around with a 3 node cluster at home, I don't want to buy 3 cards. (And I expect Turing will get dropped before I know it and I'll be right back here in the next release.)

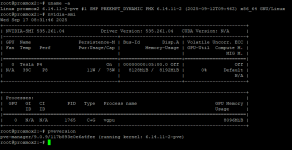

Hi everyone, I'm running a TESLA P4 on PVE9 kernel 6.14.11-2 using non-patched 16.11 (535.261.04) drivers.

What I've done was basically nvidia-uninstall the last working drivers for PVE8, keeping all the vGPU shenanigans untouched except recompiling the unlock repo, and install unpatched 16.11 drivers (I wasn't able to patch it on any kernel I have installed).

Simply ./NVIDIA.......run, reboot et voila!

P4 is shown as a T4 in mediated devices, but everything works fine as before as T4-8Q on a Win10 machine on which I successfully updated the guest drivers as well.

I also have a P40 but I use it on a direct passthrough to a Linux VM, so it's not shown on nvidia-smi output. Lmk if you want me to investigate more on that card as well.


@zenowl77

Hello, are you able to use the override_profiles with your setup? I run Proxmox 9 with a 1060 6GB GPU and the 18.4 driver on host and guest. Everything works, but without overrides, so the frame rate is limited to 45fps and the resolution to 1280x1024.

It worked fine on the 6.8 kernel with 16.5 drivers, but now it ignores the override file. What am I missing?

yes it works fine for me, i use it with a 1080p monitor via remote desktop every day.

here is the exact override config i use for that VM most of the time:

Code:

[vm.128]

display_width = 3840

display_height = 2160

max_pixels = 8294400

cuda_enabled = 1

frl_enabled = 0

framebuffer = 0x1DC000000

framebuffer_reservation = 0x24000000 # 8GB

Hi everyone, I was having a hard time getting this to work on PVE-9 so I installed 8.4 and now everything is working.


I've got a Quadro P6000 and a Titan Xp, both set up as vGPUs.

The only problem I'm having is that the P6000 has 24GB of RAM, but vGPU 17.5 emulates a T4, which only has 16GB.

When I go to create a 3rd VM, it says I have zero devices available for 8GB (two VMs are currently running with nvidia-233):

Code:

nvidia-smi vgpu

Tue Nov 11 17:09:58 2025

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 550.144.02 Driver Version: 550.144.02 |

|---------------------------------+------------------------------+------------+

| GPU Name | Bus-Id | GPU-Util |

| vGPU ID Name | VM ID VM Name | vGPU-Util |

|=================================+==============================+============|

| 0 NVIDIA TITAN Xp | 00000000:03:00.0 | 0% |

+---------------------------------+------------------------------+------------+

| 1 Quadro P6000 | 00000000:81:00.0 | 0% |

| 3251634207 GRID T4-8Q | 7cfe... win11,debug-thre... | 0% |

| 3251646173 GRID T4-8Q | c4b0... win11xristos,deb... | 0% |

+---------------------------------+------------------------------+------------+

How can I change from a T4 to some other card that has 24GB of RAM?

Thanks

you want to change the lines in vgpu_unlock-rs/src/lib.rs at 370-377 where it says Tesla T4 and gives a hex code. change the 1EB8 to 1EB9 for the edition of the T4 with 32GB (i can't find anything about the card itself as a 32GB edition, but a few lists give that option or list it as a Quadro 6000), or just use 1E38 for a T40, which is 24GB exactly.

i believe it should work after either a reboot or recompile + reboot.

currently you're emulating a 16gb card so 8+8=16, that limit definitely makes sense there. it should be fixed once you change which card you are spoofing it into.
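The sizing arithmetic in this thread can be sanity-checked with a few lines of Python. This is only a sketch: the hex values and display size are copied from the override config posted earlier, and `max_instances` is a hypothetical helper that assumes the driver simply divides the emulated card's memory by the profile size, which is what the 8+8=16 observation suggests but is not confirmed anywhere in the thread.

```python
GIB = 1024 ** 3

# Values copied from the override config posted earlier in the thread.
FRAMEBUFFER = 0x1DC000000
FRAMEBUFFER_RESERVATION = 0x24000000
DISPLAY_WIDTH, DISPLAY_HEIGHT = 3840, 2160


def max_instances(card_memory_gb: int, profile_memory_gb: int) -> int:
    """Hypothetical helper: profiles per card, assuming plain integer division."""
    return card_memory_gb // profile_memory_gb


# framebuffer + framebuffer_reservation should add up to the profile size.
profile_total_gib = (FRAMEBUFFER + FRAMEBUFFER_RESERVATION) / GIB
# max_pixels in the config is just width * height.
max_pixels = DISPLAY_WIDTH * DISPLAY_HEIGHT

print(profile_total_gib)     # 8.0
print(max_pixels)            # 8294400
print(max_instances(16, 8))  # 2, emulated 16GB T4
print(max_instances(24, 8))  # 3, a 24GB card, if the spoof is accepted
```

Under that assumption, two 8GB profiles use up an emulated 16GB card, while a 24GB identity would leave room for a third.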

the issue with PVE 9 is that compiling the patch is broken. if you use the patched driver from 8.4 on 9 it will work fine. (i personally booted up a VM with pve 8.4 just to compile my driver on it and move it to 9, you don't need the gpu attached or anything to compile the drivers)

you're welcome.

Thanks @zenowl77!

I couldn't get mdevctl types to output anything in PVE 9. I tried many driver/patch combinations and none worked.

When you say 'recompile', which part do I need to recompile?

Let me try to edit your suggestion and reboot.

Code:

cd vgpu_unlock-rs/

cargo build --release

this is what i mean but i don't know if it will be needed at all. (i think it should work if you just change the line)

Well, that was a quick recompile, 2.6 seconds:

Code:

/opt/vgpu_unlock-rs# cargo build --release

Compiling vgpu_unlock-rs v2.5.0 (/opt/vgpu_unlock-rs)

warning: struct `StraightFormat` is never constructed

--> src/format.rs:93:12

|

93 | pub struct StraightFormat<T>(pub T);

| ^^^^^^^^^^^^^^

|

= note: `#[warn(dead_code)]` (part of `#[warn(unused)]`) on by default

warning: `vgpu_unlock-rs` (lib) generated 1 warning

Finished `release` profile [optimized] target(s) in 2.68s

Rebooting now.

Darn, after this change, now I get no mdevctl types and I see this in the log:

mdevctl types

root@proxmox:~# dmesg -T | grep vgpu

[Tue Nov 11 18:39:38 2025] [nvidia-vgpu-vfio] No vGPU types present for GPU 0x300

[Tue Nov 11 18:39:38 2025] [nvidia-vgpu-vfio] No vGPU types present for GPU 0x8100

[Tue Nov 11 18:39:38 2025] [nvidia-vgpu-vfio] vGPU device data not available. No vGPU devices found for GPU 0000:03:00.0

[Tue Nov 11 18:39:38 2025] [nvidia-vgpu-vfio] vGPU device data not available. No vGPU devices found for GPU 0000:81:00.0
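When repeating this test across several device IDs, it may help to pull the affected GPUs out of the dmesg output automatically. The following is a rough sketch, not part of vgpu_unlock-rs or the NVIDIA driver: the function name and regular expressions are made up for illustration and are based only on the message wording shown in the log above.

```python
import re

# Patterns based on the two message formats seen in the log above (assumed stable).
NO_TYPES = re.compile(r"No vGPU types present for GPU (0x[0-9a-fA-F]+)")
NO_DEVICES = re.compile(r"No vGPU devices found for GPU ([0-9a-fA-F:.]+)")

SAMPLE = """\
[Tue Nov 11 18:39:38 2025] [nvidia-vgpu-vfio] No vGPU types present for GPU 0x300
[Tue Nov 11 18:39:38 2025] [nvidia-vgpu-vfio] No vGPU types present for GPU 0x8100
[Tue Nov 11 18:39:38 2025] [nvidia-vgpu-vfio] vGPU device data not available. No vGPU devices found for GPU 0000:03:00.0
[Tue Nov 11 18:39:38 2025] [nvidia-vgpu-vfio] vGPU device data not available. No vGPU devices found for GPU 0000:81:00.0
"""


def summarize_vgpu_errors(dmesg_text):
    """Return (GPU ids, PCI addresses) that reported missing vGPU types, in log order."""
    gpu_ids = list(dict.fromkeys(NO_TYPES.findall(dmesg_text)))
    pci_addresses = list(dict.fromkeys(NO_DEVICES.findall(dmesg_text)))
    return gpu_ids, pci_addresses


print(summarize_vgpu_errors(SAMPLE))
```

An empty result after a reboot would suggest the driver accepted the spoofed ID, which can then be confirmed with mdevctl types.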


Ok, I changed them back to 0x1EB8, recompiled, rebooted, and now mdevctl types shows all the profiles.

I then tried changing to 1B38 for a P40 24GB, but got the same error: no vGPU devices found and no mdevctl types.

I changed to 1E38 as you suggested, re-compiled, rebooting now.

Nope, same issue, no mdevctl types, and error in dmesg:

dmesg -T | grep vgpu

[Tue Nov 11 18:59:23 2025] [nvidia-vgpu-vfio] No vGPU types present for GPU 0x300

[Tue Nov 11 18:59:24 2025] [nvidia-vgpu-vfio] No vGPU types present for GPU 0x8100

[Tue Nov 11 18:59:24 2025] [nvidia-vgpu-vfio] vGPU device data not available. No vGPU devices found for GPU 0000:03:00.0

[Tue Nov 11 18:59:24 2025] [nvidia-vgpu-vfio] vGPU device data not available. No vGPU devices found for GPU 0000:81:00.0

Maybe I need to edit the lib.rs in other places as well?
