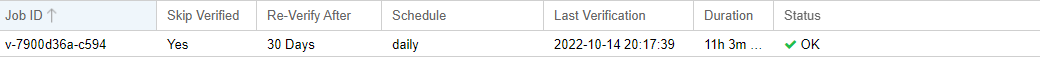

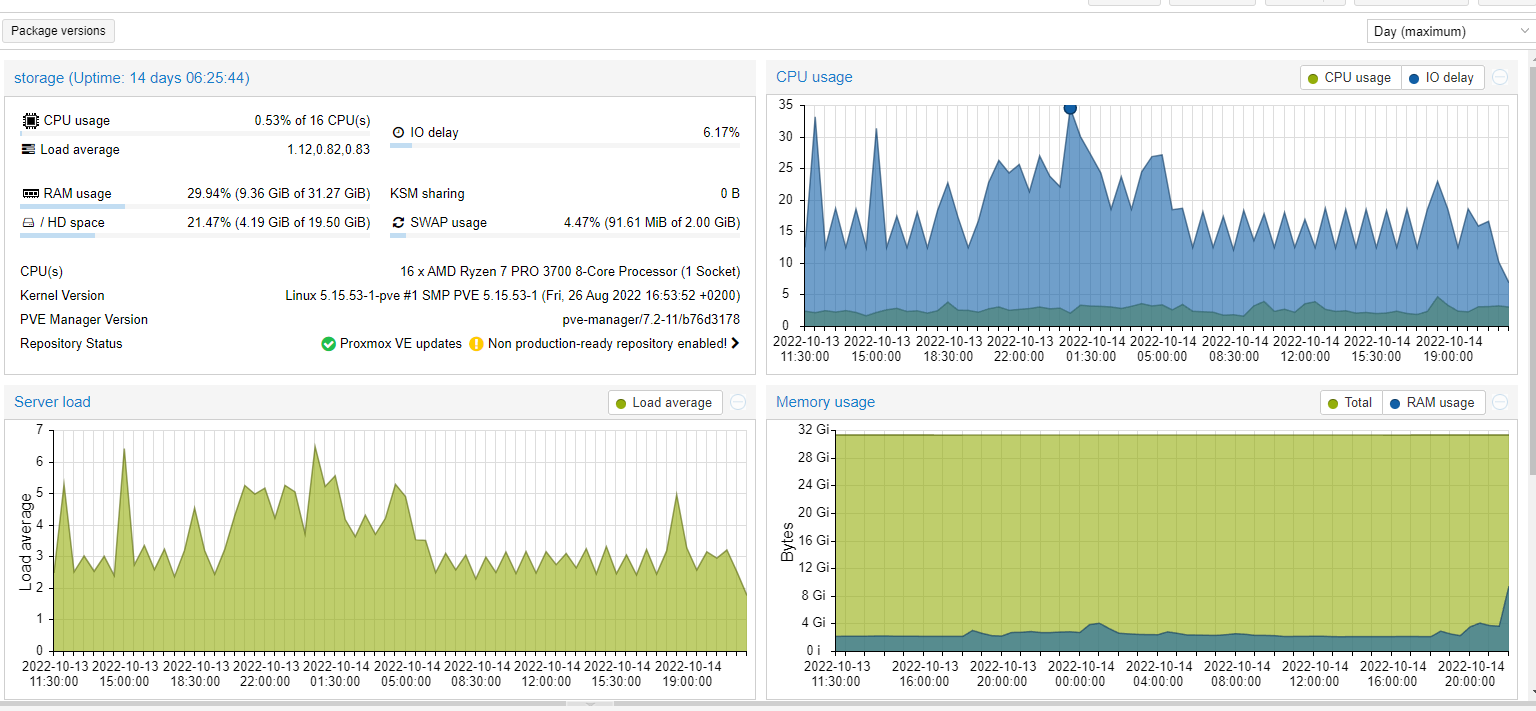

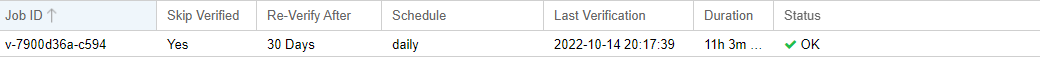

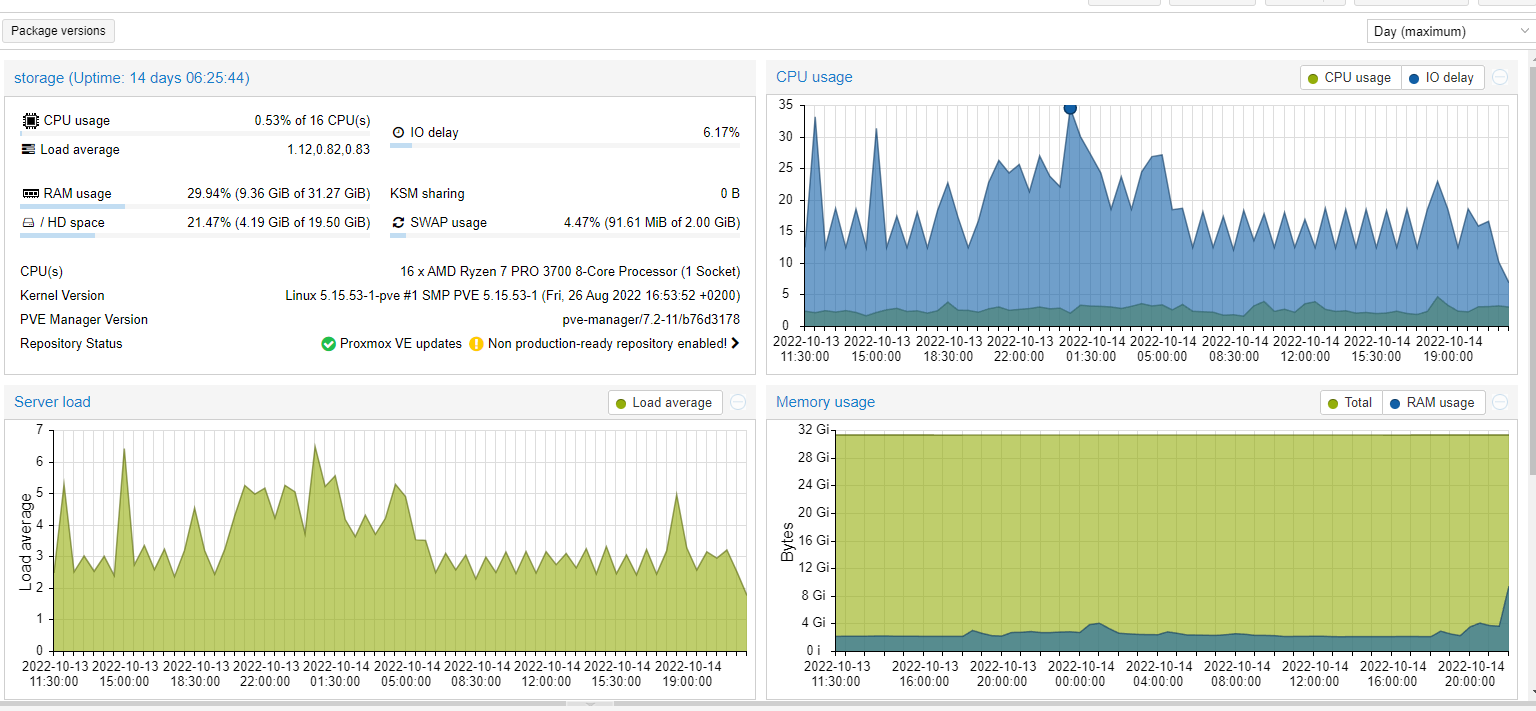

I back up about 100 virtual machines daily and see terrible I/O performance during verifications, which take 10+ hours.

The disks are 4x WUH721414AL5201 in RAID 10 (hardware RAID) (https://www.serversupply.com/HARD D...PM/WESTERN DIGITAL/WUH721414AL5201_313352.htm)

Hardware RAID controller: LSI MegaRAID SAS 9361-8i

Is this caused by the sector size of the disks? Should it be set smaller?

Code:

Sector Size: 512

Logical Sector Size: 512