We are running a few Proxmox Backup Server (PBS) instances deployed on top of TrueNAS, which is based on ZFS, so I am fairly sure this is not a hardware disk failure.

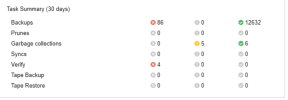

But recently, when I tried to restore a backup from PBS, I found that some backups are broken: the error log shows that some chunks are missing (no such file or directory). After that, I started verify jobs on each deployment, and every node reported some failed backups.

Here is how our backup job works:

Within each region, a few PVE nodes (4-5) are connected to one Proxmox Backup Server (the nodes are not clustered). The VMs on the different nodes are backed up between 0:00 and 6:00, and we delete the previous backup before creating a new one. Garbage collection runs every day, so I suspect it may be running at the same time as the backups. Could it have accidentally removed some chunk files?
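To make the suspicion concrete, here is a minimal Python sketch that checks whether a garbage collection window overlaps the backup window. The backup window (0:00-6:00) is from our setup; the GC window below is a made-up placeholder, since I have not confirmed when GC actually starts and finishes, and the function name `windows_overlap` is just illustrative. It assumes neither window crosses midnight.

```python
from datetime import time


def windows_overlap(start_a, end_a, start_b, end_b):
    """Return True if two same-day half-open windows [start, end) overlap.

    Assumes neither window crosses midnight.
    """
    return start_a < end_b and start_b < end_a


# Backup window as described above.
BACKUP_WINDOW = (time(0, 0), time(6, 0))

# Hypothetical GC window: replace with the real start/end times
# taken from the GC task log on the backup server.
GC_WINDOW = (time(3, 0), time(4, 30))

if windows_overlap(*BACKUP_WINDOW, *GC_WINDOW):
    print("GC window overlaps the backup window")
else:
    print("GC window does not overlap the backup window")
```

An overlap alone would not prove that GC removed chunks that were still needed; it only tells me whether the two jobs could have been running concurrently.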

I am using:

Code:

Proxmox Backup Server 3.0-1