Hi,

I get the following:

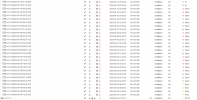

Code:

Datastore: nas0

Verification failed on these snapshots/groups:

vm/113/2023-04-22T02:25:46Z

vm/113/2023-04-22T01:08:44Z

vm/113/2023-04-21T22:26:17Z

vm/113/2023-04-21T20:25:32Z

vm/113/2023-04-21T18:25:38Z

vm/113/2023-04-21T16:25:25Z

vm/113/2023-04-21T14:25:45Z

vm/113/2023-04-21T12:25:38Z

vm/113/2023-04-21T10:25:36Z

vm/113/2023-04-21T08:25:42Z

vm/113/2023-04-21T06:25:47Z

vm/113/2023-04-21T04:25:55Z

and I do not understand how to fix this.

Most likely something went wrong when the storage was full.

Here is the log of the verify task.