Hello Forum!

I run a 3-node hyper-converged, meshed 10GbE Ceph cluster as a test environment on 3 identical HP servers, currently updated to the latest version (PVE 6.3-3 and Ceph Octopus 15.2.8, no HA), with 3 x 16 SAS HDDs connected via HBA (3 x PVE OS + 45 OSDs), RBD only, and the Ceph dashboard activated. Backups are made via the PVE GUI to a shared NFS NAS (vzdump.lzo).

This cluster was originally set up in early 2019 and ran for some months with sparse load. In 2020 it was updated to PVE 6.x and Ceph Nautilus. I set up some VMs, configured bridged networks, added a dedicated corosync network, added a 10GbE interface to the LAN bridge, migrated some VMs online and offline, and ran some tests to learn how to manage the environment; the results were quite satisfying. Once I was forced to unlock a stuck VM and restore it from backup, and after the Ceph update to Nautilus I successfully recreated all OSDs of one node, which in the end resulted in a healthy Ceph cluster running 45 OSDs in one pool containing 1024 PGs.

Two weeks ago, after upgrading to PVE 6.3-3 and Ceph Octopus, things started to go wrong. As far as I can recall, that was the beginning of a still ongoing cascade of failures.

Ceph autoscaling started to reduce the number of PGs from 1024 to 128, and the target changed several times during the ongoing rebalancing. While there was heavy OSD activity, health warnings for slow ops appeared and flooded syslog with up to 30 gigabytes, so that the node's root filesystem almost ran out of space and a nightly backup task hung for hours before I stopped it. The related VM could not be started any more. Finally, after unlocking it, I was able to restore the VM from backup into a new VM ID, and then I tried to get rid of the original VM, which failed.

The restored VM got stuck again after 3 days when I tried, without success, to migrate it to a different node, while massive health warnings continued to flood syslog and heavy rebalancing activity occurred. This time, restoring the VM into a new ID got stuck with the following message (task output):

...

trying to acquire cfs lock 'storage-vm_store' ...

trying to acquire cfs lock 'storage-vm_store' ...

new volume ID is 'vm_store:vm-108-disk-0'

Several attempts to restore the VM failed, and finally I had to stop the pending tasks. Eventually I removed the disks via rbd. Then I tried to clean up by removing the unresponsive VMs via the GUI, which just didn't happen.

To make a long story short: all my attempts to resolve the problems, and the mistakes I certainly made while trying to restore and clean up VMs, led to an unstable, hardly manageable Ceph cluster, which I will have to rebuild from scratch. Since I don't want to repeat my errors, I have a bunch of questions regarding some of the issues I faced.

But first some basic information about the environment:

# pveversion -v

proxmox-ve: 6.3-1 (running kernel: 5.4.78-2-pve)

pve-manager: 6.3-3 (running version: 6.3-3/eee5f901)

pve-kernel-5.4: 6.3-3

pve-kernel-helper: 6.3-3

pve-kernel-5.3: 6.1-6

pve-kernel-5.4.78-2-pve: 5.4.78-2

pve-kernel-5.4.73-1-pve: 5.4.73-1

pve-kernel-5.4.65-1-pve: 5.4.65-1

pve-kernel-5.4.60-1-pve: 5.4.60-2

pve-kernel-5.4.44-2-pve: 5.4.44-2

pve-kernel-5.4.34-1-pve: 5.4.34-2

pve-kernel-4.15: 5.4-12

pve-kernel-5.3.18-3-pve: 5.3.18-3

pve-kernel-5.3.18-1-pve: 5.3.18-1

pve-kernel-5.3.13-1-pve: 5.3.13-1

pve-kernel-4.15.18-24-pve: 4.15.18-52

pve-kernel-4.15.18-9-pve: 4.15.18-30

ceph: 15.2.8-pve2

ceph-fuse: 15.2.8-pve2

corosync: 3.0.4-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.16-pve1

libproxmox-acme-perl: 1.0.7

libproxmox-backup-qemu0: 1.0.2-1

libpve-access-control: 6.1-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.3-2

libpve-guest-common-perl: 3.1-4

libpve-http-server-perl: 3.1-1

libpve-storage-perl: 6.3-4

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.3-1

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.0.6-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.4-3

pve-cluster: 6.2-1

pve-container: 3.3-2

pve-docs: 6.3-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.1-3

pve-ha-manager: 3.1-1

pve-i18n: 2.2-2

pve-qemu-kvm: 5.1.0-8

pve-xtermjs: 4.7.0-3

qemu-server: 6.3-3

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 0.8.5-pve1

Current state of the Ceph cluster (2 pools with 512 PGs each + one automatically created 'device_health_metrics' pool; osd.2, osd.74 and osd.77 were shut down due to slow ops; no VM running):

# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 12.26364 root default

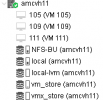

-3 4.08829 host amcvh11

1 hdd 0.27280 osd.1 up 1.00000 1.00000

2 hdd 0.27280 osd.2 down 0 1.00000

3 hdd 0.27249 osd.3 up 1.00000 1.00000

4 hdd 0.27249 osd.4 up 1.00000 1.00000

5 hdd 0.27249 osd.5 up 1.00000 1.00000

6 hdd 0.27249 osd.6 up 1.00000 1.00000

7 hdd 0.27280 osd.7 up 1.00000 1.00000

8 hdd 0.27249 osd.8 up 1.00000 1.00000

9 hdd 0.27249 osd.9 up 1.00000 1.00000

10 hdd 0.27249 osd.10 up 1.00000 1.00000

11 hdd 0.27249 osd.11 up 1.00000 1.00000

12 hdd 0.27249 osd.12 up 1.00000 1.00000

14 hdd 0.27249 osd.14 up 1.00000 1.00000

45 hdd 0.27249 osd.45 up 1.00000 1.00000

47 hdd 0.27249 osd.47 up 1.00000 1.00000

-5 4.08768 host amcvh12

13 hdd 0.27280 osd.13 up 1.00000 1.00000

48 hdd 0.27249 osd.48 up 1.00000 1.00000

49 hdd 0.27249 osd.49 up 1.00000 1.00000

50 hdd 0.27249 osd.50 up 1.00000 1.00000

51 hdd 0.27249 osd.51 up 1.00000 1.00000

52 hdd 0.27249 osd.52 up 1.00000 1.00000

54 hdd 0.27249 osd.54 up 1.00000 1.00000

55 hdd 0.27249 osd.55 up 1.00000 1.00000

56 hdd 0.27249 osd.56 up 1.00000 1.00000

57 hdd 0.27249 osd.57 up 1.00000 1.00000

58 hdd 0.27249 osd.58 up 1.00000 1.00000

59 hdd 0.27249 osd.59 up 1.00000 1.00000

60 hdd 0.27249 osd.60 up 1.00000 1.00000

61 hdd 0.27249 osd.61 up 1.00000 1.00000

62 hdd 0.27249 osd.62 up 1.00000 1.00000

-7 4.08768 host amcvh13

0 hdd 0.27280 osd.0 up 1.00000 1.00000

63 hdd 0.27249 osd.63 up 1.00000 1.00000

64 hdd 0.27249 osd.64 up 1.00000 1.00000

65 hdd 0.27249 osd.65 up 1.00000 1.00000

66 hdd 0.27249 osd.66 up 1.00000 1.00000

68 hdd 0.27249 osd.68 up 1.00000 1.00000

69 hdd 0.27249 osd.69 up 1.00000 1.00000

70 hdd 0.27249 osd.70 up 1.00000 1.00000

71 hdd 0.27249 osd.71 up 1.00000 1.00000

72 hdd 0.27249 osd.72 up 1.00000 1.00000

73 hdd 0.27249 osd.73 up 1.00000 1.00000

74 hdd 0.27249 osd.74 down 0 1.00000

75 hdd 0.27249 osd.75 up 1.00000 1.00000

76 hdd 0.27249 osd.76 up 1.00000 1.00000

77 hdd 0.27249 osd.77 down 0 1.00000

# ceph -s

cluster:

id: ae713943-83f3-48b4-a0c2-124c092c250b

health: HEALTH_WARN

Reduced data availability: 31 pgs inactive, 14 pgs peering

Degraded data redundancy: 18368/1192034 objects degraded (1.541%), 23 pgs degraded, 23 pgs undersized

1 pools have too many placement groups

2 daemons have recently crashed

1225 slow ops, oldest one blocked for 59922 sec, daemons [osd.13,osd.2,osd.62,osd.74,osd.77] have slow ops.

services:

mon: 3 daemons, quorum amcvh11,amcvh12,amcvh13 (age 33h)

mgr: amcvh11(active, since 47h), standbys: amcvh12, amcvh13

osd: 45 osds: 42 up (since 15h), 42 in (since 14h); 27 remapped pgs

data:

pools: 3 pools, 993 pgs

objects: 397.36k objects, 1.5 TiB

usage: 1.1 TiB used, 10 TiB / 11 TiB avail

pgs: 3.122% pgs not active

18368/1192034 objects degraded (1.541%)

950 active+clean

11 active+undersized+degraded+remapped+backfill_wait

11 activating+undersized+degraded+remapped

10 peering

6 activating

4 remapped+peering

1 active+undersized+degraded+remapped+backfilling

progress:

Rebalancing after osd.2 marked in (16h)

[=======================.....] (remaining: 3h)

PG autoscaler decreasing pool 4 PGs from 512 to 128 (10h)

[==..........................] (remaining: 2w)

Here are my questions:

1. How to rebuild a ceph-cluster from scratch?

For rebuilding Ceph from scratch I found the following thread/procedure: https://forum.proxmox.com/threads/how-to-clean-up-a-bad-ceph-config-and-start-from-scratch.68949/

1.1 Is this the way to go or has somebody any additional suggestions?

2. It should be possible to restore vzdump backups to a rebuilt ceph storage - is that correct?

I have some vzdump backups on a shared NFS NAS. If I reconnect the NAS to the rebuilt cluster, will I be able to restore the VMs into a rebuilt RBD storage?

3. What exactly causes the Ceph health warnings, and why are the by far most frequent 'get_health_metrics reporting ...' messages repeated continuously for hours? How can this be stopped in a safe way?

My biggest concern is the flooding of syslog with the following messages, which starts out of the blue and which I cannot stop by restarting the reported OSDs with slow ops or by restarting the monitor of the node, as suggested in a forum thread. The only way to stop the health reporting was to shut down the related OSDs permanently.

This may be associated with the automatic appearance of a 'device_health_metrics' pool.

extract from syslog:

Jan 18 00:06:23 amcvh11 spiceproxy[4697]: restarting server

Jan 18 00:06:23 amcvh11 spiceproxy[4697]: starting 1 worker(s)

Jan 18 00:06:23 amcvh11 spiceproxy[4697]: worker 1370310 started

Jan 18 00:06:24 amcvh11 pveproxy[4648]: restarting server

Jan 18 00:06:24 amcvh11 pveproxy[4648]: starting 3 worker(s)

Jan 18 00:06:24 amcvh11 pveproxy[4648]: worker 1370311 started

Jan 18 00:06:24 amcvh11 pveproxy[4648]: worker 1370312 started

Jan 18 00:06:24 amcvh11 pveproxy[4648]: worker 1370313 started

# the following message is continuously repeated

Jan 18 00:06:24 amcvh11 ceph-osd[409163]: 2021-01-18T00:06:24.466+0100 7f4d26cab700 -1 osd.46 65498 get_health_metrics reporting 530 slow ops, oldest is osd_op(client.127411400.0:57227 4.12d 4.5e53992d (undecoded) ondisk+retry+read+known_if_redirected e65446)

Jan 18 00:06:25 amcvh11 ceph-osd[409163]: 2021-01-18T00:06:25.422+0100 7f4d26cab700 -1 osd.46 65498 get_health_metrics reporting 530 slow ops, oldest is osd_op(client.127411400.0:57227 4.12d 4.5e53992d (undecoded) ondisk+retry+read+known_if_redirected e65446)

# for almost 11 hours

Jan 18 10:55:36 amcvh11 ceph-osd[409163]: 2021-01-18T10:55:36.423+0100 7f4d26cab700 -1 osd.46 65498 get_health_metrics reporting 2510 slow ops, oldest is osd_op(client.127411400.0:57227 4.12d 4.5e53992d (undecoded) ondisk+retry+read+known_if_redirected e65446)
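To get an overview of which OSDs report slow ops and how the counts develop, without reading gigabytes of syslog by hand, something like the following could be used. It is only a minimal sketch that assumes the message format shown in the extract above; the function name and the regular expression are my own and not part of any Ceph tool.

```python
import re

# Matches the OSD slow-ops messages in the format seen in the syslog extract,
# e.g. "... osd.46 65498 get_health_metrics reporting 530 slow ops, ..."
SLOW_OPS_RE = re.compile(
    r"(?P<osd>osd\.\d+) \d+ get_health_metrics reporting (?P<count>\d+) slow ops"
)


def summarize_slow_ops(lines):
    """Summarize slow-ops messages per OSD.

    Returns a dict mapping the OSD name to the number of messages seen and
    the first, last and maximum reported slow-ops count.
    """
    summary = {}
    for line in lines:
        match = SLOW_OPS_RE.search(line)
        if match is None:
            continue  # not a slow-ops message (e.g. pveproxy, spiceproxy)
        osd = match.group("osd")
        count = int(match.group("count"))
        entry = summary.get(osd)
        if entry is None:
            summary[osd] = {
                "messages": 1,
                "first": count,
                "last": count,
                "max": count,
            }
        else:
            entry["messages"] += 1
            entry["last"] = count
            entry["max"] = max(entry["max"], count)
    return summary
```

Passing an open syslog file to it, for example `summarize_slow_ops(open(path))` with `path` pointing to the log file in question, returns one entry per reporting OSD, so it is easy to see whether the count is still growing.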

3.1 What does this message say in detail, apart from my understanding that the disk does not respond in time?

3.2 Why was the pool 'device_health_metrics' created and what is its function (see the output of ceph -s above)?

3.3 Why is ceph executing 'Rebalancing after osd.2 marked in' although I shut it down/out in order to replace the disk (see end of 'ceph -s' output above)?

3.4 Debugging 'slow ops' of osds seems to be a complex issue - I found lots of partly confusing information. Does anyone know a concise debugging checklist or related information which possibly includes the entire environment?

4. Can anybody elaborate on how to debug and manage a non-responsive VM running on Ceph RBD?

Any pointer is welcome!

5. Is there a safe procedure to completely remove a VM (stuck or not) and restore it from backup afterwards?

Again, any advice or pointer is highly appreciated

Sorry for the lengthy story and the bunch of questions. Any help or advice is highly appreciated!

If you need any further information or data, please let me know.
