Hello,

I have a Proxmox server hosting OMV in a VM.

For simplicity, performance and convenience, I want my data to live on my Proxmox ZFS pool.

I want to use OMV to manage Samba shares (among other things), and I also want to access the same data from other VMs/LXCs (Nextcloud, backup, Plex LXC, etc.).

I have now spent hours and hours reading A LOT of posts about accessing a ZFS pool/dataset on Proxmox, but I haven't found a complete solution or tutorial that explains a fast and easy way to do it.

The easiest, fastest approach I have found is the one below. It seems neat and makes sense, but it isn't explained in much detail for beginners and it has some typos ("briding ports"?!?):

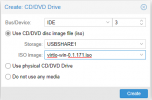

> - Created some ZFS datasets on Proxmox, and configured a network bridge (without briding ports, so like a "virtual network", in my case 192.168.1.0/28) between Proxmox and OMV (with a VirtIO NIC).
>
> - Then I created some NFS shares on Proxmox and connected to them via the RemoteMount plugin in OMV. Speed is like native (the VirtIO interface did an incredible 35 Gbit/s when I tested it with some iperf benchmarks), and now I don't need any passthrough. Works like a charm for me.
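To make the quoted setup a little more concrete, here is a minimal Python sketch (standard library only) of two details a beginner has to get right: which addresses the internal 192.168.1.0/28 bridge actually provides, and what an `/etc/exports` entry restricted to that subnet could look like. The dataset paths (`/tank/...`), the home LAN range (192.168.1.0/24) and the export options are assumptions for illustration, not details from the quoted post. If your LAN really is 192.168.1.0/24, the /28 overlaps it, and a separate private range (for example 10.10.10.0/28) would probably be the safer choice.

```python
import ipaddress

# Internal-only bridge subnet from the quoted post
# (a Linux bridge with no physical bridge port attached).
INTERNAL_NET = ipaddress.ip_network("192.168.1.0/28")

# ASSUMPTION: the home LAN is 192.168.1.0/24, a common router default.
# Replace with your real LAN subnet.
HOME_LAN = ipaddress.ip_network("192.168.1.0/24")

# HYPOTHETICAL ZFS dataset mountpoints on the Proxmox host.
DATASETS = ["/tank/media", "/tank/nextcloud", "/tank/backup"]


def usable_hosts(net):
    """Return the addresses that can be assigned to the host and the guests."""
    return list(net.hosts())


def export_line(path, net, options="rw,sync,no_subtree_check"):
    """Build one /etc/exports entry that only allows NFS clients on `net`.

    The default options are a common starting point, not a recommendation
    taken from the post; adjust them to your permission setup.
    """
    return f"{path} {net.with_prefixlen}({options})"


if __name__ == "__main__":
    hosts = usable_hosts(INTERNAL_NET)
    print(f"{INTERNAL_NET} has {len(hosts)} usable addresses: {hosts[0]} .. {hosts[-1]}")

    # Overlapping subnets make it ambiguous which interface traffic should use.
    if INTERNAL_NET.overlaps(HOME_LAN):
        print(f"WARNING: {INTERNAL_NET} overlaps {HOME_LAN}; consider a separate range")

    # One exports entry per dataset, limited to the internal bridge subnet.
    for path in DATASETS:
        print(export_line(path, INTERNAL_NET))
```

The Proxmox host and the OMV VM would each take one of the usable addresses on that bridge, and OMV's RemoteMount plugin would then point at the host's address on the internal subnet.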

It's a recurring problem that I've seen on a lot of forums, Reddit, etc., and I think this is the nicest way to do it for a "home" server with limited resources and power.

So, if an "expert" could explain how they would set this up, I would be very happy (and probably many others would be too).

Thanks a lot!