root@pve:~# lvdisplay
  --- Logical volume ---
  LV Name                data
  VG Name                pve
  LV UUID                FgW31E-N4dn-ItL7-flcQ-0esd-AM4c-c6Lq2i
  LV Write Access        read/write
  LV Creation host, time proxmox, 2023-11-08 10:47:57 +0100
  LV Pool metadata       data_tmeta
  LV Pool data           data_tdata
  LV Status              available
  # open                 0
  LV Size                337.86 GiB
  Allocated pool data    0.00%
  Allocated metadata     0.50%
  Current LE             86493
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:9

  --- Logical volume ---
  LV Path                /dev/pve/swap
  LV Name                swap
  VG Name                pve
  LV UUID                3Uwylu-BccP-96tl-RvDs-qWPp-FnpX-NO0ai6
  LV Write Access        read/write
  LV Creation host, time proxmox, 2023-11-08 10:47:42 +0100
  LV Status              available
  # open                 2
  LV Size                8.00 GiB
  Current LE             2048
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:0

  --- Logical volume ---
  LV Path                /dev/pve/root
  LV Name                root
  VG Name                pve
  LV UUID                PL1DzK-D3s2-jR35-JUVS-b7te-1HGA-tWEyRw
  LV Write Access        read/write
  LV Creation host, time proxmox, 2023-11-08 10:47:42 +0100
  LV Status              available
  # open                 1
  LV Size                96.00 GiB
  Current LE             24576
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:1

  --- Logical volume ---
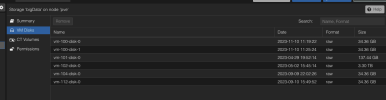

  LV Path                /dev/bigData/vm-101-disk-0
  LV Name                vm-101-disk-0
  VG Name                bigData
  LV UUID                YG1ABF-neuW-nHWI-VVR9-TqRp-g3yM-20bdcd
  LV Write Access        read/write
  LV Creation host, time pve, 2023-04-29 19:52:14 +0200
  LV Status              available
  # open                 0
  LV Size                128.00 GiB
  Current LE             32768
  Segments               2
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:5

  --- Logical volume ---
  LV Path                /dev/bigData/vm-102-disk-0
  LV Name                vm-102-disk-0
  VG Name                bigData
  LV UUID                XfogHO-NJ0k-jpk1-CODd-ca09-Qvau-xw58cy
  LV Write Access        read/write
  LV Creation host, time pve, 2023-05-02 15:45:14 +0200
  LV Status              available
  # open                 0
  LV Size                3.00 TiB
  Current LE             786432
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:6

  --- Logical volume ---
  LV Path                /dev/bigData/vm-104-disk-0
  LV Name                vm-104-disk-0
  VG Name                bigData
  LV UUID                AZLtLq-NSl3-1dOA-vegB-2YTb-AHem-7AmdTN
  LV Write Access        read/write
  LV Creation host, time pve, 2023-09-09 22:02:26 +0200
  LV Status              available
  # open                 0
  LV Size                32.00 GiB
  Current LE             8192
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:7

  --- Logical volume ---
  LV Path                /dev/bigData/vm-112-disk-0
  LV Name                vm-112-disk-0
  VG Name                bigData
  LV UUID                Ie4GlG-E6Dv-ZOFo-nwMv-EMi9-lxy2-cqiHwX
  LV Write Access        read/write
  LV Creation host, time pve, 2023-09-10 15:49:52 +0200
  LV Status              NOT available
  LV Size                32.00 GiB
  Current LE             8192
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto

  --- Logical volume ---
  LV Path                /dev/bigData/vm-100-disk-0
  LV Name                vm-100-disk-0
  VG Name                bigData
  LV UUID                E1f3jC-cpZO-BHdX-7aUM-kg0x-Mi2I-h7lLPA
  LV Write Access        read/write
  LV Creation host, time pve, 2023-11-10 11:19:22 +0100
  LV Status              available
  # open                 0
  LV Size                32.00 GiB
  Current LE             8192
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:4

  --- Logical volume ---
  LV Path                /dev/bigData/vm-100-disk-1
  LV Name                vm-100-disk-1
  VG Name                bigData
  LV UUID                9PULko-Wik8-gzEl-axXw-gcp7-abST-AuNJnI
  LV Write Access        read/write
  LV Creation host, time pve, 2023-11-10 11:25:24 +0100
  LV Status              available
  # open                 0
  LV Size                32.00 GiB
  Current LE             8192
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:8
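Two details in this output can be checked mechanically. Every `LV Size` equals `Current LE` times the extent size, and `bigData/vm-112-disk-0` reports `LV Status NOT available`, meaning it is not activated; that is why its block has no `# open`, `- currently set to` or `Block device` lines. The following is a minimal Python sketch, assuming the output has been saved as text. The helper names `parse_lvdisplay` and `check` are hypothetical, and the 4 MiB extent size is an assumption inferred from `swap` (8.00 GiB / 2048 LE); `vgdisplay` reports the actual `PE Size` of each volume group.

```python
# Minimal sketch: parse saved `lvdisplay` text and check each LV.
# Assumptions (not printed by lvdisplay): 4 MiB extents; helper names are hypothetical.

# Abbreviated excerpt of the output above, keeping only the fields used here.
SAMPLE = """\
  --- Logical volume ---
  LV Name                data
  VG Name                pve
  LV Status              available
  LV Size                337.86 GiB
  Current LE             86493

  --- Logical volume ---
  LV Name                swap
  VG Name                pve
  LV Status              available
  LV Size                8.00 GiB
  Current LE             2048

  --- Logical volume ---
  LV Name                vm-102-disk-0
  VG Name                bigData
  LV Status              available
  LV Size                3.00 TiB
  Current LE             786432

  --- Logical volume ---
  LV Name                vm-112-disk-0
  VG Name                bigData
  LV Status              NOT available
  LV Size                32.00 GiB
  Current LE             8192
"""

FIELDS = ("LV Name", "VG Name", "LV Status", "LV Size", "Current LE")
UNIT_MIB = {"MiB": 1, "GiB": 1024, "TiB": 1024 * 1024}
EXTENT_MIB = 4  # assumed; inferred from swap: 8.00 GiB / 2048 LE


def parse_lvdisplay(text):
    """Return one dict per '--- Logical volume ---' block (hypothetical helper)."""
    volumes = []
    for block in text.split("--- Logical volume ---")[1:]:
        lv = {}
        for line in block.splitlines():
            line = line.strip()
            for field in FIELDS:
                # The value is whatever follows the label, however it is padded.
                if line.startswith(field + " "):
                    lv[field] = line[len(field):].strip()
        volumes.append(lv)
    return volumes


def check(volumes):
    """Yield (vg, lv, status, size_matches_extents) per volume (hypothetical helper)."""
    for lv in volumes:
        value, unit = lv["LV Size"].split()
        size_mib = float(value) * UNIT_MIB[unit]
        extents_mib = int(lv["Current LE"]) * EXTENT_MIB
        # lvdisplay rounds sizes to two decimals in the printed unit,
        # so allow up to half of the last printed digit.
        matches = abs(size_mib - extents_mib) <= 0.005 * UNIT_MIB[unit]
        yield lv["VG Name"], lv["LV Name"], lv["LV Status"], matches


if __name__ == "__main__":
    for vg, name, status, matches in check(parse_lvdisplay(SAMPLE)):
        note = "" if status == "available" else "  (not activated)"
        print(f"{vg}/{name}: {status}, LE x {EXTENT_MIB} MiB matches size: {matches}{note}")
```

Because each value is taken as everything after a known label, the parser accepts both lvdisplay's aligned columns and text where label and value are separated by a single space.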