Dear Proxmox gurus!

First, please accept my deep respect, as the solution is absolutely amazing. I use it for a non-profit organization to help them manage their IT in an affordable way. It has been running for 5+ years; I am on version 6.4-13 at the moment and plan to go to 7 by Christmas. When in doubt, I study the documentation and read the forums, but there is one thing I would like someone to interpret for me, as I am afraid my understanding is not correct. I am struggling with how RAM is allocated and how swap is used. The reason is that in the past 3-5 months I have seen nearly regular bi-weekly VM restarts, most likely caused by hitting the RAM cap, since RAM usage has risen above its average of the past 5 years.

The setup is an HP ML server with an HBA, a 6-core Xeon and 86 GB RAM. The ZFS pool uses SSDs for logs and cache (mirrored to make sure a defective SSD does not corrupt the data, although the server is on a UPS) and four 7.2K drives in a mirror/stripe layout.

There is also 12 GB of ZRAM set up for swapping.

There are 4 VMs, all Windows 2012 R2 64-bit, and the RAM assigned is 32+3+3+4 GB = 42 GB in total. All machines have ballooning enabled and working.

Now comes my confusion.

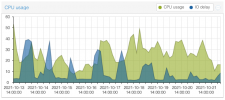

For many years I had swappiness set to 1 and the system was rock stable. zfs_arc_min and zfs_arc_max were not set, thus = 0. RAM usage was always around 75% for many years, with the same VMs running all the time. Most likely after some Proxmox update in May/June I started to experience random VM restarts, which I concluded were due to high RAM usage, because since about June I see RAM usage at 92-96% = 82 to 84 GB out of 86 GB. So I started to read more about RAM usage, swap and ARC size, and 3 weeks ago I ran a test with the following changes:

1) I limited the ARC to a minimum of 4 GiB and a maximum of 8 GiB in /etc/modprobe.d/zfs.conf (followed by update-initramfs -u).

2) I changed the swappiness (to 10, 20, 60 and 100, one value at a time, each for a couple of days):

sysctl -w vm.swappiness=60
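
For anyone checking the numbers in step 1: the ARC limits in /etc/modprobe.d/zfs.conf are given in bytes, so 4 GiB and 8 GiB have to be converted. Below is only a small sketch of that conversion. The function names gib_to_bytes and arc_conf_lines are made up for illustration, and the script prints the lines rather than writing the file.

```shell
#!/bin/sh
# Convert a whole number of GiB to bytes (1 GiB = 1024^3 bytes).
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Print the two option lines for /etc/modprobe.d/zfs.conf.
# Nothing is written to disk; the helper names are hypothetical.
arc_conf_lines() {
    min_gib=$1
    max_gib=$2
    echo "options zfs zfs_arc_min=$(gib_to_bytes "$min_gib")"
    echo "options zfs zfs_arc_max=$(gib_to_bytes "$max_gib")"
}

# Values used in my test: 4 GiB minimum, 8 GiB maximum.
arc_conf_lines 4 8
```

As far as I know, sysctl -w only changes swappiness until the next reboot, so each value I tested was a runtime setting and not a persistent one.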

My experience was that total RAM usage suddenly stayed at about 57 GB for the whole 2 weeks, which could theoretically be all the RAM allocated to the VMs plus the ZRAM for swap.

Swap usage was still 0 all the time, but what went up drastically were the IO delays, causing many replications to time out and making the backups with PBS take much longer.

I concluded that tuning zfs_arc_max was not a good step and reverted it (deleted the content of the /etc/modprobe.d/zfs.conf file).

Since the revert the system is again at 94-96% RAM usage, there are no timeouts on replications/backups and the system runs stable. Only I am not sure whether I will get another random VM crash/restart with such high RAM utilization.

And now I would like fellow gurus to clarify my following questions/assumptions:

1) ZFS/Proxmox by design uses about 50% of available RAM for filesystem caching, is that right? Was zfs_arc_max expected to limit the maximum amount of RAM used for this cache?

2) Swap is only used once RAM runs out, so is it correct to assume that in my case swap usage is 0 because the system barely ever runs out of RAM? Is there any difference (apart from performance) in the logic of using swap between swap on HDD and swap in RAM?

3) If ZFS is expected to use 50% of RAM for cache, is it correct not to allocate more than 50% of physical RAM to all virtual machines together, to be on the safe side?

4) When RAM runs out, is the expected behavior to use swap instead of crashing the VM? Can the VM crashes somehow be prevented in this particular case?

5) What is the exact logic behind swappiness set to 10 versus 90 in the situation described here, where the virtual machines have about 45% of physical RAM assigned in total and the cache uses another 50%? Will a change between 10 and 60 or 90 be noticeable in any way?
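
Related to question 4: so far I have only assumed that the restarts come from the host running out of RAM. Below is a minimal sketch of how that could be checked, assuming the kernel OOM killer is what stops the VM process and that its messages are still in the kernel log. The helper name oom_lines is hypothetical, and the patterns are the usual kernel wording, not something taken from my logs.

```shell
#!/bin/sh
# Keep only kernel log lines that look like OOM-killer activity.
# Reads from stdin so it can be fed from journalctl, dmesg or a saved log.
oom_lines() {
    grep -iE 'out of memory|oom-kill|invoked oom-killer'
}

# Usage on the host (not run by this script):
#   journalctl -k --no-pager | oom_lines
#   dmesg -T | oom_lines
```

If these commands show nothing around the time of a restart, my RAM theory would need another look.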

I know that the reddish RAM usage in the Proxmox GUI is more a psychological thing than anything else, but I would like to keep RAM usage below, say, 85-90% to "be on the safe side" while still giving the system most of the available resources without endangering stability.

My plan is to set zfs_arc_max to 32 GB so that performance during backups and replications is still OK, while using at most 85% of the RAM to prevent VM crashes, but I am not sure whether this is the best approach.
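
To put rough numbers on this plan, here is a small sketch of the arithmetic. It treats GB and GiB as the same and ignores host overhead and ZRAM, which are simplifying assumptions of mine. The name budget_pct is made up for illustration.

```shell
#!/bin/sh
# Percentage of host RAM covered by VM allocations plus the ARC ceiling.
# Integer arithmetic, so the result is rounded down.
budget_pct() {
    total_gb=$1
    vm_gb=$2
    arc_gb=$3
    echo $(( (vm_gb + arc_gb) * 100 / total_gb ))
}

# 32 GiB expressed in bytes, the unit zfs_arc_max expects.
echo "zfs_arc_max=$(( 32 * 1024 * 1024 * 1024 ))"

# 86 GB host RAM, 42 GB assigned to VMs, 32 GB ARC ceiling.
echo "VMs + ARC ceiling: $(budget_pct 86 42 32)% of RAM"

# Trying the limit without a reboot would be (as root, not run here):
#   echo 34359738368 > /sys/module/zfs/parameters/zfs_arc_max
```

By this estimate the VMs and a 32 GB ARC ceiling together already sit at about 86% of RAM before counting anything else on the host, so the 85% target would need a slightly lower ceiling.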

I also plan to disable swap completely, as I see it as 12 GB of wasted RAM, or to limit it to, say, 6 GB to free 6 more GB of RAM for cache.
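
Before changing this, it may be worth measuring how much of the swap is actually in use. This is a minimal sketch, assuming the usual /proc/swaps layout (header line, then size and used in KiB in columns 3 and 4). The helper name swap_totals is hypothetical.

```shell
#!/bin/sh
# Sum configured and used swap (in KiB) from /proc/swaps-style input.
# Prints "<total_size_kib> <total_used_kib>".
swap_totals() {
    awk 'NR > 1 { size += $3; used += $4 } END { printf "%d %d\n", size, used }'
}

# Report the totals of the running host if /proc/swaps is readable.
if [ -r /proc/swaps ]; then
    swap_totals < /proc/swaps
fi

# Turning off one zram swap device would be (as root, not run here):
#   swapoff /dev/zram0
```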

I have no other test environment where I could observe the behavior over a long time and during backups and replications, so I would rather ask before I do something really bad. I really like the system and do not want to push it beyond its configuration limits, as I admire its stability and do not want to break it just because of a lack of understanding.

P.S. If there are any config details you would like to see, please let me know.


Code:

zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

rpool 3.62T 510G 3.13T - - 41% 13% 1.00x ONLINE -

Code:

zpool status

pool: rpool

state: ONLINE

scan: scrub repaired 0B in 09:30:35 with 0 errors on Sun Oct 10 09:54:38 2021

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

wwn-0x50014ee004593460-part2 ONLINE 0 0 0

wwn-0x50014ee00459337b-part2 ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

wwn-0x50014ee059ae513a ONLINE 0 0 0

wwn-0x50014ee00459347d ONLINE 0 0 0

logs

mirror-2 ONLINE 0 0 0

wwn-0x55cd2e414dddc1ca-part1 ONLINE 0 0 0

wwn-0x55cd2e414dddf7cc-part1 ONLINE 0 0 0

wwn-0x55cd2e414dddc34e-part1 ONLINE 0 0 0

wwn-0x55cd2e414dddf74c-part1 ONLINE 0 0 0

cache

wwn-0x55cd2e414dddc1ca-part2 ONLINE 0 0 0

wwn-0x55cd2e414dddf7cc-part2 ONLINE 0 0 0

wwn-0x55cd2e414dddc34e-part2 ONLINE 0 0 0

wwn-0x55cd2e414dddf74c-part2 ONLINE 0 0 0

Code:

zramctl

NAME ALGORITHM DISKSIZE DATA COMPR TOTAL STREAMS MOUNTPOINT

/dev/zram5 lzo-rle 2G 4K 74B 12K 6 [SWAP]

/dev/zram4 lzo-rle 2G 4K 74B 12K 6 [SWAP]

/dev/zram3 lzo-rle 2G 4K 74B 12K 6 [SWAP]

/dev/zram2 lzo-rle 2G 4K 74B 12K 6 [SWAP]

/dev/zram1 lzo-rle 2G 4K 74B 12K 6 [SWAP]

/dev/zram0 lzo-rle 2G 7M 2M 2.4M 6 [SWAP]


Code:

/etc/modprobe.d/zfs.conf

options zfs zfs_arc_min=4294967296

options zfs zfs_arc_max=8589934592


Code:

arc_summary

------------------------------------------------------------------------

ZFS Subsystem Report Mon Oct 18 14:27:22 2021

Linux 5.4.140-1-pve 2.0.5-pve1~bpo10+1

Machine: pve01 (x86_64) 2.0.5-pve1~bpo10+1

ARC status: HEALTHY

Memory throttle count: 0

ARC size (current): 100.0 % 43.2 GiB

Target size (adaptive): 100.0 % 43.2 GiB

Min size (hard limit): 6.2 % 2.7 GiB

Max size (high water): 16:1 43.2 GiB

Most Frequently Used (MFU) cache size: 68.5 % 20.8 GiB

Most Recently Used (MRU) cache size: 31.5 % 9.6 GiB

Metadata cache size (hard limit): 75.0 % 32.4 GiB

Metadata cache size (current): 47.6 % 15.4 GiB

Dnode cache size (hard limit): 10.0 % 3.2 GiB

Dnode cache size (current): 0.7 % 22.7 MiB