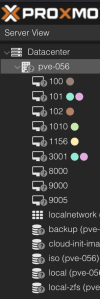

I just updated one of my PVE hosts to the most recent patches, and both VMs and storages now show an Unknown (?) status.

I found on the forums that restarting pvestatd should fix the VM status.

Running the following does recover the VM statuses, but not the storages:

Bash:

systemctl restart pvestatd

But after a reboot it's broken again...
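In case it helps narrow this down, here is a rough sketch of what could be collected right after a reboot, before restarting anything. The function name `collect_pvestatd_diag` is made up for illustration; the commands inside are standard systemd and PVE tools, and the 50-line tail is an arbitrary choice.

```shell
# Hypothetical helper (name is illustrative): gather pvestatd and storage
# state on the affected host. Run as root on the PVE node.
collect_pvestatd_diag() {
  # Is the status daemon running, and when did it start?
  systemctl status pvestatd --no-pager

  # Last 50 log lines from pvestatd for the current boot
  journalctl -u pvestatd -b --no-pager | tail -n 50

  # Storage status as PVE itself sees it; a storage that hangs here
  # may be what keeps the status in the GUI from updating
  pvesm status
}
```

Calling `collect_pvestatd_diag` once after boot and once after the restart should make it easier to compare the two states.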

Does anybody know of a more permanent fix?

Here are the package versions from that host:

Code:

proxmox-ve: 8.2.0 (running kernel: 6.8.8-1-pve)

pve-manager: 8.2.4 (running version: 8.2.4/faa83925c9641325)

proxmox-kernel-helper: 8.1.0

proxmox-kernel-6.8: 6.8.8-1

proxmox-kernel-6.8.8-1-pve-signed: 6.8.8-1

proxmox-kernel-6.8.4-3-pve-signed: 6.8.4-3

proxmox-kernel-6.5.13-5-pve-signed: 6.5.13-5

proxmox-kernel-6.5: 6.5.13-5

proxmox-kernel-6.5.11-4-pve-signed: 6.5.11-4

ceph-fuse: 18.2.2-pve1

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx8

ksm-control-daemon: 1.5-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.1

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.3

libpve-access-control: 8.1.4

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.0.7

libpve-cluster-perl: 8.0.7

libpve-common-perl: 8.2.1

libpve-guest-common-perl: 5.1.3

libpve-http-server-perl: 5.1.0

libpve-network-perl: 0.9.8

libpve-rs-perl: 0.8.9

libpve-storage-perl: 8.2.2

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.2.4-1

proxmox-backup-file-restore: 3.2.4-1

proxmox-firewall: 0.4.2

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.3

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.6

proxmox-widget-toolkit: 4.2.3

pve-cluster: 8.0.7

pve-container: 5.1.12

pve-docs: 8.2.2

pve-edk2-firmware: 4.2023.08-4

pve-esxi-import-tools: 0.7.1

pve-firewall: 5.0.7

pve-firmware: 3.12-1

pve-ha-manager: 4.0.5

pve-i18n: 3.2.2

pve-qemu-kvm: 8.1.5-6

pve-xtermjs: 5.3.0-3

qemu-server: 8.2.1

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.4-pve1
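For comparing this host against one that still works, a small helper like the one below could pull a single package's version out of a listing in the format above. This is only a sketch: `pkg_version` is a made-up name, and it assumes the listing is fed on stdin with one `name: version` entry per line.

```shell
# Hypothetical helper: print the version string for one package from a
# "name: version" listing read on stdin (format as in the list above).
pkg_version() {
  # Match only lines that start with "<pkg>: " and print the remainder,
  # so "proxmox-kernel-6.8" does not match "proxmox-kernel-6.8.8-1-pve-signed".
  awk -v pkg="$1" 'index($0, pkg ": ") == 1 { print substr($0, length(pkg) + 3); exit }'
}
```

With the listing saved to a file (say `versions.txt`, a placeholder name), `pkg_version libpve-storage-perl < versions.txt` would print that package's version, which can then be diffed between hosts.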
