I have a cluster of around 15 nodes.

Today I successfully upgraded PBS from 3.0 to 3.3.2 (rebooted, and a full backup/restore test worked).

Then I tried to upgrade a single node (from the GUI). Everything went normally and the system worked after the upgrade.

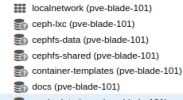

I initiated a reboot to make sure everything was stable afterwards, including the new kernel, but now I have issues connecting to the host via the GUI (SSH works). The node is not fully working: it has network share issues (all shares are marked with a gray question mark), and as a result VMs and LXCs fail to start.

Any tips on how to investigate the issue?

Solution:

Eventually I managed to stabilize the system.

For some reason, upgrading the Proxmox host from 8.0 to 8.3 and then rebooting it caused problems with its NFS mounts, which are hosted on a standalone TrueNAS server.

The TrueNAS share started generating errors (first on the upgraded host, which tried to connect after its reboot and failed), and then through the weekend more and more servers that mount the same NFS share had problems with it.

Fortunately, rebooting the TrueNAS server solved it.
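For anyone who hits the same symptom, here is a rough sketch of how I would check whether the NFS mounts on a node are actually responding. It is not a Proxmox tool, just a small Python script; the function names `parse_nfs_mounts` and `mount_responds` and the 5 second timeout are my own choices for illustration. It assumes a Linux host with `/proc/mounts` and probes each NFS mountpoint in a child process, so a hung share does not freeze the script itself.

```python
import subprocess
import sys

NFS_TYPES = {"nfs", "nfs4"}


def parse_nfs_mounts(mounts_text):
    """Return (source, mountpoint) pairs for NFS entries in /proc/mounts-style text.

    Note: /proc/mounts escapes spaces in paths as \\040; this sketch does not decode them.
    """
    found = []
    for line in mounts_text.splitlines():
        fields = line.split()
        # Fields are: source, mountpoint, fstype, options, dump, pass
        if len(fields) >= 3 and fields[2] in NFS_TYPES:
            found.append((fields[0], fields[1]))
    return found


def mount_responds(mountpoint, timeout=5.0):
    """Return True if statvfs() on the mountpoint finishes within `timeout` seconds."""
    # Run the probe in a child process: a stalled NFS mount can block the caller
    # indefinitely, so we only wait a bounded time for the child to finish.
    child = subprocess.Popen(
        [sys.executable, "-c", "import os, sys; os.statvfs(sys.argv[1])", mountpoint],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    try:
        return child.wait(timeout=timeout) == 0
    except subprocess.TimeoutExpired:
        # The kill may not take effect while the child is blocked on a hard mount,
        # so we deliberately do not wait for it again.
        child.kill()
        return False


if __name__ == "__main__":
    with open("/proc/mounts") as f:
        for source, mountpoint in parse_nfs_mounts(f.read()):
            status = "ok" if mount_responds(mountpoint) else "NOT RESPONDING"
            print(f"{mountpoint} ({source}): {status}")
```

If every NFS mount from the same server shows as not responding on several nodes, that points at the NFS server rather than at the upgraded node, which is what it turned out to be in my case.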

