Hi All,

I am looking for a solution to a slow memory leak (or memory growth) on some of the PVE nodes I manage.

I have PVE 6.2-6 installed (the issue also occurred with previous versions).

Almost all nodes use the same QNAP storage pool for VMs over NFSv4.

Almost all guest machines run Microsoft Windows (VirtIO drives, VirtIO network, guest agents installed, display configured as SPICE, i440fx machine type).

I can reclaim the leaked RAM by migrating a guest VM to another node and back (no reboot of the guest or of PVE is required).

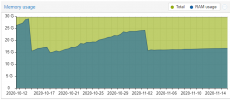

Using the past week as an example,

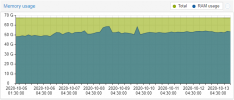

PVENode1 is growing slowly: 44 GB used 4 weeks ago, 48 GB 2 weeks ago, and 53 GB now (4 guest VMs).

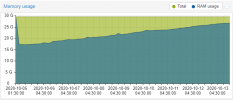

PVENode2 grew by 10 GB of RAM in 2 weeks without any guest VM changes or restarts (7 guest VMs).

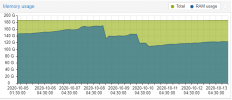

PVENode3 had grown to 170 GB of RAM, so half a week ago I used the migration trick to reclaim it. Usage came down to 108 GB and has started growing again; it is currently at 123 GB (17 guest VMs).

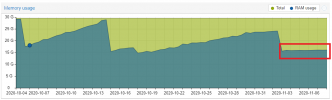

PVENode4 is similar to PVENode1: it had 43 GB used and has grown to 50 GB in the past week (5 guest VMs).

PVENode5 is similar to PVENode1, although its guest VMs changed in this period (3 guest VMs).
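To put the numbers above on a common scale, here is a rough sketch that turns them into a growth rate per week and per guest VM. The figures are copied from the node descriptions; the names `observations` and `growth_per_week` are just mine for illustration. PVENode5 is left out because its guests changed during the period, and the half-week window for PVENode3 is approximate.

```python
# Growth figures taken from the node descriptions in this post.
# Each entry: (growth in GB, weeks elapsed, number of guest VMs).
observations = {
    "PVENode1": (53 - 44, 4.0, 4),
    "PVENode2": (10, 2.0, 7),
    "PVENode3": (123 - 108, 0.5, 17),  # since the last migration, roughly
    "PVENode4": (50 - 43, 1.0, 5),
}


def growth_per_week(growth_gb, weeks):
    """Average RAM growth in GB per week over the observed window."""
    return growth_gb / weeks


rates = {}
for node, (growth_gb, weeks, vms) in observations.items():
    rate = growth_per_week(growth_gb, weeks)
    rates[node] = rate
    print(f"{node}: {rate:.2f} GB/week total, {rate / vms:.2f} GB/week per VM")
```

This works out to roughly 2.25, 5, 30 and 7 GB per week for PVENode1 to PVENode4. The PVENode3 figure covers only the half week right after a migration, so it may not be comparable with the others.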

Attached are example memory plots.

If you need more information, I may need some guidance on how to collect it.
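In case it helps narrow things down, below is a minimal sketch of how I could log the resident memory of each guest's QEMU process over time. It rests on two assumptions of mine that I have not confirmed: that the growth shows up in the QEMU process itself (migration starts a fresh process on the target node, which would fit), and that PVE keeps one pid file per running guest under `/var/run/qemu-server/<vmid>.pid`. The function names are my own.

```python
import re
from pathlib import Path

# Assumption: one pid file per running guest lives in this directory.
PID_DIR = Path("/var/run/qemu-server")


def parse_vmrss_kib(status_text):
    """Return the VmRSS value in KiB from /proc/<pid>/status text, or None."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def qemu_rss_by_vmid(pid_dir=PID_DIR):
    """Map VMID -> resident memory in GiB for every pid file found."""
    result = {}
    if not pid_dir.is_dir():
        return result  # not a PVE node, or the path assumption is wrong
    for pid_file in sorted(pid_dir.glob("*.pid")):
        try:
            pid = pid_file.read_text().strip()
            status = Path(f"/proc/{pid}/status").read_text()
        except OSError:
            continue  # guest stopped between listing and reading
        rss_kib = parse_vmrss_kib(status)
        if rss_kib is not None:
            result[pid_file.stem] = rss_kib / (1024 * 1024)
    return result


if __name__ == "__main__":
    for vmid, rss_gib in qemu_rss_by_vmid().items():
        print(f"VM {vmid}: {rss_gib:.1f} GiB resident")
```

Running this once a day on each node and comparing the output with each guest's configured memory should show whether one particular guest's process is growing or whether the growth is elsewhere on the host.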
