Trying to stay calm here (forgive me, I'm frustrated and can't find an answer). I've been really enjoying Proxmox, and it has brought a lot of joy to my world over the last 6 months.

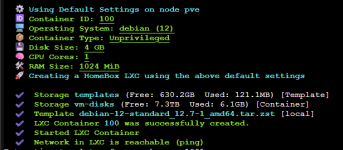

I was given a fantastic old Dell PowerEdge server with a lot of hardware (48 cores, 96 GB of RAM and 15 TB of storage). I watched a lot of YouTube to get my storage right. Long story short: after doing everything I learned to set up my storage (a template drive, ISO, container and VM disks, ZFS, and even connecting my Synology), I went to deploy a VM today and got an error, because for some reason every LXC or VM drops something on "local", and local is now FULL. "local" is the drive the Proxmox OS is installed on. On the Dell, it is two SSD cards in a hardware RAID.

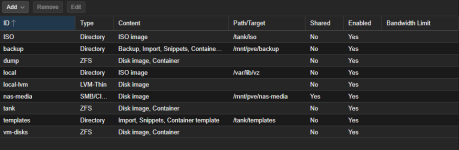

When you add storage under Datacenter, you can say this drive is for backups, this one is for snippets and templates, and this one is for ISOs, but NOWHERE have I found any information on what this means in practice: when I deploy a VM or LXC, this goes here and that goes there. I am sitting on literally 27 TB of drives, and for some reason my setup has vast amounts of empty space while Proxmox tries to cram the containers onto local, a 14 GB card from Amazon. No Google search has given me a clear answer to this, frankly, simple question. When I create a new LXC, I never get an option to choose where the container itself goes, only where the container's disks go. Local is automatically where the container is placed.

Again, I'm sure everyone is rolling their eyes, but I'm someone who is trying to learn and doing everything I can not to bother anyone. The Storage page on the Proxmox website goes deep on ZFS and so on, but I have yet to find anywhere that tells me: when I mark a drive (in Datacenter) as being for "THIS", it means "WHAT" is going to be put there when I deploy something.

I am now removing most of the LXCs I have spun up and trying to figure out how to move things off local and onto the other drives I set up for containers.

Can anyone tell me: when I deploy an LXC, what do I need to set a drive (or pool) to so the container gets deployed there? It only ever asks me where I want the VM's drive.

Thank you.