3 node Ceph works beautifully, but it has very limited recovery options and requires a lot of spare space on the OSDs if you want it to self-heal. I will try to explain myself, to complement @alexskysilk's replies:

No, Ceph will self heal and start to create a 3rd copy of everything on the remaining 2 nodes

It can't: the default CRUSH rule tells Ceph to place each replica on an OSD of a different host. If there are just two hosts, a third replica can't be created.

And with 3:2 setting, the pool will be read only until the 3rd replicas fully made on the remaining 2 hosts.

All PGs will be left in the "active+undersized" state and remain fully accessible. If any other OSD fails while one host is down, some PGs will be left with just one replica and will become inaccessible, pausing I/O on the VMs using those PGs (probably most if not all). After the default 10 minutes the downed OSD will be marked OUT and Ceph will start recovery, creating a second replica on the remaining OSDs of that host.
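To make the size/min_size behavior concrete, here is a minimal sketch. The function `pg_state` and its three labels are my own simplification for illustration; real Ceph reports more detailed PG states.

```python
def pg_state(size, min_size, available_replicas):
    """Very simplified PG state for a replicated pool.

    Real Ceph reports more detailed states (degraded, peered, ...);
    the three labels here are illustrative only.
    """
    if available_replicas >= size:
        return "active+clean"
    if available_replicas >= min_size:
        return "active+undersized"  # still serves reads and writes
    return "inactive"  # below min_size: I/O to this PG pauses


# 3:2 pool with one host down: each PG keeps 2 of its 3 replicas.
print(pg_state(size=3, min_size=2, available_replicas=2))
# A second OSD on another host fails: the PGs it held drop to 1 replica.
print(pg_state(size=3, min_size=2, available_replicas=1))
```

With a 3:2 pool, losing one host leaves the PGs writable; it is the second failure that pauses I/O.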

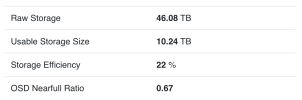

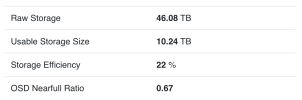

So with 3 nodes, 15 TB each, the total usable capacity with 3 replicas will be ~10 TB, safe nearfull ratio at 0.67, efficiency at 22%.

Not if you want Ceph to self-heal on the remaining OSDs of that host. To clarify, I mean without replacing the failed drive. On all my 3 host Ceph clusters I disable self-heal, so that I can start it manually once the failed drive is replaced and/or there's enough free space on the OSDs.

If you add a 4th node with 15 TB, the usable capacity will be ~15 TB and nearfull ratio can be raised to 0.75, efficiency at 25%.

Not if you want Ceph to self-heal on the remaining 3 nodes. The fourth node is usually added just to provide full host redundancy, with the bonus of adding some usable capacity. Capacity will be equivalent to a 3 node cluster, but you gain the chance to keep 3 full replicas after a full host failure. Also, planning for a single OSD failure will have much less impact on the available capacity of the whole cluster, as those third replicas may be created on the remaining disks of the host with the failed OSD and on the fourth node. If you plan on self-healing while one OSD is down and one host is down, capacity will be exactly as with 3 nodes.

With a 5th node with 15 TB, the usable capacity will be ~20 TB and nearfull ratio can be raised to 0.8, efficiency at 27%

The fifth and subsequent nodes do add capacity to a 3:2 replica pool, but the available space would be similar to what you calculated with 4 nodes (to allow recovery if one full node fails).
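A rough sketch of the "reserve one full host" arithmetic, assuming equally sized hosts, evenly distributed data and a fill limit of 0.85 (Ceph's default nearfull ratio; pick your own). The function `usable_tb` and its parameters are hypothetical names for illustration, not something a calculator or Ceph provides.

```python
def usable_tb(hosts, raw_per_host_tb, replicas=3, fill_ratio=0.85, reserve_hosts=0):
    """Usable capacity (TB) if the pool must still fit after `reserve_hosts` hosts fail.

    Assumes failure domain = host, equally sized hosts and even data distribution.
    """
    surviving = hosts - reserve_hosts
    if surviving < replicas:
        # With one replica per host there is nowhere to rebuild the missing replica.
        raise ValueError("not enough surviving hosts to hold all replicas")
    return surviving * raw_per_host_tb * fill_ratio / replicas


print(usable_tb(3, 15))                   # 3 nodes, no host reserve
print(usable_tb(4, 15, reserve_hosts=1))  # 4 nodes, able to self-heal after losing one
print(usable_tb(5, 15, reserve_hosts=1))  # 5 nodes, same reserve
```

Under these assumptions, 4 nodes with a one-host reserve give the same figure as 3 nodes without any reserve, and asking for a one-host reserve on 3 nodes raises an error because only two hosts would remain for three replicas.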

The calculation I said not correct said that the OP must calculate with a total of 50% capacity loss (25%+25% reduction) of one node's capacity (with 3 nodes and 3 replicas). If this is the correct calculation nobody willing to use Ceph to use 7 TB usable total on 3 nodes from 46 TB raw... That will be 15% efficiency only.

It seems none of those calculators take into account the number of OSDs per host, their size, and the space required for recovery if one or more of them fail. They seem to just provide the recommended capacity during normal usage.

But when we talk about self healing, Ceph not interested in what failed and where (OSDs from different nodes or one whole node, etc.) when doing healing

It absolutely cares about where to place the replicas, according to whatever you set in the CRUSH map. To get the behavior you describe, the failure domain must be set to OSD (and that opens the chance of having all 3 replicas on the same host, which defeats the whole purpose of Ceph unless you really know what you are doing). Again, the default rule sets the failure domain to host, places each replica on a different host, and will never create more than one replica on the same host.
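The difference between the two failure domains can be illustrated with a toy placement function. This is not the CRUSH algorithm; `place_replicas` is a hypothetical stand-in that only enforces the "one replica per host" versus "any OSD" constraint.

```python
def place_replicas(hosts, size, failure_domain="host"):
    """Toy stand-in for CRUSH: pick OSDs for one PG, honouring the failure domain.

    `hosts` maps a host name to the list of its working OSDs.
    """
    if failure_domain == "host":
        # At most one OSD per host.
        candidates = [osds[0] for osds in hosts.values() if osds]
    else:
        # Failure domain "osd": any OSD will do, even several on one host.
        candidates = [osd for osds in hosts.values() for osd in osds]
    return candidates[:size]


# host1 is down, only host2 and host3 remain.
remaining = {"host2": ["osd.2", "osd.3"], "host3": ["osd.4", "osd.5"]}
print(place_replicas(remaining, size=3, failure_domain="host"))
print(place_replicas(remaining, size=3, failure_domain="osd"))
```

With failure domain host, only two OSDs can be chosen, so the PG stays undersized. With failure domain OSD, three are chosen but two of them share a host.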

If a 15 TB node fails (which contains the safe maximum of 10 TB data with 0.67 nearfull ratio) there will be 5 TB free space on the remaining 2 nodes each, and the lost 10 TB of 3rd replicas can be recreated on the remaining 2 nodes 5-5 TB free capacity (in this case the 2 nodes will be total full and must not used, of course, but we not loose the whole pool because of out of space errors).

On a 3 node cluster, no recovery will be started automatically if one node fails, due to the default CRUSH rule of "one replica on an OSD of a different host", so there is no chance for any other OSD to become full.

But I'm very interested in links that support the other calculation method, because if I understand this wrong, I constantly under-spec my pools.

Okay, let's do it with an example to show you why you must reserve space to plan for a single OSD failure or disable automatic self-healing on 3 node Ceph clusters:

- 3 hosts

- 2 OSDs of 2TB each (host1: OSD.0, OSD.1, host2: OSD.2, OSD.3, host3: OSD.4, OSD.5)

- 1 pool with 3 replicas, min_size 2

- 12TB gross space (3 hosts x 2 OSD x 2TB), 4TB total usable space (12TB / 3 replicas).

- Ceph is 50% full, so 6TB is used on the OSDs, holding 2TB of data.

- OSD usage will probably be around 45-55%.

- Imagine that OSD.0 from host1 fails. The OSD service will stop and it will get marked DOWN.

- The pool is available, even if ~half of its PGs will be marked "undersized" because one of the three replicas is not available. The impact will be ~1/2 of the data of the cluster.

- Ceph will not recover anything just yet.

- After mon_osd_down_out_interval (default 600 secs), OSD.0 will be marked OUT. A new CRUSH map will be generated with just one OSD on host1 and two OSDs each on host2 and host3.

- All OSDs will know that ~half the PGs are missing a third replica that must be created. Where shall it be created? The three replicas must sit on OSDs of 3 different hosts. Given that host2 and host3 already have a replica, the third replica must be created on the only working OSD of host1: OSD.1.

- Given that OSD.1 was already at ~50% capacity, when creating the third replicas it will reach nearfull (85%), backfillfull (90%) and probably become 95% full, because OSD.0 was also at ~50% capacity. When an OSD is full, no write is possible to any PG for which the OSD is Primary, which essentially means that most if not all of the VMs will halt I/O, taking down the service and rendering the cluster useless.
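The example above can be put into numbers with a small sketch. It idealizes every OSD at exactly 50% and splits the lost data evenly over the surviving OSDs of the same host; the helper `usage_after_osd_out` is a hypothetical name, and the three ratios are Ceph's defaults.

```python
OSD_SIZE_TB = 2.0
# Ceph's default nearfull / backfillfull / full ratios
NEARFULL, BACKFILLFULL, FULL = 0.85, 0.90, 0.95

# TB used per OSD at 50% fill (6 TB used across 6 OSDs, idealized as even)
used_tb = {
    "host1": {"osd.0": 1.0, "osd.1": 1.0},
    "host2": {"osd.2": 1.0, "osd.3": 1.0},
    "host3": {"osd.4": 1.0, "osd.5": 1.0},
}


def usage_after_osd_out(used_tb, host, failed_osd):
    """Usage (TB) the surviving OSDs of `host` would need once `failed_osd` is OUT.

    With failure domain = host, the lost replicas can only be re-created
    on the other OSDs of the same host; this splits them evenly.
    """
    survivors = {o: tb for o, tb in used_tb[host].items() if o != failed_osd}
    if not survivors:
        raise ValueError("no OSD left on this host to recover onto")
    extra = used_tb[host][failed_osd] / len(survivors)
    return {o: tb + extra for o, tb in survivors.items()}


needed = usage_after_osd_out(used_tb, "host1", "osd.0")
ratio = needed["osd.1"] / OSD_SIZE_TB
crossed = [name for name, limit in
           [("nearfull", NEARFULL), ("backfillfull", BACKFILLFULL), ("full", FULL)]
           if ratio >= limit]
print(f"osd.1 would need {ratio:.0%} of its capacity; thresholds crossed: {crossed}")
```

In this idealized case the data that wants to land on OSD.1 equals its whole capacity, so every threshold is crossed before recovery could complete.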

Having more OSDs per host allows more usable space: e.g. with 8 OSDs per host, a single OSD failure will impact just ~1/8 of the data and recovery can be done on the 7 remaining OSDs. It's quite late for me to do the math, but I hope you get the idea.
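A rough version of that math, assuming equally sized OSDs, evenly distributed data and recovery confined to the same host: if each of k OSDs is filled to u, the k-1 survivors end up at u * k / (k - 1) after one OSD is lost, so u must stay at or below threshold * (k - 1) / k. The function name `max_safe_fill` and the 0.85 threshold (Ceph's default nearfull ratio) are my choices for illustration.

```python
def max_safe_fill(osds_per_host, threshold=0.85):
    """Highest per-OSD fill ratio that survives one OSD failure on a host.

    After the failure, each surviving OSD holds fill * k / (k - 1);
    this returns the fill that keeps that value at `threshold`.
    """
    if osds_per_host < 2:
        raise ValueError("need at least 2 OSDs per host to recover within the host")
    return threshold * (osds_per_host - 1) / osds_per_host


for k in (2, 4, 8):
    print(f"{k} OSDs per host: keep each OSD below {max_safe_fill(k):.1%}")
```

Under these assumptions, 2 OSDs per host leave you about 42.5% per OSD before self-healing becomes risky, while 8 OSDs per host allow roughly 74%.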