Hi, I'm hoping to get some clarification on how Ceph total data usage is represented in the Proxmox UI.

Info on the cluster & storage:

3 servers, each w/ 2x 1TB SSD + 10x 2TB HDD

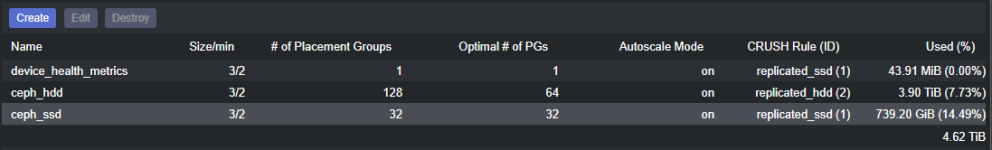

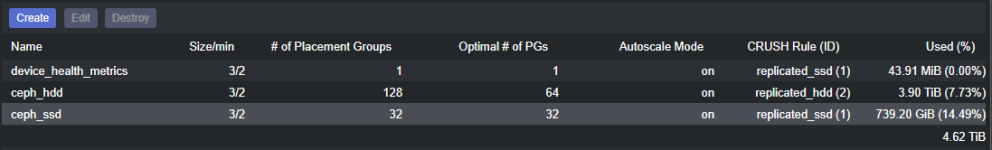

Ceph Pools: 2

#1: ceph_ssd (triple replication, host failure domain)

#2: ceph_hdd (triple replication, host failure domain)

Approximate max storage (after formatting):

~5.5TB raw SSD → ~1.8TB usable with triple replication

~54.6TB raw HDD → ~18.2TB usable with triple replication
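
For reference, here's a quick Python sketch of the capacity math above. It assumes the drive sizes are vendor decimal TB (10^12 bytes) and that Ceph/Proxmox display binary TiB (2^40 bytes). Under that assumption, most of the gap between the nominal 6TB / 60TB and the ~5.5 / ~54.6 figures is just the unit change. It ignores any headroom Ceph holds back (e.g. the OSD full ratio) and other overhead.

```python
# Capacity per device class, using the drive counts from this post.
# Assumption: drive vendors quote decimal TB (10**12 bytes), while
# Ceph/Proxmox display binary TiB (2**40 bytes).
TB = 10**12
TIB = 2**40

SERVERS = 3
REPLICAS = 3  # triple replication on both pools

def raw_tib(drives_per_server: int, drive_tb: float) -> float:
    """Total raw capacity across all servers, in TiB."""
    return SERVERS * drives_per_server * drive_tb * TB / TIB

def usable_tib(drives_per_server: int, drive_tb: float) -> float:
    """Capacity left for data when every object is stored REPLICAS times."""
    return raw_tib(drives_per_server, drive_tb) / REPLICAS

for name, count, size in [("ssd", 2, 1), ("hdd", 10, 2)]:
    print(f"{name}: raw {raw_tib(count, size):.2f} TiB, "
          f"usable {usable_tib(count, size):.2f} TiB")
# ssd: raw 5.46 TiB, usable 1.82 TiB
# hdd: raw 54.57 TiB, usable 18.19 TiB
```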

So, I have a couple of questions:

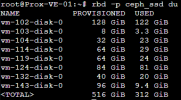

First, why does the GUI report 739.20 GiB used for ceph_ssd, while the CLI reports 312GB (x 3 replicas = 936GB)?
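
One thing I checked is whether units explain it, since the GUI shows binary GiB while "GB" may mean decimal gigabytes. A quick Python check of both readings, assuming the 312 figure is per copy (before replication):

```python
GIB = 2**30

gui_used_gib = 739.20  # "Used" shown for ceph_ssd in the GUI
cli_per_copy = 312     # CLI figure, before multiplying by the replica count
replicas = 3

# The CLI label "GB" could be decimal (10**9 bytes) or shorthand for GiB,
# so compute the expected raw usage under both interpretations.
as_gib_if_decimal = cli_per_copy * replicas * 10**9 / GIB
as_gib_if_binary = cli_per_copy * replicas

print(f"312 GB x 3 (decimal) = {as_gib_if_decimal:.2f} GiB")
print(f"312 GiB x 3          = {as_gib_if_binary:.2f} GiB")
print(f"GUI used / 3         = {gui_used_gib / replicas:.2f} GiB per copy")
```

Under either reading the expected raw usage (~872 GiB or 936 GiB) is still higher than the 739.20 GiB the GUI shows, so units alone don't seem to account for the difference.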

Also, why doesn't the GUI's % used account for the replication policy? If it did, it would show ~43.5% instead of 14.5%.
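
To make the second question concrete: a percentage only changes by the replica factor if its numerator and denominator are on different bases (one copy vs. all copies). This Python sketch uses the total implied by the two GUI numbers (approximate, since 14.5% is rounded). Whether the GUI's "Used" and total are both raw, both logical, or mixed is exactly what I'm unsure about, so both cases are shown.

```python
REPLICAS = 3

def pct(used: float, total: float) -> float:
    """Share of `total` occupied by `used`, as a percentage."""
    return 100.0 * used / total

# Total implied by the GUI figures (739.20 GiB shown as 14.5% used).
# Approximate, because the displayed percentage is rounded.
gui_used_gib = 739.20
implied_total_gib = gui_used_gib / 0.145

# Same basis: scaling both used AND total by REPLICAS cancels out.
same_basis = pct(gui_used_gib * REPLICAS, implied_total_gib * REPLICAS)

# Mixed basis: only if "Used" counted a single copy while the total were
# raw capacity would the raw fill level be REPLICAS times higher.
mixed_basis = pct(gui_used_gib * REPLICAS, implied_total_gib)

print(f"implied total: {implied_total_gib:.0f} GiB")
print(f"same basis:    {same_basis:.1f}%")
print(f"mixed basis:   {mixed_basis:.1f}%")
```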

Thanks for your help

Info on the cluster & storage:

3 servers, each w/ 2x 1TB SSD + 10x 2TB HDD

Ceph Pools: 2

#1: ceph_ssd (triple replication, host failure domain)

#2: ceph_hdd (triple replication, host failure domain)

Approx Max Storage (after formatting):

~5.5TB SSD >>> 1.8TB in triplicate

~54.6TB HDD >>> 18.2TB in triplicate

So, there are a couple questions I have here:

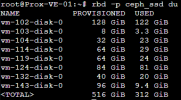

First, why does ceph_ssd report Used 739.20 GiB, but CLI reports 312GB x 3 = 936GB?

Also, why doesn't the GUI % used take into account the replication policy? So, instead of 14.5%, it would report ~43.5%.

Thanks for your help

Last edited: