As the title suggests, I'm attempting to pass through an HBA card to a TrueNAS VM and I'm not having much luck.

I'm new to Linux and Proxmox in general, so I could just be doing something glaringly wrong, but I'm unsure how to proceed at this point. I can see all the drives in Proxmox in the web GUI, and using `lsblk` they are all detected and can be accessed individually. When I start the VM, the boot fails with:

`TASK ERROR: start failed: QEMU exited with code 1`

The HDDs disappear from the web GUI, and they also disappear from `lsblk`, which now only shows sde (my boot drive). It should be noted that the HBA is still visible to Proxmox, and I can add and remove it as a device as normal. If I reboot the PC, the drives return.

If the HBA is not added as a PCI passthrough device, the TrueNAS VM boots successfully.
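Since the drives vanish from the host once the VM is started, it may help to see which kernel driver owns the HBA before and after the start attempt. Below is a minimal sketch that reads the driver symlink from sysfs. The function name `pci_driver` and the PCI address in the usage note are placeholders, not values taken from this system.

```shell
# Print the kernel driver currently bound to a PCI device, read from sysfs.
# Usage: pci_driver <pci-address> [sysfs-root]
# The optional second argument (default /sys) exists only so the function
# can be pointed at a test directory.
pci_driver() {
    addr=$1
    root=${2:-/sys}
    link="$root/bus/pci/devices/$addr/driver"
    if [ -L "$link" ]; then
        # The symlink target ends in the driver name, e.g. .../drivers/vfio-pci
        basename "$(readlink "$link")"
    else
        # No driver symlink means nothing is bound to the device
        echo "none"
    fi
}
```

For example, `pci_driver 0000:01:00.0` (placeholder address; the real one can be found with `lspci`) could be run once before starting the VM and once after the failed start to compare the two results.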

Configuration

I'm running the following specs:

- Processor - 7th gen Intel i5-7400

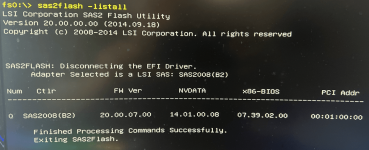

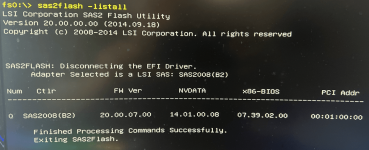

- HBA - LSI 9211-8i (flashed to IT mode)

- Motherboard - Gigabyte GA-H110M-S2H

- 8 GB RAM

- Boot SSD (internally connected to Motherboard)

- 4 SATA HDDs connected to the HBA

In the BIOS I can confirm that my motherboard and CPU support VT-d and that it is enabled. The HBA is also configurable through a SAS2 MPT Controller menu inside my motherboard BIOS.

On the Proxmox side

- My 4 HBA HDDs are labeled sda-sdd

- My Boot SSD is labeled sde

- `/etc/default/grub` contains: `GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt pcie_acs_override=downstream"`

- Initially my HBA was listed in the same IOMMU group as another device (6th-10th Gen Core Processor PCIe Controller (x16)), so I added the required `pcie_acs_override=downstream` parameter and the HBA's IOMMU group changed to 13

- Ran `update-grub` and this was confirmed to be successful

I have also modified `/etc/modules` to include:

- `vfio`

- `vfio_iommu_type1`

- `vfio_pci`

- `vfio_virqfd`

TrueNAS VM Configuration

- BIOS = SeaBIOS

- SCSI Controller = VirtIO SCSI single

- Qemu Agent = True

- Discard = True

- IO thread = True

- SSD emulation = True

- Async IO = Default (io_uring)

- 4 Core CPU

- 8GB RAM (This is the max RAM installed into the system)

- No changes to Network Settings
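The IOMMU grouping mentioned in the Proxmox section (the HBA moving to group 13 after the ACS override) can be re-checked from the host shell. The following is a rough sketch that walks `/sys/kernel/iommu_groups`. The function name `iommu_groups` is made up for illustration, and the optional directory argument exists only to make it testable.

```shell
# List every PCI device by IOMMU group, one "group address" pair per line.
# Usage: iommu_groups [iommu-groups-dir]
iommu_groups() {
    base=${1:-/sys/kernel/iommu_groups}
    for dev in "$base"/*/devices/*; do
        [ -e "$dev" ] || continue      # skip the unexpanded glob when no groups exist
        group=${dev#"$base"/}          # strip the leading directory
        group=${group%%/*}             # keep only the group number
        printf '%s %s\n' "$group" "${dev##*/}"
    done | sort -n                     # order numerically by group
}
```

Each address printed this way can be passed to `lspci -nns <address>` for a readable device name, which shows whether the HBA still shares its group with anything else.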

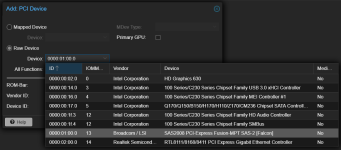

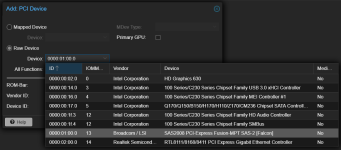

In terms of the TrueNAS hardware, I can select and add the HBA:

- All Functions = True

- For ROM-Bar I have tried both True and False, as some posts have suggested, and this has not made any difference

- PCI-Express cannot be enabled (Q35 only)

Any help or insight into this would be much appreciated.
