Hello,

I'm stuck with this issue.

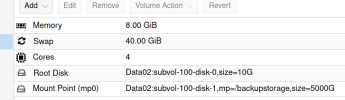

My guess is that it could be a ZFS quota issue, but no quotas were set, and inode usage is at 1%.

I need to relocate the LXC container's volumes to another (iSCSI) storage. Could the problem be on the source side?

The task log says:

```
Wiping dos signature on /dev/vg02/vm-100-disk-0.
Logical volume "vm-100-disk-0" created.
Creating filesystem with 2621440 4k blocks and 655360 inodes
Filesystem UUID: dca0f4b6-e4f3-458a-9543-a1b0dd580687
Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632
rsync: [receiver] write failed on "/var/lib/lxc/100/.copy-volume-1/var/urbackup/backup_server_files.db": No space left on device (28)
rsync error: error in file IO (code 11) at receiver.c(381) [receiver=3.2.7]
rsync: [sender] write error: Broken pipe (32)
Logical volume "vm-100-disk-0" successfully removed.
TASK ERROR: command 'rsync --stats -X -A --numeric-ids -aH --whole-file --sparse --one-file-system '--bwlimit=0' /var/lib/lxc/100/.copy-volume-2/ /var/lib/lxc/100/.copy-volume-1' failed: exit code 11
```
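The mkfs line in the log is enough to work out how large the new target volume is. The sketch below just redoes that arithmetic in POSIX shell, with the values copied from the log and "4k" taken to mean 4096-byte blocks (my assumption):

```shell
#!/bin/sh
# Figures copied from the task log line:
# "Creating filesystem with 2621440 4k blocks and 655360 inodes"
blocks=2621440
block_size=4096   # assumption: "4k" means 4096-byte blocks
inodes=655360

# The product exceeds 2^31, so this relies on 64-bit shell arithmetic.
size_bytes=$((blocks * block_size))
size_gib=$((size_bytes / 1024 / 1024 / 1024))
bytes_per_inode=$((size_bytes / inodes))

echo "target size: ${size_bytes} bytes = ${size_gib} GiB"
echo "bytes per inode: ${bytes_per_inode}"
```

Run with `sh`, it prints `target size: 10737418240 bytes = 10 GiB` and `bytes per inode: 16384`. So the new LV is exactly 10 GiB, the same as the 10G `refquota` on `Data02/subvol-100-disk-0`, which suggests (an inference, not something the log states) that the failing copy is disk-0 rather than the 4.88T disk-1.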

The server has 32 GB of RAM, and no VMs or containers are running.

`df -h` output:

```
Data02/subvol-100-disk-0   10G  4.5G  5.6G  45% /Data02/subvol-100-disk-0
Data02/subvol-100-disk-1  4.9T  2.4T  2.6T  49% /Data02/subvol-100-disk-1
```

`zfs get refquota Data02/subvol-100-disk-0` and `zfs get refquota Data02/subvol-100-disk-1`:

```
root@pve04:~# zfs get refquota Data02/subvol-100-disk-0
NAME                      PROPERTY  VALUE  SOURCE
Data02/subvol-100-disk-0  refquota  10G    local
root@pve04:~# zfs get refquota Data02/subvol-100-disk-1
NAME                      PROPERTY  VALUE  SOURCE
Data02/subvol-100-disk-1  refquota  4.88T  local
```

`df -i` output:

```
Data02/subvol-100-disk-0    11797360    85080    11712280 1% /Data02/subvol-100-disk-0
Data02/subvol-100-disk-1  5422031467 33072667  5388958800 1% /Data02/subvol-100-disk-1
```

Please advise what to do.
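To put the 1% inode usage in context for the target side, the sketch below compares the inodes used on `Data02/subvol-100-disk-0` (from `df -i`) with the 655360 inodes the new ext4 volume was created with. It assumes disk-0 is the volume being moved and that the copy needs roughly as many inodes as the source currently uses; the `pct_up` helper is just an illustrative name.

```shell
#!/bin/sh
# Inode figures for Data02/subvol-100-disk-0, copied from the df -i output.
src_total=11797360
src_used=85080
# Inode count of the new ext4 volume, from the mkfs line in the task log.
dst_total=655360

# Hypothetical helper: integer percentage of $1 out of $2, rounded up.
pct_up() { echo $(( ($1 * 100 + $2 - 1) / $2 )); }

src_pct=$(pct_up "$src_used" "$src_total")
dst_pct=$(pct_up "$src_used" "$dst_total")

echo "source inode use: ${src_pct}%"
echo "target inode use after copy: ${dst_pct}%"
```

This prints `source inode use: 1%` and `target inode use after copy: 13%`, so on these figures inodes alone should not fill the 10 GiB target.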
