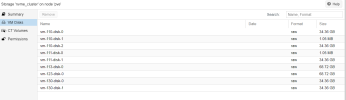

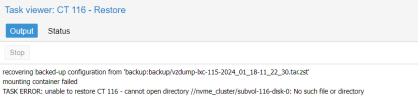

Following a mistake on my part, I had to restore a backup of my Proxmox host. However, in the meantime I had created a ZFS storage and migrated four VMs to it (110, 113, 123 and 130). After the restart, the ZFS storage was no longer visible, so I remounted it, but the disks of those VMs were not remounted and I don't see how to get them back.

Of course, they still occupy space on the ZFS storage, but as you can see in the `zfs list` output, there is nothing in their MOUNTPOINT column.

Bash:

root@pve:~# zfs list

NAME USED AVAIL REFER MOUNTPOINT

nvme_cluster 264G 114G 120K /nvme_cluster

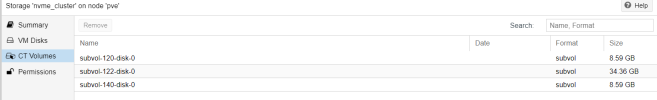

nvme_cluster/subvol-120-disk-0 490M 7.52G 490M /nvme_cluster/subvol-120-disk-0

nvme_cluster/subvol-122-disk-0 1.90G 30.1G 1.90G /nvme_cluster/subvol-122-disk-0

nvme_cluster/subvol-140-disk-0 467M 7.54G 467M /nvme_cluster/subvol-140-disk-0

nvme_cluster/vm-110-disk-0 50.0G 146G 17.5G -

nvme_cluster/vm-110-disk-1 3.05M 114G 56K -

nvme_cluster/vm-110-disk-2 32.5G 146G 56K -

nvme_cluster/vm-113-disk-0 22.0G 114G 22.0G -

nvme_cluster/vm-123-disk-0 86.8G 179G 21.7G -

nvme_cluster/vm-130-disk-0 35.0G 146G 2.52G -

nvme_cluster/vm-130-disk-1 35.0G 146G 2.54G -
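For context: a Proxmox ZFS storage keeps VM disks as ZFS volumes (zvols), which are block devices rather than mounted filesystems, so `zfs list` shows `-` in the MOUNTPOINT column for the `vm-<vmid>-disk-<n>` entries even when they are intact; the container `subvol-<id>-disk-<n>` datasets are filesystems and do get a mountpoint. The sketch below is a hypothetical helper (the name `zvol_devices` is made up here) that reads `zfs list -H -o name,mountpoint` output and prints each VM ID next to the `/dev/zvol/` device node of its disk. It assumes the default Linux zvol device path and the Proxmox naming scheme visible in the listing above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list Proxmox VM disks stored as ZFS volumes (zvols).
# Assumes input from `zfs list -H -o name,mountpoint` (tab-separated, no header)
# and the Proxmox naming scheme <pool>/vm-<vmid>-disk-<n>.
zvol_devices() {
  awk -F'\t' '
    # zvols have no mountpoint, so zfs list reports "-" for them
    $2 == "-" && $1 ~ /\/vm-[0-9]+-disk-[0-9]+$/ {
      n = split($1, parts, "/")   # last path component, e.g. vm-110-disk-0
      split(parts[n], f, "-")     # f[1]="vm", f[2]=VM ID, f[3]="disk", f[4]=index
      # print the VM ID and the block device node Linux creates for the zvol
      printf "%s\t/dev/zvol/%s\n", f[2], $1
    }
  '
}

# Example with a few rows from the listing (tab-separated, as with -H)
printf 'nvme_cluster\t/nvme_cluster\nnvme_cluster/subvol-120-disk-0\t/nvme_cluster/subvol-120-disk-0\nnvme_cluster/vm-110-disk-0\t-\nnvme_cluster/vm-130-disk-1\t-\n' | zvol_devices
```

On the host it would be fed directly with `zfs list -H -o name,mountpoint | zvol_devices`. It only reports which zvols exist and where their device nodes should be; it does not attach anything to the VMs.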

Last edited: