I think Disc-4TB is your sdb device and Disc2-4TB is your sdd, but let's get some more information about your system.

Can you give me the output of the following commands?

Code:

cat /etc/pve/storage.cfg

lsblk

findmnt
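If the LVM tools are installed, something like the following might answer the sdb/sdd question more directly, because it lists which physical volume backs each volume group. This is only a sketch: the function name `show_lvm_layout` is made up for illustration, and the commands are assumed to run as root on the Proxmox host.

```shell
#!/bin/sh
# Hypothetical helper (the name is illustrative, not part of Proxmox).
# Prints the LVM layout so storage names can be matched to disks.
show_lvm_layout() {
    # Bail out early if one of the tools is not installed.
    for tool in pvs vgs lsblk; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing tool: $tool" >&2
            return 1
        fi
    done

    # Physical volume -> volume group mapping (e.g. which disk holds VG "pool").
    pvs -o pv_name,vg_name,pv_size,pv_free

    # Per-VG summary: number of PVs and LVs, total and free space.
    vgs -o vg_name,pv_count,lv_count,vg_size,vg_free

    # FSTYPE shows LVM2_member for a disk initialised as a PV,
    # even when the volume group on it holds no logical volumes yet.
    lsblk -o NAME,SIZE,TYPE,FSTYPE,MOUNTPOINT
}
```

Calling `show_lvm_layout` after sourcing the snippet prints the three listings in order. The `vgname` values in `storage.cfg` can then be looked up in the `pvs` output to see which device each storage lives on.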

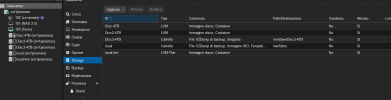

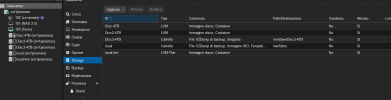

dir: local
        path /var/lib/vz
        content vztmpl,iso,backup

lvmthin: local-lvm
        thinpool data
        vgname pve
        content rootdir,images

lvm: Disc2-4TB
        vgname Disc2-4TB
        content images,rootdir
        nodes srv1proxmox
        shared 0

lvm: Disc-4TB
        vgname pool
        content rootdir,images
        shared 0

dir: Disc3-4TB
        path /mnt/pve/Disc3-4TB
        content snippets,backup
        is_mountpoint 1
        nodes srv1proxmox
        shared 0

root@srv1proxmox:~# lsblk

NAME                         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda                            8:0    0 465.8G  0 disk
├─sda1                         8:1    0  1007K  0 part
├─sda2                         8:2    0     1G  0 part /boot/efi
└─sda3                         8:3    0 464.8G  0 part
  ├─pve-swap                 253:0    0     8G  0 lvm  [SWAP]
  ├─pve-root                 253:1    0    96G  0 lvm  /
  ├─pve-data_tmeta           253:2    0   3.4G  0 lvm
  │ └─pve-data-tpool         253:7    0 337.9G  0 lvm
  │   ├─pve-data             253:8    0 337.9G  1 lvm
  │   ├─pve-vm--102--disk--0 253:9    0     5G  0 lvm
  │   ├─pve-vm--103--disk--0 253:10   0     4M  0 lvm
  │   └─pve-vm--103--disk--1 253:11   0     4M  0 lvm
  └─pve-data_tdata           253:3    0 337.9G  0 lvm
    └─pve-data-tpool         253:7    0 337.9G  0 lvm
      ├─pve-data             253:8    0 337.9G  1 lvm
      ├─pve-vm--102--disk--0 253:9    0     5G  0 lvm
      ├─pve-vm--103--disk--0 253:10   0     4M  0 lvm
      └─pve-vm--103--disk--1 253:11   0     4M  0 lvm
sdb                            8:16   0   3.6T  0 disk
├─pool-pool                  253:5    0  15.8G  0 lvm
└─pool-vm--103--disk--0      253:6    0     2T  0 lvm
sdc                            8:32   0   3.6T  0 disk
└─sdc1                         8:33   0   3.6T  0 part /mnt/pve/Disc3-4TB
sdd                            8:48   0   3.6T  0 disk

root@srv1proxmox:~# findmnt

TARGET                        SOURCE               FSTYPE     OPTIONS
/                             /dev/mapper/pve-root ext4       rw,relatime,errors=remount-ro
├─/sys                        sysfs                sysfs      rw,nosuid,nodev,noexec,relatime
│ ├─/sys/kernel/security      securityfs           securityfs rw,nosuid,nodev,noexec,relatime
│ ├─/sys/fs/cgroup            cgroup2              cgroup2    rw,nosuid,nodev,noexec,relatime
│ ├─/sys/fs/pstore            pstore               pstore     rw,nosuid,nodev,noexec,relatime
│ ├─/sys/firmware/efi/efivars efivarfs             efivarfs   rw,nosuid,nodev,noexec,relatime
│ ├─/sys/fs/bpf               bpf                  bpf        rw,nosuid,nodev,noexec,relatime,mode=700
│ ├─/sys/kernel/debug         debugfs              debugfs    rw,nosuid,nodev,noexec,relatime
│ ├─/sys/kernel/tracing       tracefs              tracefs    rw,nosuid,nodev,noexec,relatime
│ ├─/sys/fs/fuse/connections  fusectl              fusectl    rw,nosuid,nodev,noexec,relatime
│ └─/sys/kernel/config        configfs             configfs   rw,nosuid,nodev,noexec,relatime
├─/proc                       proc                 proc       rw,relatime
│ └─/proc/sys/fs/binfmt_misc  systemd-1            autofs     rw,relatime,fd=30,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=18175
├─/dev                        udev                 devtmpfs   rw,nosuid,relatime,size=16240924k,nr_inodes=4060231,mode=755,inode64
│ ├─/dev/pts                  devpts               devpts     rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000
│ ├─/dev/shm                  tmpfs                tmpfs      rw,nosuid,nodev,inode64
│ ├─/dev/mqueue               mqueue               mqueue     rw,nosuid,nodev,noexec,relatime
│ └─/dev/hugepages            hugetlbfs            hugetlbfs  rw,relatime,pagesize=2M
├─/run                        tmpfs                tmpfs      rw,nosuid,nodev,noexec,relatime,size=3255132k,mode=755,inode64
│ ├─/run/lock                 tmpfs                tmpfs      rw,nosuid,nodev,noexec,relatime,size=5120k,inode64
│ ├─/run/rpc_pipefs           sunrpc               rpc_pipefs rw,relatime
│ └─/run/user/0               tmpfs                tmpfs      rw,nosuid,nodev,relatime,size=3255128k,nr_inodes=813782,mode=700,inode64
├─/boot/efi                   /dev/sda2            vfat       rw,relatime,fmask=0022,dmask=0022,codepage=437,iocharset=iso8859-1,shortname=mixed,e
├─/mnt/pve/Disc3-4TB          /dev/sdc1            ext4       rw,relatime
├─/var/lib/lxcfs              lxcfs                fuse.lxcfs rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other
└─/etc/pve                    /dev/fuse            fuse       rw,nosuid,nodev,relatime,user_id=0,group_id=0,default_permissions,allow_other
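To line the storage names up with the `lsblk` tree, it can help to pull just the storage ID and `vgname` pairs out of `storage.cfg`. The snippet below is a rough sketch, not an official Proxmox tool: the function name `list_lvm_storages` is hypothetical, and it assumes the usual layout of one `type: id` header line followed by property lines.

```shell
#!/bin/sh
# Hypothetical helper: print "<storage-id> <vgname>" for every LVM-backed
# storage (types lvm and lvmthin) found in a storage.cfg-style file.
# Defaults to /etc/pve/storage.cfg when no file is given.
list_lvm_storages() {
    awk '
        # Section header, e.g. "lvm: Disc-4TB" or "lvmthin: local-lvm".
        /^[a-z]+:[ \t]/ {
            kind = $1
            sub(/:$/, "", kind)
            id = $2
            next
        }
        # Property line inside an lvm or lvmthin section.
        (kind == "lvm" || kind == "lvmthin") && $1 == "vgname" {
            print id, $2
        }
    ' "${1:-/etc/pve/storage.cfg}"
}
```

Run against the configuration posted above, `list_lvm_storages` would print `local-lvm pve`, `Disc2-4TB Disc2-4TB` and `Disc-4TB pool`. In the `lsblk` output the `pool-*` volumes sit under sdb, which matches the storage Disc-4TB (volume group `pool`). Nothing with a `Disc2--4TB-` prefix is listed under any disk, but `lsblk` only shows logical volumes, so that alone does not tell whether the volume group Disc2-4TB exists on sdd.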