Hello,

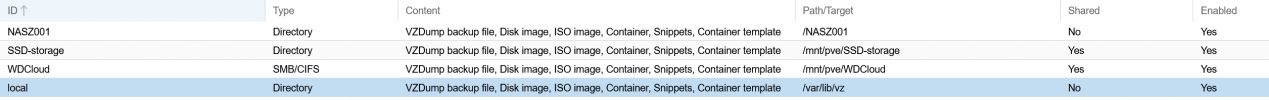

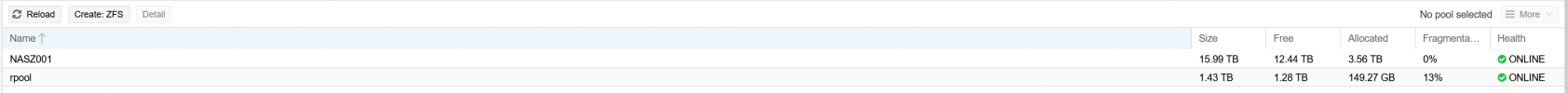

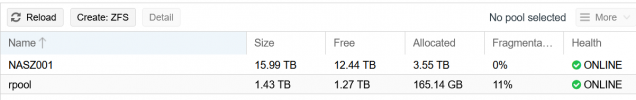

My tale of woe begins with one of the six 240 GB SSDs used as my system disk failing. Since fragmentation in the zpool was at 77%, I decided to reinstall the system on the same disks to see whether the problem recurred. I restored my VMs from backup and have been working happily, thinking everything was fine, until I decided to back up a VM and things got weird. The backup error message says the storage for the VM's system volume does not exist. I think I have found the volume, but I don't know how to fix this so that I can back up my VM.

Thanks in advance for your help!

Code:

INFO: starting new backup job: vzdump 104 --compress zstd --notes-template '{{guestname}}' --node pve001 --remove 0 --storage NASZ001 --mode snapshot

INFO: Starting Backup of VM 104 (qemu)

INFO: Backup started at 2023-02-12 09:30:18

INFO: status = running

INFO: VM Name: UbuntuToStatic

INFO: include disk 'scsi0' 'local-zfs:vm-104-disk-0' 32G

INFO: exclude disk 'efidisk0' 'local-zfs:vm-104-disk-1' (efidisk but no OMVF BIOS)

ERROR: Backup of VM 104 failed - storage 'local-zfs' does not exist

INFO: Failed at 2023-02-12 09:30:18

INFO: Backup job finished with errors

TASK ERROR: job errors

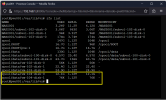

Code:

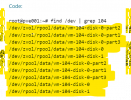

root@pve001:~# find /dev | grep 104

/dev/zvol/rpool/data/vm-104-disk-0-part2

/dev/zvol/rpool/data/vm-104-disk-0-part1

/dev/zvol/rpool/data/vm-104-disk-0-part3

/dev/zvol/rpool/data/vm-104-disk-0

/dev/zvol/rpool/data/vm-104-disk-1

/dev/rpool/data/vm-104-disk-0-part2

/dev/rpool/data/vm-104-disk-0-part1

/dev/rpool/data/vm-104-disk-0-part3

/dev/rpool/data/vm-104-disk-0

/dev/rpool/data/vm-104-disk-1

root@pve001:~# ls /dev/zvol/rpool/data/ -lah | grep 104

lrwxrwxrwx 1 root root 13 Feb 11 12:24 vm-104-disk-0 -> ../../../zd80

lrwxrwxrwx 1 root root 15 Feb 11 12:24 vm-104-disk-0-part1 -> ../../../zd80p1

lrwxrwxrwx 1 root root 15 Feb 11 12:24 vm-104-disk-0-part2 -> ../../../zd80p2

lrwxrwxrwx 1 root root 15 Feb 11 12:24 vm-104-disk-0-part3 -> ../../../zd80p3

lrwxrwxrwx 1 root root 13 Feb 11 12:24 vm-104-disk-1 -> ../../../zd48

root@pve001:~# ls /dev/rpool/data/ -lah | grep 104

lrwxrwxrwx 1 root root 10 Feb 11 12:24 vm-104-disk-0 -> ../../zd80

lrwxrwxrwx 1 root root 12 Feb 11 12:24 vm-104-disk-0-part1 -> ../../zd80p1

lrwxrwxrwx 1 root root 12 Feb 11 12:24 vm-104-disk-0-part2 -> ../../zd80p2

lrwxrwxrwx 1 root root 12 Feb 11 12:24 vm-104-disk-0-part3 -> ../../zd80p3

lrwxrwxrwx 1 root root 10 Feb 11 12:24 vm-104-disk-1 -> ../../zd48
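The zvols are clearly present under `rpool/data`, so the error may mean only that no storage entry named `local-zfs` is defined on the reinstalled node. Below is a minimal sketch for checking that. It assumes the storage definitions live in `/etc/pve/storage.cfg` and that each entry starts with a header line of the form `<type>: <id>` (for example `zfspool: local-zfs`). The function name `storage_defined` is hypothetical, not part of any Proxmox tool.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit 0) if a storage ID has a section header
# in a Proxmox-style storage.cfg, fail otherwise.
# Assumption: section headers look like "<type>: <id>", e.g. "zfspool: local-zfs".
storage_defined() {
    id="$1"
    cfg="${2:-/etc/pve/storage.cfg}"   # assumed default location
    # Match a whole header line so that e.g. "local" does not match "local-zfs".
    grep -Eq "^[a-z0-9]+:[[:space:]]+${id}[[:space:]]*\$" "$cfg"
}
```

For example, `storage_defined local-zfs || echo "local-zfs is not defined"` would report whether the entry is missing. If it is, re-adding a ZFS storage with the ID `local-zfs` that points at `rpool/data` is one plausible fix, though that is a guess based on the paths above and not something confirmed here.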
