Beginner-friendly guide for setting up an Ubuntu LXC container (CT) with device passthrough on Proxmox VE 8.4 for a Frigate installation.

The guide installs everything step by step, including passthrough of a Google Coral Edge TPU and an Intel iGPU for OpenVINO. It can also be used for generic device passthrough.

Hopefully this guide helps.

- Create and configure an LXC container with device passthrough in Proxmox VE 8.4

- Install Docker

- Install Frigate inside the container

- Set up Coral Edge TPU acceleration and OpenVINO

Tutorial Video Guide:

https://youtu.be/qN6A4SQMK10

Related Resources:

Proxmox VE Device Passthrough Guide: https://pve.proxmox.com/pve-docs/pve-admin-guide.html#_configuration_14

Frigate Installation Docs: https://docs.frigate.video/frigate/installation

Coral TPU Installation: https://coral.ai/docs/m2/get-started/#2a-on-linux

Reference Setup:

- Lenovo P330 Tiny Workstation (ThinkStation) with Intel CPU i7-8700T and 32 GB RAM

- M.2 Accelerator with Dual Edge TPU installed on M.2 NGFF E-key WiFi Network Card to M-key SSD Adapter

- Proxmox VE 8.4.1

- LXC Ubuntu 24.04-standard

Important: Check the Prerequisite Steps in Proxmox VE Host (listed at the end of this guide) before creating the CT

LXC CT Creation

- Download CT Template

- Create CT

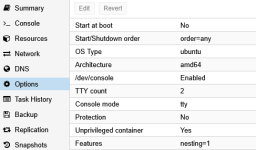

- Unprivileged container=Y, Nesting=Y

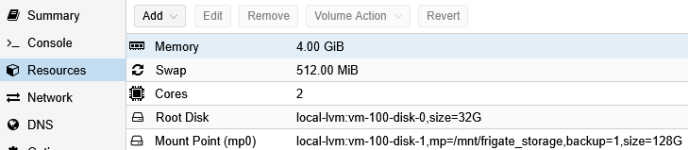

- Disks (rootfs): Configure disk size as required (e.g. 32G)

- Disks (Add, for Frigate recording storage): Configure disk size as required (e.g. 128G), Path=/mnt/frigate_storage

- Memory: Configure memory as required (e.g. 4 GiB)

- Network (IPv4): Configure as required (e.g. DHCP)

- Initial setup of passthrough devices

- Get device information (group and group ID) from the host

```bash
root@host:~# ls -al /dev/dri
total 0
drwxr-xr-x  3 root root        100 Apr  1 12:28 .
drwxr-xr-x 18 root root       4800 Apr  1 23:01 ..
drwxr-xr-x  2 root root         80 Apr  1 12:28 by-path
crw-rw----  1 root video  226,   1 Apr  1 12:28 card1
crw-rw----  1 root render 226, 128 Apr  1 12:28 renderD128
root@host:~# grep video /etc/group
video:x:44:
root@host:~# grep render /etc/group
render:x:104:
```

For Coral Edge TPU (skip this if you don't have a Coral Edge TPU)

```bash
root@host:~# ls -al /dev/apex*
crw-rw---- 1 root apex 120, 0 Apr  1 12:28 /dev/apex_0
root@host:~# grep apex /etc/group
apex:x:1000:root
```
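The owning group name and its numeric ID can also be read in one step with `stat`. This is only a minimal sketch: the helper name `show_dev_group` is made up for illustration, and it assumes GNU `stat` (as shipped with Proxmox VE and Ubuntu). The device paths are the ones used in this guide and may differ on other hardware.

```shell
# Print "<path> group=<name> gid=<id>" for every path that exists.
# show_dev_group is a hypothetical helper name, not a Proxmox tool.
show_dev_group() {
    for dev in "$@"; do
        if [ -e "$dev" ]; then
            stat -c '%n group=%G gid=%g' "$dev"
        else
            echo "$dev not present"
        fi
    done
}

# Device nodes used in this guide; adjust for your hardware.
show_dev_group /dev/dri/card1 /dev/dri/renderD128 /dev/apex_0
```

Running the same helper later inside the CT gives a quick side-by-side comparison of host and CT group IDs.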

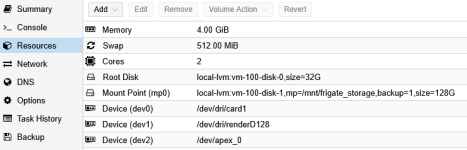

- Add Device Passthrough via Proxmox GUI config

Resources > Add > Device Passthrough. Leave "GID in CT" at its default for now; it is configured later. The screenshot already has the GID configured (please ignore that).

- Start the Frigate CT and get the device information (group ID) from inside the Frigate CT

Inside the CT, the group ID of video is 44 and the group ID of render is 108. The devices are still owned by group root at this point.

```bash
root@frigate:~# ls -al /dev/dri
total 0
drwxr-xr-x 2 root root       80 Apr  1 16:38 .
drwxr-xr-x 7 root root      520 Apr  1 16:38 ..
crw-rw---- 1 root root 226,   1 Apr  1 16:38 card1
crw-rw---- 1 root root 226, 128 Apr  1 16:38 renderD128
root@frigate:~# grep video /etc/group
video:x:44:
root@frigate:~# grep render /etc/group
render:x:108:
```

For Coral Edge TPU

The apex group does not exist in the CT yet.

```bash
root@frigate:~# ls -al /dev/apex*
crw-rw---- 1 root root 120, 0 Apr  1 16:38 /dev/apex_0
root@frigate:~# grep apex /etc/group
root@frigate:~#
```

- For Coral Edge TPU: Add the apex group in the Frigate CT, because it does not exist there yet

```bash
root@frigate:~# sh -c "echo 'SUBSYSTEM==\"apex\", MODE=\"0660\", GROUP=\"apex\"' >> /etc/udev/rules.d/65-apex.rules"
root@frigate:~# groupadd -g 1000 apex
root@frigate:~# adduser $USER apex
root@frigate:~# grep apex /etc/group
apex:x:1000:root
root@frigate:~# cd /etc/udev/rules.d
root@frigate:/etc/udev/rules.d# cat 65-apex.rules
SUBSYSTEM=="apex", MODE="0660", GROUP="apex"
```
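Because the group IDs on the host and in the CT can differ (render is 104 on the host but 108 in the CT in this guide), it may help to print all three in one go. The sketch below is an illustration only; `group_gid` is a made-up helper name, and it simply parses an `/etc/group`-style file.

```shell
# Print the numeric GID of a group from an /etc/group-style file.
# $1 = group name, $2 = group file (defaults to /etc/group)
# group_gid is a hypothetical helper name used for illustration.
group_gid() {
    awk -F: -v g="$1" '$1 == g { print $3 }' "${2:-/etc/group}"
}

# Groups used in this guide; "missing" means the group does not exist yet.
for g in video render apex; do
    gid=$(group_gid "$g")
    echo "$g: ${gid:-missing}"
done
```

Run it once on the host and once in the CT; the values printed in the CT are the ones to enter as "GID in CT" in the next steps.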

- Shutdown Frigate CT

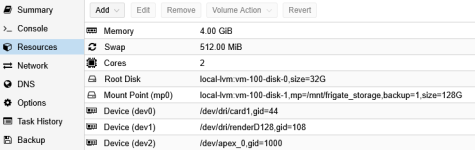

- Change GID for Device Passthrough via Proxmox GUI config

Resources — Edit — Device(s) - Start Frigate CT

- Check groupid of passthrough device in Frigate CT. Group has been changed accordingly (video, render, apex)

Bash:root@frigate:~# ls -al /dev/dritotal 0 drwxr-xr-x 2 root root 80 Apr 2 00:22 . drwxr-xr-x 7 root root 520 Apr 2 00:22 .. crw-rw---- 1 root video 226, 1 Apr 2 00:22 card1 crw-rw---- 1 root render 226, 128 Apr 2 00:22 renderD128 root@frigate:~# ls -al /dev/apex_0 crw-rw---- 1 root apex 120, 0 Apr 2 00:22 /dev/apex_0
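The GUI steps can probably also be done from the host shell with `pct set` and the `dev[n]` option. The sketch below only prints the commands so they can be reviewed first. The container ID 105 and the helper name `print_pct_cmds` are hypothetical; the GIDs are the in-CT values from this guide (video 44, render 108, apex 1000).

```shell
# Hypothetical container ID; replace with the ID of your Frigate CT.
VMID=105

# Print (do not run) one "pct set" command per passthrough device.
# Each argument has the form "<index>:<device path>:<GID inside the CT>".
print_pct_cmds() {
    for spec in "$@"; do
        idx=${spec%%:*}
        rest=${spec#*:}
        path=${rest%%:*}
        gid=${rest#*:}
        echo "pct set $VMID --dev$idx $path,gid=$gid"
    done
}

print_pct_cmds 0:/dev/dri/card1:44 1:/dev/dri/renderD128:108 2:/dev/apex_0:1000
```

After checking the printed lines, they can be pasted into the host shell while the CT is shut down. The resulting entries should appear as `dev0:`, `dev1:`, `dev2:` lines in the CT's config file under /etc/pve/lxc/.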

- Update the CT (and reboot when required)

```bash
apt-get update
apt-get upgrade
shutdown -r now
```

Docker Installation on CT LXC

- Uninstall all conflicting packages:

```bash
for pkg in docker.io docker-doc docker-compose docker-compose-v2 podman-docker containerd runc; do sudo apt-get remove $pkg; done
```

- Set up Docker's apt repository

```bash
# Add Docker's official GPG key:
apt-get install ca-certificates curl
install -m 0755 -d /etc/apt/keyrings
curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:
echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
  $(. /etc/os-release && echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
apt-get update
apt-get upgrade
```

- Install Docker packages

```bash
apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
```

- Verify the Docker installation by running the hello-world image

```bash
service docker start
docker run hello-world
```

Post Installation Docker

- Set up Docker to run without root privileges

```bash
groupadd docker
sudo usermod -aG docker $USER
newgrp docker # to activate without relogin
```

- Verify that the docker command can be run

```bash
docker run hello-world
```

- Configure Docker to start on boot with systemd

```bash
systemctl enable docker.service
systemctl enable containerd.service
```

Frigate Installation

- Prepare the Frigate Docker configuration

```bash
mkdir -p /opt/frigate/config
```

- Prepare docker-compose.yml (/opt/frigate/docker-compose.yml)

Change the group IDs in the group_add section to match your setup. In this guide, the group IDs are 44 (video), 104 (render), 1000 (apex)

```yaml
services:
  frigate:
    container_name: frigate
    privileged: true # this may not be necessary for all setups # disable after configuration
    restart: unless-stopped
    image: ghcr.io/blakeblackshear/frigate:stable
    cap_add:
      - CAP_PERFMON
    group_add:
      - "44"
      - "104"
      - "1000"
    shm_size: "288mb" # update for your cameras based on calculation above
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - /opt/frigate/config:/config
      - /mnt/frigate_storage:/media/frigate
      - type: tmpfs # Optional: 1GB of memory, reduces SSD/SD Card wear
        target: /tmp/cache
        tmpfs:
          size: 1000000000
    ports:
      - "8971:8971"
      - "8554:8554" # RTSP feeds
      # - "5000:5000" # Internal unauthenticated access. Expose carefully.
      # - "8555:8555/tcp" # WebRTC over tcp
      # - "8555:8555/udp" # WebRTC over udp
    devices:
      - /dev/dri/renderD128:/dev/dri/renderD128 # For intel hwaccel, needs to be updated for your hardware
      - /dev/dri/card1:/dev/dri/card1
      - /dev/apex_0:/dev/apex_0 # For Coral Edge TPU, enable this. Passes a PCIe Coral, follow driver instructions here https://coral.ai/docs/m2/get-started/#2a-on-linux
      # - /dev/bus/usb:/dev/bus/usb # Passes the USB Coral, needs to be modified for other version
      # - /dev/video11:/dev/video11 # For Raspberry Pi 4B
    environment:
      FRIGATE_RTSP_PASSWORD: "myrtsppassword"
      FRIGATE_ME_RTSP_USER: "myuser"
      FRIGATE_ME_RTSP_PASS: "mypass"
```
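The shm_size comment refers to a calculation that is not reproduced in this guide. As recalled from the Frigate installation docs, each camera needs roughly (width x height x 1.5 x 20 + 270480) / 1048576 MB for its detect stream, plus about 40 MB in total for logs. Treat the formula as an assumption and check it against the current Frigate docs; `shm_mb` is a made-up helper name.

```shell
# Estimated shared memory in MB for ONE camera's detect stream.
# Formula as recalled from the Frigate docs (an assumption; verify it).
# $1 = detect width, $2 = detect height
shm_mb() {
    awk -v w="$1" -v h="$2" \
        'BEGIN { printf "%.2f\n", (w * h * 1.5 * 20 + 270480) / 1048576 }'
}

# Example: one 1280x720 detect stream.
shm_mb 1280 720
```

Add up the result for every camera, add the allowance for logs, and round up generously when setting shm_size.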

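- Bring up the Frigate container (run this from /opt/frigate, where docker-compose.yml is located)

```bash
root@frigate:~# cd /opt/frigate
root@frigate:/opt/frigate# docker compose up -d
```

- Frigate is now up on port 8971. Browse to https://ip_address:8971 to log in. Get the initial admin user and password from the Frigate log.

```bash
root@frigate:~# docker logs frigate
```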
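The log can be long, so filtering it may make the initial credentials easier to spot. This is a rough sketch: it assumes the relevant log lines contain the words "user" or "password", which is a guess about the log wording rather than something confirmed here, and `extract_creds` is a made-up helper name.

```shell
# Keep only lines that mention a user or password (case-insensitive).
# extract_creds is a hypothetical helper; the keywords are an assumption.
extract_creds() {
    grep -iE 'user|password' || true
}

# Only query Docker when it is installed; "frigate" is the
# container_name used in this guide's docker-compose.yml.
if command -v docker >/dev/null 2>&1; then
    docker logs frigate 2>&1 | extract_creds
fi
```

If nothing is printed, fall back to reading the full output of `docker logs frigate`.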

- Go to the menu Settings - System Metrics and verify

Frigate Configuration via the Frigate browser UI (Settings - Configuration Editor) or by directly editing /opt/frigate/config/config.yml

- Example config for adding a Coral Edge TPU detector

```yaml
##### Detector tpu #####
detectors:
  coral1:
    type: edgetpu
    device: pci
```

- Example config for adding an OpenVINO detector

```yaml
##### Detector OpenVino #####
detectors:
  ov:
    type: openvino
    device: GPU

model:
  width: 300
  height: 300
  input_tensor: nhwc
  input_pixel_format: bgr
  path: /openvino-model/ssdlite_mobilenet_v2.xml
  labelmap_path: /openvino-model/coco_91cl_bkgr.txt
```

- Example config for adding a CPU detector

```yaml
##### Detector cpu #####
detectors:
  cpu1:
    type: cpu
    num_threads: 3
    model:
      path: "/custom_model.tflite"
  cpu2:
    type: cpu
    num_threads: 3
```

Prerequisite Steps in Proxmox VE Host

- IMPORTANT: Disable Secure Boot in the Proxmox host BIOS

- Enable Device Passthrough

Add the following option to /etc/default/grub:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt"

Run update-grub and reboot

```bash
update-grub
```

Install the following packages:

```bash
apt-get install intel-gpu-tools intel-media-va-driver i965-va-driver
```

Add the modules to /etc/modules. On kernel 6.2 or newer, vfio_virqfd is part of the vfio module and can be omitted.

```
vfio
vfio_iommu_type1
vfio_pci
vfio_virqfd
```

Enable the modules

```bash
update-initramfs -u -k all
```

Reboot and verify

```bash
cat /proc/cmdline
lsmod | grep vfio
dmesg | grep -e DMAR -e IOMMU -e AMD-Vi
```
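Once IOMMU is active, the kernel exposes the groups under /sys/kernel/iommu_groups. The following sketch lists each group with the PCI addresses in it; the function name `list_iommu_groups` is made up, and the optional argument exists only so the function can be pointed at another directory.

```shell
# List each IOMMU group and the PCI addresses assigned to it.
# $1 = sysfs root for IOMMU groups (defaults to the standard path)
# list_iommu_groups is a hypothetical helper name.
list_iommu_groups() {
    root=${1:-/sys/kernel/iommu_groups}
    found=0
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue
        found=1
        group=${dev#"$root"/}   # strip the root prefix
        group=${group%%/*}      # keep only the group number
        echo "IOMMU group $group: ${dev##*/}"
    done
    [ "$found" -eq 1 ] || echo "no IOMMU groups found under $root"
}

list_iommu_groups
```

If the function reports that no groups were found, IOMMU is most likely not enabled, so recheck the GRUB option and the BIOS settings.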

- Enable Intel GPU Monitoring in CT

Set the kernel parameter kernel.perf_event_paranoid to 0 on the host.

Check the current value

```bash
root@host:~# sysctl kernel.perf_event_paranoid
kernel.perf_event_paranoid = 4
```

Temporary change. Restart Frigate to see the result under Settings - System Metrics

```bash
root@host:~# sh -c 'echo 0 > /proc/sys/kernel/perf_event_paranoid'
```

Make the change permanent across reboots

```bash
root@host:~# sh -c 'echo kernel.perf_event_paranoid=0 >> /etc/sysctl.d/local.conf'
root@host:~# cat /etc/sysctl.d/local.conf
kernel.perf_event_paranoid=0
```

Reboot
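Appending with `>>` adds a duplicate line every time the command is repeated. A small guard avoids that. This is a minimal sketch with a made-up helper name (`persist_sysctl`); it only skips exact duplicates and does not rewrite an existing line that sets a different value. The demo writes to a temporary file rather than to /etc.

```shell
# Append "key=value" to a sysctl drop-in file only if that exact
# line is not already present.
# $1 = key, $2 = value, $3 = target file
# persist_sysctl is a hypothetical helper name.
persist_sysctl() {
    line="$1=$2"
    touch "$3"
    grep -qxF "$line" "$3" || echo "$line" >> "$3"
}

# Demo on a temporary file: the second call changes nothing.
demo=$(mktemp)
persist_sysctl kernel.perf_event_paranoid 0 "$demo"
persist_sysctl kernel.perf_event_paranoid 0 "$demo"
cat "$demo"
rm -f "$demo"
```

On the host, the target file would be /etc/sysctl.d/local.conf. Running `sysctl --system` afterwards should load the drop-in files without waiting for a reboot.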

- Enable Coral Edge TPU

Follow the driver instructions at https://coral.ai/docs/m2/get-started/#2a-on-linux

or refer to the guide "Installation Coral Dual Edge TPU runtime on Linux Proxmox VE"
